Underestimating Rural Broadband Coverage

One of the ways mass media fails the public interest is by choosing to “titillate, rather than educate and inform,” as Chief Justice Burger wrote in the Supreme Court’s 1981 Chandler v. Florida opinion. This propensity is on display in today’s LA Times story on the dispute between NOAA and the FCC on the potential for 5G interference with ATMS weather satellites.

The Times writer mentions the irrelevant fact that Ajit Pai briefly worked for Verizon many years ago but fails to mention that NOAA has still not published its engineering analysis. Another common failure is simply not presenting the facts accurately.

The Atlanta Journal-Constitution committed both offenses in a recent story titled “Internet far slower in Georgia than reported.” This story substituted advocacy speed tests for impartial, technically accurate ones, and also substituted the FCC’s broadband deployment report for its speed test report.

Speed Testing is Hard, but Not That Hard

We’ve written volumes about broadband speed testing on the Forum, and we’ve also written conference papers on the subject. To get accurate speed tests you have to isolate the extraneous factors that influence observed speed, and you have to measure between rationally chosen points.

Speeds of local Wi-Fi networks vary a lot, so you should measure wire speed directly on the wire. Personal computers can be slow or heavily loaded, so you have to run the test on something like a SamKnows white box (as the FCC does) or design it to overcome local effects (as Speedtest by Ookla does).

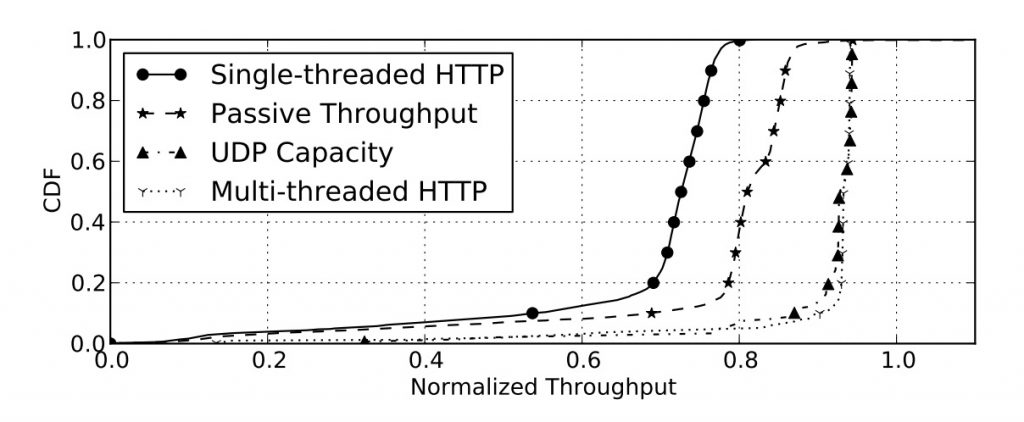

It’s impossible to saturate a broadband link with a single TCP stream, so you need to run multiple streams in parallel and sum their results (as Speedtest does) or you need to throw out all measurements except the fastest one at each source (as Akamai’s Average Peak Connection Speed measurement does). You also need to use a test server that can keep up with the fastest broadband connection, as everyone but the Google/Open Technology Institute M-Lab system does.
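The two aggregation approaches described above can be sketched in a few lines. This is only an illustration of the arithmetic, not the actual Speedtest or Akamai implementation; the function names and the sample figures are hypothetical.

```python
from statistics import mean


def aggregate_parallel(stream_mbps):
    """Sum the throughput of streams that ran at the same time."""
    return sum(stream_mbps)


def peak_per_source(tests_by_source):
    """Keep only the fastest measurement seen from each source,
    then average those peaks across sources."""
    peaks = {src: max(vals) for src, vals in tests_by_source.items()}
    return peaks, mean(peaks.values())


# Hypothetical per-stream results (Mbps) from one multi-stream test.
streams = [110.0, 120.5, 115.25, 114.25]
total = aggregate_parallel(streams)

# Hypothetical repeated single measurements (Mbps) from two sources.
history = {"source-a": [20.0, 35.5, 28.0], "source-b": [90.0, 72.0]}
peaks, average_peak = peak_per_source(history)

print(f"summed parallel streams: {total} Mbps")
print(f"per-source peaks: {peaks}, average peak: {average_peak} Mbps")
```

Either way, the point is the same: no single stream's number is treated as the capacity of the link.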

Four Errors in M-Lab Design

The credulous AJC writers got their information from M-Lab, a system that has been shown to radically understate broadband speed by design. It only uses one TCP connection, so it’s incapable of filling the broadband pipe to capacity. According to MIT’s research, M-Lab’s NDT servers fail at this task: “In the NDT dataset we examine later in this paper, 38% of the tests never made use of all the available network capacity.” (p. 2)

This fact is so well known that modern browsers use 8 – 16 TCP connections at the same time. TCP is a way of slicing broadband capacity up so that multiple applications can share a single wire. A single TCP connection can’t fill a pipe more than 80% full, even at 10 Mbps.
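One well-known reason a single connection can fall short is that TCP can have at most one window of data in flight per round trip, so throughput is capped at window size divided by round-trip time. The sketch below works through that ceiling. It is not the source of the 80% figure above, and the window size, round-trip time, and link speed are assumptions chosen for illustration (modern stacks scale windows well beyond 64 KiB).

```python
import math


def window_limited_mbps(window_bytes, rtt_seconds):
    """Upper bound on one connection's throughput: one window per round trip."""
    return window_bytes * 8 / rtt_seconds / 1_000_000


def streams_to_fill(link_mbps, window_bytes, rtt_seconds):
    """Smallest number of such connections whose combined ceiling
    reaches the link speed."""
    per_stream = window_limited_mbps(window_bytes, rtt_seconds)
    return math.ceil(link_mbps / per_stream)


# Assumed values: 64 KiB window, 50 ms round trip, 100 Mbps link.
WINDOW = 64 * 1024
RTT = 0.050
per_stream = window_limited_mbps(WINDOW, RTT)
needed = streams_to_fill(100, WINDOW, RTT)

print(f"one stream tops out near {per_stream:.2f} Mbps")
print(f"streams needed to reach 100 Mbps: {needed}")
```

Under these assumptions a lone stream reports a small fraction of what the link can carry, which is why a multi-stream test gives a more representative number.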

Second, speed tests are run between a device under test and a test server. If either end of the test is underpowered, you’re not measuring the connection as much as you’re measuring the connected device. M-Lab servers are underpowered for a global network. And measuring gigabit networks is especially challenging even with adequate resources.

Oddball Design Choices

Third, M-Lab employs a bizarre geo-location algorithm to match a test user with a test server. Sometimes, running an M-Lab test from my office in Denver causes M-Lab to choose a test server located in Denver that is three networks away, …den01.measurement-lab.org. This server reports 202.07 Mbps because the route to the server goes through Dallas and Albuquerque on the Hurricane Electric transit network.

At other times, M-Lab chooses den04 or den05, also in Denver but routed more directly via the Telia transit network. This test reports a speed of 413.87 Mbps, much better but still slower than the 460 Mbps reported by Speedtest. So my measured speed depends on which transit network the service has elected to purchase and not on my broadband provider.

Finally, NDT uses a system known as “bottleneck bandwidth and round-trip propagation time” (BBR) to evaluate network capacity instead of probing for maximum capacity. BBR is meant to be used in production settings to share bandwidth politely within an enterprise, so it has its uses. When used for capacity measurement it is guaranteed to underestimate capacity because its goal is nice sharing rather than peak performance.

Compounding Underestimation Errors

AJC’s source, the Google-sponsored M-Lab consortium, has also provided data to allies that wrote a report on rural broadband deployment and performance in Pennsylvania. The M-Lab-allied researchers attempt to geo-locate NDT tests on a map and then to visualize service boundaries around these guesses about location.

The method is fraught with difficulty of three kinds: 1) NDT understates speeds; 2) locations are inaccurate; and 3) the absence of a test in a given area isn’t proof that there is no service there. The M-Lab friends admit part of this:

However, even with this enormous compendium of data, the best one can do is create a picture of the general areas where tests have come from and where there are “voids” where no tests were received. In essence, the project is “painting” a pointillist-style map where each test is represented by a “dot” and collectively, these dots help us determine areas that may either have no connectivity, or where no one ran a measurement test.
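The difference between “no connectivity” and “no one ran a measurement test” can be made explicit when test locations are binned into map cells. The sketch below is a hypothetical illustration, not the method used in the Pennsylvania report; the cell size, coordinates, and function names are all assumptions. An empty cell is labeled “no data,” never “no service.”

```python
import math
from collections import Counter


def bin_tests(points, cell_deg=0.1):
    """Count tests per grid cell; a cell is identified by the floor of
    latitude and longitude divided by the cell size."""
    counts = Counter()
    for lat, lon in points:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts


def classify(cell, counts):
    """An empty cell only tells us that nobody tested there."""
    return "tests observed" if counts.get(cell, 0) > 0 else "no data"


# Hypothetical geo-located test points (latitude, longitude).
tests = [(40.01, -77.19), (40.03, -77.15), (40.55, -76.95)]
counts = bin_tests(tests)

print(classify((400, -772), counts))
print(classify((401, -772), counts))
```

Treating the second result as a service gap would require evidence the test data alone cannot supply.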

So why publish a claim about areas lacking service that is guaranteed to underestimate broadband performance and deployment? Researchers could do much better by utilizing Speedtest by Ookla data because performance estimates are more honest and the significantly larger database of tests allows for higher resolution mapping.

Conclusions

The only conclusion that I can draw is that the M-Lab Consortium wants to understate rural broadband deployment in order to overstate the rural broadband gap. There certainly is such a gap, so honest estimation isn’t going to make it worse.

We can also conclude that journalists are struggling to understand the issues around broadband performance and mapping. This has always been the case, but only recently has misunderstanding of broadband performance been harnessed as a tool for increasing state subsidies for rural networks.

There’s an ethical question behind the M-Lab campaign to exaggerate the rural broadband gap: Is it justifiable to misrepresent the facts (to lie, actually) in order to provoke a legislative reaction that improves rural life? If we believe that the end justifies the means, we’re going to be OK with this deceptive campaign.

But if the campaign succeeds and the legislatures of Georgia and Pennsylvania shift funding from other priorities to rural broadband, will we be able to detect progress? We should see some, but probably not as much as we ought to.

So it’s probably best to follow the well-worn path of truthfulness: honesty is the best policy for improving policy, as President Lincoln might have said. Rural broadband needs improvement, but we needn’t lie about how much is needed and where.