EFF’s Engineers Letter Avoids Key Issues About Internet Regulation

One of the more intriguing comments filed with the FCC in the “Restoring Internet Freedom” docket is a letter lambasting the FCC for failing to understand how the Internet works. The letter – organized and filed by the Electronic Frontier Foundation – recycles an amicus brief filed in US Telecom’s legal challenge to the 2015 Open Internet Order. The FCC letter fortifies the amicus with new material that beats up the Commission and stresses the wonders of Title II.

The new letter is signed by 190 people, nearly four times as many as the 52 who signed the original letter. Some signers played important roles in the development of the early Internet and in producing the 8,200 or so specification documents – RFCs – that describe the way the Internet works. But many signatories didn’t, and some aren’t even engineers.

The letter includes a ten-page (double-spaced) “Brief Introduction to the Internet”, a technical description of the Internet that seeks to justify the use of Title II regulations to prevent deviation from the status quo. The letter mischaracterizes DNS in a new section titled “Cross-Layer Applications Enhance Basic Infrastructure”. More on that later.

EFF Offers Biased Description of Internet Organization

Any attempt to describe the Internet in ten double-spaced pages is going to offer a big target to fact-checkers. This is only enough space to list the titles of the last six months’ worth of RFCs, one per line. But we do want something more than an Internet for Dummies introduction from the people who offer their expert assessment of the facts as justification for a particular legal policy. Unfortunately, the letter comes up short in that regard.

The first two and a half pages simply say that the Internet is composed of multiple networks: ISPs, so-called “backbones”, and edge services. These networks connect according to interconnection agreements, which the letter somewhat incorrectly calls “peering arrangements”.

This description leaves out the transit networks that connect small ISPs to the rest of the Internet (for a fee) in the absence of individualized peering agreements. This is an important omission because the overview sets up a complaint about the tradition of requiring symmetrical traffic loads as a condition to settlement-free peering.

When transit networks interconnect, settlement-free peering requires symmetrical traffic loads because asymmetry would imply that one network is providing transit for the other. Because transit is a for-fee service, it obviously would be bad business to give the service away for free. The letter fails to mention this, but insists on pointing out that ISPs provision services asymmetrically.
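The symmetry test behind settlement-free peering can be sketched in a few lines. This is only an illustration: the function names and the 2:1 threshold are my own assumptions, and real peering policies set their own ratios and measurement windows.

```python
def traffic_ratio(sent_bytes: int, received_bytes: int) -> float:
    """Return the heavier direction divided by the lighter one (always >= 1.0)."""
    if sent_bytes <= 0 or received_bytes <= 0:
        raise ValueError("both directions must carry traffic")
    heavy = max(sent_bytes, received_bytes)
    light = min(sent_bytes, received_bytes)
    return heavy / light


def qualifies_for_settlement_free(sent_bytes: int, received_bytes: int,
                                  max_ratio: float = 2.0) -> bool:
    """Hypothetical policy check: max_ratio is an assumed threshold, not a standard."""
    return traffic_ratio(sent_bytes, received_bytes) <= max_ratio


if __name__ == "__main__":
    # Roughly balanced exchange versus a heavily one-sided one.
    print(qualifies_for_settlement_free(100, 150))
    print(qualifies_for_settlement_free(100, 900))
```

A network that fails a check like this is, in effect, being asked to carry the other party's traffic, which is the service transit providers charge for.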

This misleading description of the organization of the Internet is later used to justify settlement-free interconnection to ISP networks by the large edge service networks operated by Google, Facebook, Netflix et al.

EFF Provides Misleading Discussion of Packet Switching and Congestion

The Internet is based on a transmission technology known as packet switching, which the engineers describe by referencing an out-of-print law school textbook, Digital Crossroads: American Telecommunications Policy in the Internet Age, by Jon Nuechterlein and Phil Weiser. Nuechterlein and Weiser are both very bright lawyers – Jon is a partner at Sidley Austin and Phil runs the Silicon Flatirons Center at the University of Colorado Law School in Boulder – but this is probably the first time in history that a group of engineers has turned to a pair of lawyers to explain a fundamental technology for them. (This reference leads me to believe the letter was written by EFF staffers rather than by actual engineers.)

The description of packet switching omits three key facts:

- Packet routers are stateless devices that route each packet without regard for other packets;

- Packet switching is a fundamentally different transmission technology than circuit switching, the method used by the telephone network; and

- Packet switching increases the bandwidth ceiling available to applications at the expense of Quality of Service.
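The first of these facts is easy to show in code. The sketch below is a toy longest-prefix-match lookup, with a made-up routing table and hop names. The forwarding decision depends only on the destination address and the table, never on any packet that came before.

```python
import ipaddress

# Hypothetical routing table: (destination prefix, next-hop label).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "upstream"),
    (ipaddress.ip_network("10.0.0.0/8"), "core"),
    (ipaddress.ip_network("10.1.0.0/16"), "branch"),
]


def next_hop(destination: str) -> str:
    """Pick the most specific matching route for one packet.

    No per-flow or per-connection state is read or written here.
    """
    addr = ipaddress.ip_address(destination)
    matches = [(net.prefixlen, hop) for net, hop in ROUTES if addr in net]
    # The longest prefix wins; the default route guarantees at least one match.
    return max(matches, key=lambda match: match[0])[1]


if __name__ == "__main__":
    for dest in ("10.1.2.3", "10.2.0.1", "8.8.8.8"):
        print(dest, "->", next_hop(dest))
```

Calling `next_hop` twice with the same address always gives the same answer, which is what "stateless" means in this context.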

The omitted facts would have been helpful in explaining congestion, an issue that the letter combines with its description of packet switching in a way that makes it appear arbitrary. Packet switched networks are provisioned statistically, hence any network that is not massively over-provisioned will undergo periodic congestion. Therefore, any well-designed packet network must include the capability to manage congestion.
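Statistical provisioning can be made concrete with a simple model. Assume, purely for illustration, that each subscriber is active independently with some fixed probability and that the link can serve a fixed number of active subscribers at once. Congestion is then the tail of a binomial distribution. The function name and the numbers are my own, not anything from the letter.

```python
from math import comb


def congestion_probability(users: int, p_active: float, capacity: int) -> float:
    """Probability that more than `capacity` of `users` are active at once.

    Assumes independent users, each active with probability p_active
    (a textbook simplification of real traffic).
    """
    p_within_capacity = sum(
        comb(users, k) * p_active**k * (1.0 - p_active) ** (users - k)
        for k in range(capacity + 1)
    )
    return 1.0 - p_within_capacity


if __name__ == "__main__":
    # 100 subscribers, each active 10% of the time, at several capacity levels.
    for capacity in (10, 15, 20, 100):
        print(capacity, congestion_probability(100, 0.10, capacity))
```

Under this model the probability only reaches zero when capacity equals the number of users, which is the "massively over-provisioned" case.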

Internet Congestion Management is a Troubled Topic

In the case of the Internet, congestion management is a somewhat troubled topic. It was originally addressed by Vint Cerf through a mechanism called Source Quench, discarded because it didn’t work. Quench was replaced by the Jacobson Algorithm (a software patch consisting of two lines of code), which was at best a partial solution.
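For readers who have not seen it, the general shape of TCP congestion control in the Jacobson tradition looks roughly like this. This is a simplified textbook sketch in units of segments (slow start, additive increase, and a Tahoe-style reset on loss), not the original patch, and the function names are mine.

```python
def on_ack(cwnd: float, ssthresh: float) -> float:
    """Grow the congestion window when an acknowledgement arrives."""
    if cwnd < ssthresh:
        return cwnd + 1.0          # slow start: roughly doubles per round trip
    return cwnd + 1.0 / cwnd       # congestion avoidance: about +1 per round trip


def on_loss(cwnd: float) -> tuple[float, float]:
    """React to a detected loss: return (new_cwnd, new_ssthresh)."""
    new_ssthresh = max(cwnd / 2.0, 2.0)  # remember half the window that failed
    return 1.0, new_ssthresh             # restart from one segment


if __name__ == "__main__":
    cwnd, ssthresh = 1.0, 8.0
    for _ in range(12):
        cwnd = on_ack(cwnd, ssthresh)
    print("after 12 acks:", round(cwnd, 3))
    cwnd, ssthresh = on_loss(cwnd)
    print("after loss:", cwnd, ssthresh)
```

The sender infers congestion only from loss, which is one reason the approach is a partial solution: the network has to drop packets before anyone slows down.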

Jacobson’s patch was supplemented by Random Early Detection, which didn’t solve the problem entirely either. The current status quo is Controlled Delay Active Queue Management (CoDel), a somewhat less than ideal system that seeks to manage transmission queues more accurately.

The letter claims “the sole job [of routers] is to send packets one step closer to their destination.” This is certainly their main job, but not their only one. Routers must implement the Internet Control Message Protocol (ICMP) in order to support advanced routing, network diagnostics, and troubleshooting. So routers also have the jobs of helping administrators locate problems and optimizing traffic streams.

Internet tools such as traceroute and ping depend on routers for packet delay measurement; networks also rely on ICMP to verify routing tables with “host/network not reachable” error messages and to send ICMP “Redirect” messages advising them of better routes for packets with specific Type of Service requirements. The Redirect message tells the sending computer or router to send the packet to a different router, for example. So the sole job of routers is to implement all of the specifications for Internet Protocol routers.
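To give a sense of what ping asks of the network, here is a minimal sketch that builds an ICMP Echo Request (type 8, code 0) with the standard Internet checksum. It only constructs the bytes; sending them would need a raw socket and usually administrator rights. The helper names are my own.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement checksum used by ICMP and IP headers."""
    if len(data) % 2:
        data += b"\x00"                      # pad to a whole number of 16-bit words
    total = sum(word for (word,) in struct.iter_unpack("!H", data))
    while total >> 16:                       # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo_request(identifier: int, sequence: int, payload: bytes = b"") -> bytes:
    """Return the bytes of an ICMP Echo Request message."""
    # The checksum field is zero while the checksum itself is computed.
    header = struct.pack("!BBHHH", 8, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, identifier, sequence) + payload


if __name__ == "__main__":
    packet = build_echo_request(0x1234, 1, b"ping")
    print(packet.hex())
    # A receiver verifies the message by checksumming it again and expecting zero.
    print(internet_checksum(packet))
```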

Two router specifications that go unmentioned are Integrated Services (IntServ) and Differentiated Services (DiffServ). The letter also makes no mention of Source Routing, a system that allows applications to dictate their own paths through the Internet. While Source Routing is rare, IntServ is used by LTE for voice and DiffServ is used within networks for control of local traffic. Both are likely to play larger roles in the future than they’ve played in the past.

EFF Makes False Claims about Best Efforts

The letter claims all Internet traffic is sent at the same baseline level of reliability and quality: “Thus the Internet is a “best-effort” service: devices make their best effort to deliver packets, but do not guarantee that they will succeed.”

The Internet Protocol is actually more of a minimum effort system that lacks the ability to perform retransmission in the event of errors and congestion, but the Internet as a whole provides applications with very reliable delivery, at least a 99.999% guarantee. But it does this because all the networks cooperate with each other, and because software and services cooperate with networks. Hooray for TCP!

The term “best efforts” needs a better definition because it means two things: 1) The lack of a delivery confirmation message at the IP layer; and 2) the expectation that quality will vary wildly. The first is a design feature in IP, but the second is not. Variable quality is a choice made by network operators that is actually a bit short of universal.

EFF’s Views on Layering are Mistaken

After discussing packet switching in a patently oversimplified way, the letter goes utterly off the rails in attempting to connect the success of the Internet to design principles that don’t actually exist. It sets up this discussion by offering a common misunderstanding of network layering: “the network stack is a way of abstracting the design of software needed for Internet communication into multiple layers, where each layer is responsible for certain functions…”

Many textbooks offer this description, but network architects such as John Day dispute it. In Day’s analysis, each layer performs the same function as all other layers, but over a different scope. That function is data transfer, and the scopes differ by distance. A datalink layer protocol (operating at layer two of the OSI Reference Model) transfers data from one point to one or more other points on a single network. Wi-Fi and Ethernet networks within a home or office are layer two networks that do this job incredibly well; they’re joined into a single network by a network switch with both Ethernet ports and Wi-Fi antennas.

A layer three network – using IP – transfers data over a larger scope, such as from one point on the Internet to one or more other points on the Internet. This is the same job, but it goes farther and crosses more boundaries in the process. So layering is more about scope than function.

There is a second misunderstanding with respect to cross-layer interactions. The EFF letter says the code implementing layers needs to be: “flexible enough to allow for different implementations and widely-varying uses cases (since each layer can tell the layer below it to carry any type of data).” This description reveals some confusion about the ways that layers interact with each other in both design and practice. Standards bodies typically specify layers in terms of services offered by lower to higher layers and signals provided by lower to higher layers.

For example, a datalink layer may offer both urgent and relaxed data transfer services to the network layer. If the design of the protocol stack is uniform, this service option can percolate all the way to the application. So applications that need very low latency transmission are free to select it – probably for a higher price or a limit on data volume over a period of time – and applications that are indifferent to urgency but more interested in price are free to make an alternate choice. The actual design of the Internet makes this sort of choice possible.

EFF Misconstrues the End-to-End Argument

From the faulty description of layers, the EFF letter jumps right into a defective explanation of the end-to-end argument about system design, even going so far as to call it a “principle”:

In order for a network to be general purpose, the nodes that make up the interior of the network should not assume that end points will have a specific goal when using the network or that they will use specific protocols; instead, application-specific features should only reside in the devices that connect to the network at its edge.

There’s a difference between “general purpose” technologies and “single purpose” ones that the letter doesn’t seem to grasp. The designs of the datalink layer, network layer, and transport layer protocols don’t assume that applications have the same needs from the network. Hence, the Internet design reflects a multi-purpose system designed to accommodate the widest possible set of use cases. This is why there is both a Transmission Control Protocol and a User Datagram Protocol at layer four. The letter describes them accurately as a mode of transmission that values reliability and correctness (TCP) and one that values low latency (UDP).

For these two transmission modes to work correctly, lower layers – IP and the datalink – need to have the ability to tailor their services to different needs. We can certainly do this at the datalink layer, which the letter fails to describe. Datalink services in Wi-Fi and Ethernet offer the options for urgent and relaxed delivery. These modes are accommodated by the Wi-Fi 802.11e Quality of Service standards and Ethernet 802.1p options.

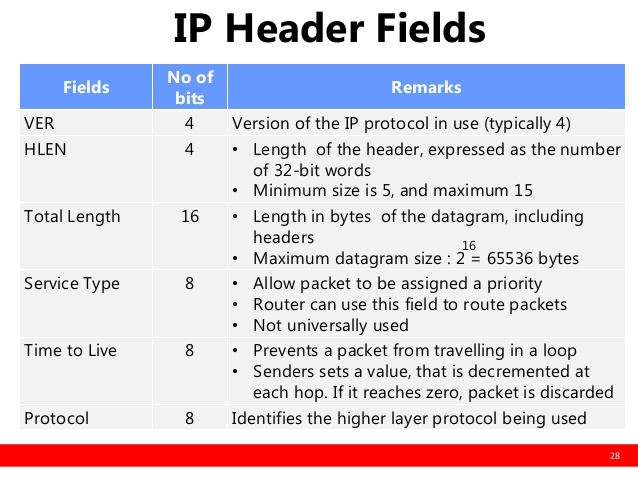

The IP layer’s ability to request Quality of Service from the datalink layer is implemented by the Type of Service field in the IP datagram header, and also by the IntServ and DiffServ standards. ToS is also a property of routes, and is supported by ICMP, a required feature of IP routers. So the Internet is a multi-purpose rather than a single purpose network.
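Applications can reach this field today. The sketch below shows how a DiffServ code point maps onto the old Type of Service byte and how a program might request it on a socket. The helper names are mine, `IP_TOS` is the option Linux and similar systems expose, other platforms may ignore or reject it, and nothing obliges any network along the path to honor the marking.

```python
import socket


def tos_byte(dscp: int, ecn: int = 0) -> int:
    """Pack a 6-bit DSCP value and 2-bit ECN value into the 8-bit ToS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits")
    if not 0 <= ecn <= 3:
        raise ValueError("ECN must fit in 2 bits")
    return (dscp << 2) | ecn


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Ask the local IP stack to mark outgoing packets (platform-dependent)."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos_byte(dscp))


if __name__ == "__main__":
    EXPEDITED_FORWARDING = 46  # DSCP commonly used for voice traffic
    print(hex(tos_byte(EXPEDITED_FORWARDING)))
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        try:
            mark_socket(sock, EXPEDITED_FORWARDING)
        except OSError as exc:
            print("marking not permitted on this platform:", exc)
```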

You can still stress your belief in the end-to-end “principle” without pretending that the Internet lacks the ability to tailor its internal service to specific classes of applications. All the end-to-end argument really says is that it’s easier to develop applications that don’t require new features inside a network. It doesn’t say you have to pretend one size fits all.

In fact, the classic paper on end-to-end arguments (cited by the EFF letter) acknowledges the role of intelligence inside the network for performance reasons:

When doing so, it becomes apparent that there is a list of functions each of which might be implemented in any of several ways: by the communication subsystem, by its client, as a joint venture, or perhaps redundantly, each doing its own version. In reasoning about this choice, the requirements of the application provide the basis for a class of arguments, which go as follows: The function in question can completely and correctly be implemented only with the knowledge and help of the application standing at the end points of the communication system. Therefore, providing that questioned function as a feature of the communication system itself is not possible. (Sometimes an incomplete version of the function provided by the communication system may be useful as a performance enhancement.) We call this line of reasoning against low-level function implementation the “end-to-end argument.”

So we don’t need to pretend that a perfectly dumb network is either desirable or even possible in all application scenarios.

What Makes a Network “Open”?

Let’s not forget that the goal of the FCC’s three rulemakings on the Open Internet is to provide both users and application developers/service providers with easy access to all of the Internet’s capabilities. We can do that without putting a false theory of the Internet’s feature set in place of a true one. The EFF and its friends among Internet theorists offer us a defective view of the Internet in terms of packet switching, congestion, router behavior, layering, and the end-to-end argument in order to support a particular legal/regulatory argument, Title II classification for Internet Service.

Title II may very well be the best way to regulate the Internet, but I’m suspicious of any argument for it that does violence to the nature of the Internet as the EFF letter has clearly done. EFF’s omissions are troubling, as there’s a very straightforward argument for classifying datalink service under Title II even though Internet Service fits better in Title I.

I’ll have more to say about this issue before I file reply comments pointing to these and other errors in the EFF letter.

UPDATE: For more on this letter, see the next post.