What the FCC’s Broadband Tests Really Measure

The data in the “Measuring Broadband America” report released by the FCC on June 18th show that Americans get the broadband speeds they pay for. The report plainly says (page 14):

This Report finds that ISPs now provide 101 percent of advertised speeds.

This couldn’t be any clearer. The FCC even places this finding in context by contrasting with the previous report:

The February 2013 Report showed that the ISPs included in the Report were, on average, delivering 97 percent of advertised download speeds during the peak usage hours.

Not only do the ISPs deliver what they advertise, they’re improving. Those of us who like using the Internet at high speeds have legitimate cause for celebration, and the bloggers and journalists who have complained about gaps between advertised and actual speeds in the past should take pride in helping to remedy the problem. The initial FCC Measuring Broadband America report in 2011 (page 17) didn’t summarize across technologies, but FCC market share data indicate it would have reported that Americans got 91% of advertised speeds if it had.

So the problem the FCC discovered in 2011 has been solved and we can move on, right?

Not so fast. While most of us are happy that the gap between advertised and actual speeds has disappeared, bloggers don’t pay the bills by reporting that everything is fine. There has to be a problem somewhere, or all that shouting about net neutrality and nationalized broadband pipes has to stop. Lo and behold, if we dig deep enough in the FCC’s data – but not too deep – we can indeed find a problem with DSL.

While cable, fiber, and satellite all deliver more than they promise, DSL sometimes does and sometimes doesn’t. It should come as no surprise that the DSL performance gap dominated most of the blog coverage of the FCC’s report.

Ars Technica titled its story “Many Verizon DSL and AT&T customers not getting speeds they pay for”; The Verge declared: “DSL subscribers are more likely to be cheated on internet speeds, FCC says”; Slate cautioned: “An FCC Report Says U.S. Internet Should Be Awesome and Reliable, but Don’t Get Excited”; C|Net followed the Verge meme with “DSL subscribers more likely to get cheated on broadband, says FCC.” By contrast, Fierce Telecom was mild with “FCC: Fiber and cable broadband providers improve speeds, but DSL needs improvement.”

The most worthwhile stories were GigaOm’s “FCC report shows ISPs are faster than ever, but congestion is a problem” and Engadget’s “FCC report checks if your internet speed lives up to the ads, and why that’s not fast enough” because they didn’t try to manufacture controversy as the others did.

The situation with DSL highlights a major problem with the people who write about technology in the popular media, even the tech media: They don’t understand technology very well. If they did, they would appreciate the fact that there are two very different broadband technologies in the world today that go by the general label “DSL”.

Old-school, legacy DSL is properly called “ADSL” and the new form of DSL is known as “VDSL” (or VDSL2+, or Vectored.) There’s an even newer form of DSL called G.Fast.

ADSL goes back to the 1990s and generally provides 6 Mbps or less, while VDSL is generally 15 Mbps or higher. AT&T offers VDSL at speeds up to 45 Mbps, and CenturyLink offers speeds up to 40. The Vectoring option doubles these speeds, and G.Fast approaches Gigabit speeds. Pretty much all DSL upgrades these days are to Vectored.

So it’s not very sensible to lump together systems that span the range from 256 Kbps in some rural areas with ADSL to hundreds of megabits with the leading edge versions of G.Fast. But that’s exactly what the FCC does, so we can’t really be too hard on the bloggers who followed their lead; apart from accepting the government’s word verbatim, that is, which they rarely do when it doesn’t suit their purposes.

ADSL is always going to fall short of advertising claims that it can provide capacities “up to” a certain peak. That’s because speed is a function of distance with DSL, and ADSL lines can be very long (tens of thousands of feet.) DSL users who happen to be close to their ISPs’ switches will get higher speeds than advertised, and those who are farther away will indeed get lower speeds. VDSL runs on shorter lengths of copper wire and longer fiber backhaul; the ratio of fiber to copper in VDSL systems has led some regulators to classify VDSL as fiber even though the final half mile is copper. In fact, many of the so-called fiber networks in densely populated Asian cities are fiber to the basement and copper up to the apartment, even those running at 100 Mbps and faster. If the copper wire is short enough, very high speeds are possible just as they are in gigabit Ethernet over copper wire. The bottom line is that VDSL doesn’t degrade with distance the same way that ADSL does because VDSL wires don’t have to go very far.

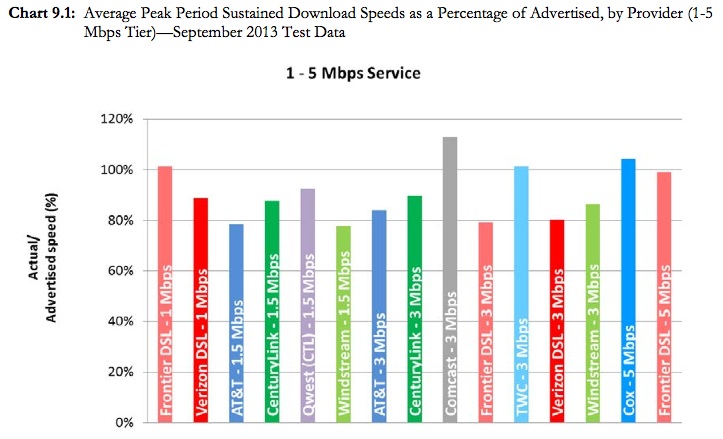

We can look closely at the FCC’s data and see how well VDSL does in comparison with ADSL because they break out performance by speed tier. The DSL in the 1 – 5 Mbps tier is going to be ADSL, and it falls below the promise. The DSL providers are Frontier, Verizon, AT&T, CenturyLink, Qwest (now part of CenturyLink) and Windstream. Only Frontier exceeds 100%.

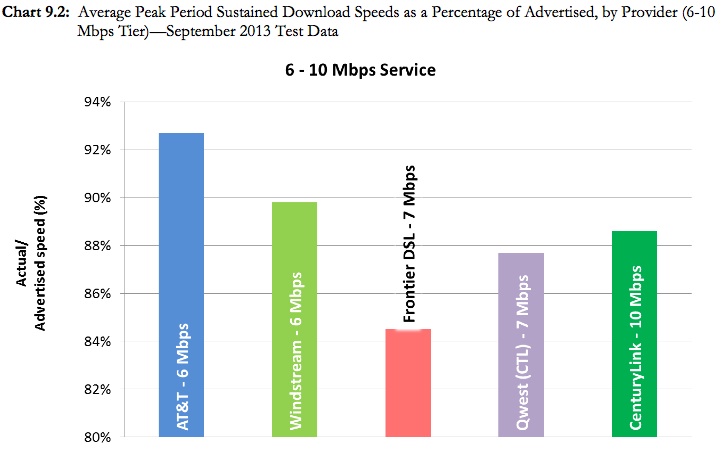

It’s even worse at the 6 – 10 Mbps tier, where all DSL providers fall short:

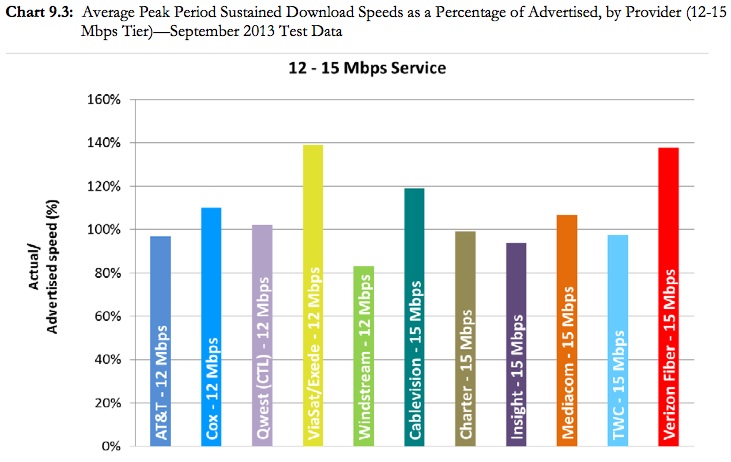

The FCC reports on a 12 – 15 Mbps tier, but the underlying technologies are ambiguous, as these speeds can be reached by ADSL2 as well as by VDSL. They show AT&T and Windstream falling short but Qwest exceeding 100%. It’s a reasonably good guess that AT&T and Windstream are using ADSL in this tier but Qwest/CenturyLink uses VDSL.

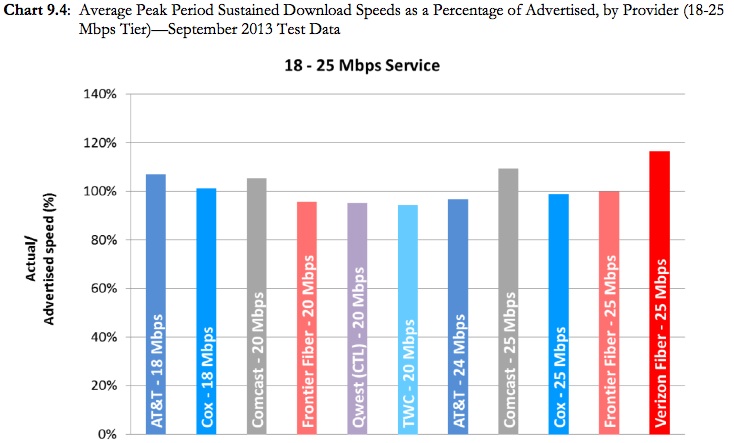

At the 18 – 25 Mbps tier, ADSL is gone and VDSL competes with cable and fiber. AT&T exceeds 100% for its 18 Mbps service and falls a little short for the 25 Mbps plan, as does Qwest for its mid-range VDSL plan at 20 Mbps. But the VDSL offerings are as close to 100% as most of the cable and fiber offerings in this tier. Verizon FiOS, a full-fiber service, is the only real bracket-buster here, although Comcast exceeds 100% for both its offerings. Frontier’s 20 Mbps fiber service is comparable to Qwest’s VDSL and TWC’s cable.

The most competitive tier ranges from 12 – 25 Mbps, the actual sweet spot for most Internet applications.

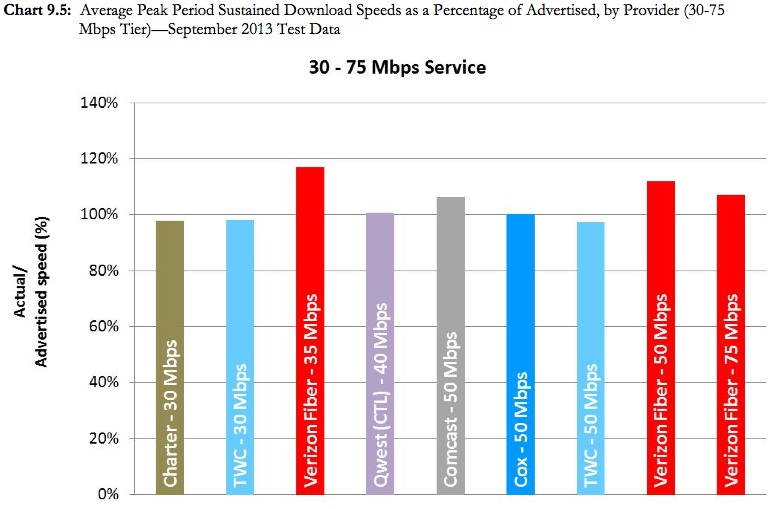

In the 30 – 75 Mbps tier, the FCC only measured Qwest despite the fact that AT&T offers services in this range. Qwest exceeded 100% at this tier as well. It’s worth noting that the FCC refused to measure speeds above 75 Mbps, even though both fiber and cable offer 100 Mbps services in most places. That is an odd choice that affects how the U.S. stands compared to European countries where cable modem service starts at 50 Mbps and goes up to 150, such as the UK.

The FCC took limited measurements above 30 Mbps, excluding AT&T and the cable and fiber offerings above 75 Mbps

One takeaway from the measured speeds of DSL is that the FCC should distinguish ADSL from VDSL and report accordingly. Another is that it should measure AT&T’s VDSL services, not just CenturyLink/Qwest services. And it should measure higher speed fiber and cable services to put us on a level playing field with Europe.

Market shares are shifting very strongly from ADSL to VDSL. According to Leichtman Research, VDSL and fiber were the fastest growing segment of the wired broadband marketplace in 2013:

AT&T and Verizon added 3.3 million fiber subscribers (via U-verse and FiOS) in 2013, while having a net loss of 3.05 million DSL subscribers. U-verse and FiOS broadband subscribers now account for 47% of Telco broadband subscribers — up from 29% at the end of 2011.

Leichtman lumps U-verse together with fiber, but it’s actually a VDSL2+ technology, just like CenturyLink’s 20 – 40 Mbps VDSL.

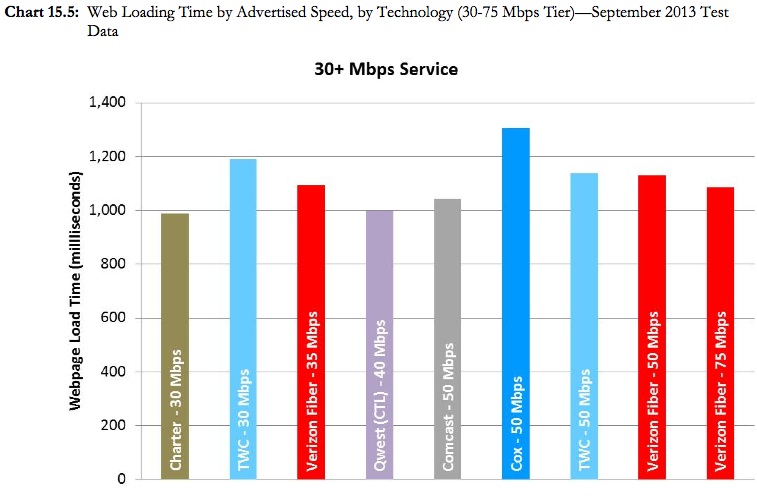

The FCC report also engages in a rather bizarre exercise of measuring web page loading speeds and attributing them to the ISPs. In a typical example, they found that web page loading takes more than a second over connections faster than 30 Mbps; they also found that their web pages loaded fastest on Qwest/CenturyLink’s 40 Mbps VDSL and Charter’s 30 Mbps cable. Both of these ISPs must be close to major Internet Exchanges.

Given that the average web page is 1.8 MB in size, the actual transfer time at 50 Mbps is less than three tenths of a second (1.8 MB is 14.4 megabits, and 14.4 / 50 is about 0.29 seconds); that means the web server itself has more than twice as much influence over this measurement as the network does.

So the FCC’s web loading time test actually measures more of the web server and its connections than it does the ISP’s network. This is nice data for researchers to have, but it tells us very little about ISP networks. For this to be a meaningful measurement, it also needs to account for CDNs and web site capacity.
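For readers who want to check the arithmetic, here is a minimal sketch of the transfer-time estimate for the 1.8 MB page. It takes the page size and the 50 Mbps link speed from the figures above and assumes a measured load time of exactly one second, which is a lower bound since the report says "more than a second". The function and variable names are mine, and the calculation ignores latency and protocol overhead, so treat it as a back-of-the-envelope check rather than a model of the FCC's test.

```python
# Back-of-the-envelope check of the page-load argument.
# Assumptions: 1 MB = 8 megabits, no latency or protocol overhead,
# and a measured load time of 1.0 s (the report says "more than a second").

PAGE_SIZE_MB = 1.8        # average web page size, megabytes (from the text)
LINK_SPEED_MBPS = 50.0    # link speed, megabits per second (from the text)
MEASURED_LOAD_S = 1.0     # assumed lower bound on the measured load time


def transfer_time_seconds(size_megabytes: float, speed_mbps: float) -> float:
    """Ideal time to move the payload across the access link."""
    return size_megabytes * 8 / speed_mbps


transfer_s = transfer_time_seconds(PAGE_SIZE_MB, LINK_SPEED_MBPS)
other_s = MEASURED_LOAD_S - transfer_s   # server, DNS, interconnect, rendering
ratio = other_s / transfer_s

print(f"network transfer: {transfer_s:.3f} s")
print(f"everything else:  {other_s:.3f} s")
print(f"ratio:            {ratio:.2f}")
```

Under these assumptions the link accounts for about 0.29 seconds and everything else for at least 0.71 seconds, a ratio of roughly 2.5 to 1. A longer measured load time only pushes that ratio higher.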

It would be good for the FCC to clearly separate ADSL from VDSL and for it to measure speeds up to 1 Gbps. Once it’s done with that, it’s fine for it to try to get a handle on web servers and interconnections, but it appears that the FCC has a long way to go before it can clearly communicate what it’s measuring.

It’s also the case that our tech bloggers are well behind the FCC in understanding broadband, but we can explain that in terms of the advocacy journalism that’s polarizing our nation. It’s good to step outside the echo chamber and understand what the data really say.

Why should we trust the FCC?

Why should we trust anybody? The FCC pays a company called SamKnows to measure broadband speeds, the same firm that governments around the world use (European Union, UK, Singapore, Canada, etc.) So the raw data is comparable to controlled tests in other parts of the world and much more representative of actual network capacities than the Speedtest.net data. It’s not as good as Akamai under real world conditions, but we have to start somewhere.