That Pesky Spectrum Crunch

With the election nearing, we’ve entered the busy season for fact-checking. The convention speeches are examined line by line, and every claim that’s plainly false, possibly misleading, imprecise, or simply a matter of opinion will need to be explained, clarified, or retracted. This is a happy time for bloggers, since fact-checking is one of the things we bloggers do best. As Ken Layne famously said about the Internet’s role in relation to the media and politics, “we can fact check your ass!” So let’s fact check an article on spectrum licensing, “The last greenfield,” by The Economist’s technology writer Nick Valéry on the magazine’s Babbage blog.

The New Beachfront

The article begins with the recent sale of unused AWS-1 spectrum licenses from cable companies to Verizon. There’s a minor error in the description of the AWS spectrum:

The AWS band uses frequencies in two segments, each 45 megahertz wide. One (1,710 to 1,755 megahertz) is employed by mobile phones to talk to the nearest cell tower. The other (2,110 to 2,155 megahertz) is used by phones to listen to signals from the tower. These frequencies are prized because they are ideal for densely populated areas.

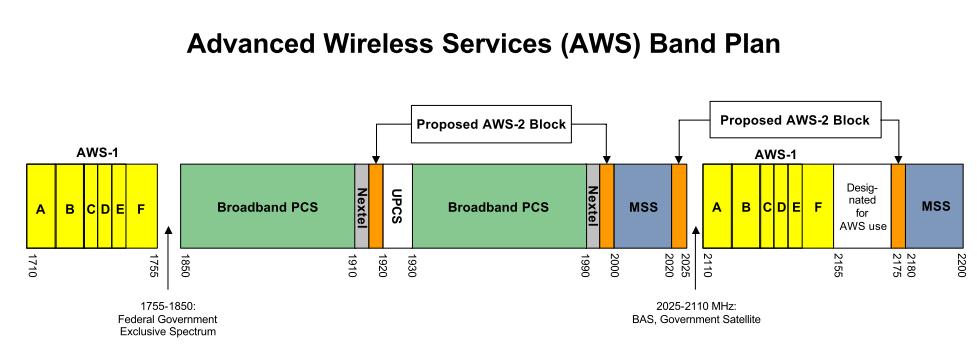

AWS, of course, consists of three blocks, not just one. The blocks are called AWS-1, AWS-2, and AWS-3. Here’s a somewhat dated FCC band plan for AWS:

A more recent FCC inquiry concerns AWS-4, so we’re not done with licensing AWS spectrum yet. Hence the “last greenfield” claim isn’t really a fact. AWS-1 is not even “the most recent greenfield” because the DTV transition created new greenfield spectrum. This is what happens every time a legacy system is taken off-line or improved: new greenfield spectrum is created because spectrum has no memory.

The more interesting claim is Valéry’s assertion that AWS is highly prized in densely populated areas. Haven’t we heard that the 700 MHz UHF spectrum freed up by the DTV transition is highly prized “beachfront spectrum” that covers large distances, penetrates walls, and leaps tall buildings with a single bound? We’ve even seen this description at The Economist, in Nick Valéry’s blog posts. And now we’re hearing just the opposite because the beachfront fantasy was never correct.

700 MHz is better at penetrating walls at a given power level than AWS, but it’s not an either/or proposition: both penetrate walls with significant signal loss. That’s the point of outdoor television antennas sitting on rooftops: they capture the signal before the walls degrade it, and feed it to the TV set by wire. So it’s not so much a matter of one or the other being “ideal,” it’s about what’s better. The logic that makes 2000 MHz better for densely populated areas than 700 MHz suggests that 3000 MHz would be better still. In fact, holders of spectrum at and above AWS levels, Sprint and T-Mobile specifically, want additional spectrum in the 700 MHz range. So let’s not create a new “beachfront” myth.
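To put a rough number on “better at a given power level,” here is a minimal sketch using the textbook free-space path loss formula. It’s an idealization: it ignores walls, terrain, and antenna gains entirely, and the 1 km distance and the 2,100 MHz stand-in for the AWS downlink are illustrative assumptions, not figures from Valéry’s article.

```python
import math


def free_space_path_loss_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz.

    Standard form: 20*log10(d) + 20*log10(f) + 32.44.
    Free space only; no walls, clutter, or antenna gain are modeled.
    """
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


# Illustrative assumptions: a 1 km path, 700 MHz UHF vs. a 2,100 MHz AWS downlink.
loss_700 = free_space_path_loss_db(1.0, 700.0)
loss_aws = free_space_path_loss_db(1.0, 2100.0)
extra_loss_db = loss_aws - loss_700

print(f"700 MHz loss over 1 km:   {loss_700:.1f} dB")
print(f"2,100 MHz loss over 1 km: {loss_aws:.1f} dB")
print(f"Extra loss at 2,100 MHz:  {extra_loss_db:.1f} dB")
```

In free space the higher band gives up about 9.5 dB to 700 MHz at any distance, since the gap depends only on the ratio of the two frequencies. That’s a real advantage, but it’s a matter of degree, not a different kind of spectrum.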

The Old Beachfront

Valéry clearly doesn’t understand how the 700 MHz UHF frequencies relate to the AWS-1 spectrum. In his analysis, UHF is now rural and AWS is urban:

Unlike waves in the 700 megahertz band, which travel long distances and penetrate all the nooks and crannies within buildings (that is why they were chosen for television in the first place), higher-frequency AWS signals have a much shorter range. But they can carry far more data or simultaneous conversations than UHF. That allows carriers to provide services to a greater number of customers within a given area. Having both UHF and AWS spectrum means Verizon is able to offer services competitively in rural and urban areas alike.

Again, not quite correct. Cellular networks are designed according to a hierarchy of coverage, even in urban areas. The first units of deployment are macrocells that cover a lot of distance and support 1,000 users, and the second units are smaller cells that serve fewer, more data-intensive users. It’s reasonable to use 700 MHz for macrocells and AWS for small cells, but any reasonable urban network can use both.

Panic Buying

The spectrum crunch is coming, but Valéry doesn’t seem to appreciate the efforts that all the carriers are making to deal with it. He attributes panic spectrum buying to the FCC’s projections of broadband usage growth:

What seems clear, though, is that the carriers’ current land grab is a panic response to projections made by the FCC for future traffic growth…In its National Broadcast Plan, released a couple of years ago, the FCC reckoned it would need to auction off at least 500 megahertz of additional spectrum by 2020 to meet this surging demand.

I doubt that carriers leave network planning to the FCC. They make their own projections, but both the FCC and the carriers rely on the visible trends. It was the National Broadband Plan, by the way.

Rewriting TV History

For some time now, Valéry has been touting the idea that analog TV sets could only receive broadcasts on odd-numbered channels due to interference concerns by the FCC:

To ensure that a broadcast could be received clearly, it was allocated a channel between two vacant ones. That is why the tuning dial on old VHF television sets had (apart from channel two) only the odd channels from three to 13. When UHF broadcasting came along, empty guard bands were similarly added to each channel, to prevent signals on adjacent frequencies causing interference (see “Bigger than Wi-Fi”, September 23rd 2010).

He cites himself as a source for this claim, conveniently. Here’s a picture of the channel selector on a 1976 Panasonic TV set for sale on eBay:

So there’s another factual error. The allocation practice in the analog days was to skip channels in each market, but to use the skipped channels in adjacent markets. There was no channel six in San Francisco, but there was one in Sacramento. It would be silly to go about channel allocation in any other way.

Fear and Loathing Had Nothing on This

From this point on, the article gets really, really weird:

The whistling noises heard on a radio and the echo of adjacent stations are not the result of some phenomenon of physics. They are caused simply by the failure of the receiving equipment to process the signal properly. Try moving the antenna, or replacing it with a better one, to prove that it is the processing, not some law of nature, that affects reception.

Um, no. Try using an AM radio in a building where wireless LANs are being developed and you’ll be very unhappy regardless of how your antenna is positioned. Analog services such as AM radio are so similar to electromagnetic noise that it’s not possible to build a receiver that can effectively distinguish program from noise at any price. In order for a radio signal to be distinguished from the background noise that exists everywhere, the signal needs to be transmitted at a higher power level or it needs to be coded in such a way that the information it carries can be distinguished from the noise. We get to that by taking AM radio off the air and replacing it with a digital service that requires all new radios. That’s what we did with TV, but it’s not worthwhile for AM radio just yet, although it will be some day:

Better radios are the answer. Modern agile transmitters and receivers avoid interference by hopping to different frequencies if they encounter another signal. Such frequency-hopping was first used during the second world war. So separating different broadcasters—whether they happen to be mobile phones or television stations—by putting them on different frequency bands is not actually necessary.

Better radios will certainly allow us to use spectrum more effectively, but frequency hopping isn’t the answer and it’s not used by modern receivers. Wi-Fi tried it in its first version, the one that supported speeds of 1 to 2 Mbps. IEEE 802.11b dropped frequency hopping in favor of the “direct sequence spread spectrum” technique that provided speeds as high as 11 Mbps, and replaced that with OFDM in 802.11a and 802.11g running at 54 Mbps. The current Wi-Fi systems that go up to a gigabit per second have stuck with OFDM, increasing speed by using the channel more efficiently thanks to protocol tweaks and by using more spectrum. There’s no free lunch to be had, certainly not by frequency hopping schemes that simply create more opportunities for users to collide with each other. This is spectrum history 101.
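The collision point can be illustrated with a toy model. The sketch below assumes uncoordinated radios that each pick one of a fixed set of channels uniformly at random for every dwell period. The 79-channel figure is an assumption chosen for illustration, and real hopping systems use defined hop sequences rather than pure random choice.

```python
def collision_probability(num_radios: int, num_channels: int) -> float:
    """Chance that one radio shares its channel with at least one other
    radio during a single dwell, assuming independent uniform hopping."""
    if num_radios < 1 or num_channels < 1:
        raise ValueError("need at least one radio and one channel")
    others = num_radios - 1
    # Each other radio misses our channel with probability (1 - 1/C).
    return 1.0 - (1.0 - 1.0 / num_channels) ** others


# Hypothetical band with 79 hop channels and a growing number of radios.
for n in (2, 10, 50, 100):
    p = collision_probability(n, 79)
    print(f"{n:>3} radios on 79 channels: {p:.1%} chance of a collision per dwell")
```

Under these assumptions, hopping only spreads the collisions around. It doesn’t make them go away as the band fills up.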

Conspiracy theories are typical in this sort of analysis, and Valéry doesn’t let us down:

A study done last year by Citigroup, a financial conglomerate, reckoned that only 192 megahertz out of the 538 megahertz of licensed spectrum had actually been deployed. And 90% of that was being used by “legacy” 2G, 3G and 3.5G services. If this spectrum were repurposed, the carriers would have more than enough to build their 4G networks.

Unfortunately, there is little incentive to do so. For a start, it would mean investing heavily in advanced technologies like VoLTE (Voice over LTE) as well as new frequency-hopping transmitters, ultra-wideband equipment, software-defined radios and intelligent antennas. If they did, the voice traffic that is normally carried on legacy networks could travel along with data on LTE. And more subscribers in a given area could be served using the same set of frequencies.

That’s an awful lot of confusion.
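For reference, the arithmetic behind the Citigroup figures Valéry quotes can be sketched in a few lines. The only inputs are the numbers in the passage above. The variable names are mine, and reading “90% of that” as 90% of the deployed 192 MHz is an assumption about what the study meant.

```python
# Figures as quoted from the Citigroup study in Valéry's article.
licensed_mhz = 538.0
deployed_mhz = 192.0
legacy_share = 0.90  # assumed to apply to the deployed spectrum only

undeployed_mhz = licensed_mhz - deployed_mhz
legacy_mhz = deployed_mhz * legacy_share
non_legacy_mhz = deployed_mhz - legacy_mhz
deployed_fraction = deployed_mhz / licensed_mhz

print(f"Deployed share of licensed spectrum: {deployed_fraction:.1%}")
print(f"Licensed but not yet deployed:       {undeployed_mhz:.0f} MHz")
print(f"Deployed on legacy 2G/3G/3.5G:       {legacy_mhz:.1f} MHz")
print(f"Deployed on everything else:         {non_legacy_mhz:.1f} MHz")
```

So the claim rests on roughly 346 MHz of licensed-but-idle spectrum plus about 173 MHz tied up in legacy service, which is where the points below come in.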

In the first place, it can take years to put spectrum to use after rights have been purchased at auction because prior rights are geographic and subject to a number of subdivisions. It’s necessary to clear all or most of the legacy users before the new rights holder can deploy.

Secondly, auctions are so infrequent that would-be spectrum users have to purchase not only the rights they need right away in each auction, they also need to purchase all the rights they anticipate needing until the next spectrum auction comes around, which can be ten years or more.

Thirdly, large carriers can’t simply kick off the legacy users who have purchased their own phones and data adapters every time a new cellular standard is adopted. The lifetime of the devices is typically less than two years for the average user, but many people hold on much longer. The mean time between standards is on the order of five years, so most people are going to upgrade in an efficient time anyway, and those who don’t tend to be subsidized for upgrading as the carriers urgently need to re-purpose spectrum. This is a foreign concept to The Economist’s European readers because the practice in the EU has been to license spectrum only for use with a specified technology, such as 2G GSM.

Fourth, VoLTE is not yet completely defined, so it’s not possible to invest in it. Carriers need to agree on common standards and have a variety of phones that conform to them, and we’re not quite there yet. And when we are, it will be some time before the user experience of VoLTE matches the 2G GSM voice experience. GSM isn’t good for data, but it’s superb at voice.

Fifth, cellular systems don’t use frequency hopping, nor should they. Frequency hopping has been considered obsolete by Wi-Fi since the late 90s.

Sixth, cellular systems don’t use ultra-wideband, nor should they. UWB is a failed attempt at replacing Bluetooth, and it has no relevance in the cellular world.

Finally, software-defined radios and intelligent antennas have limited value in portable devices, offering at best incremental improvements while draining battery life in an unacceptable way. Not every wireless technology is suitable for smartphones.

Whew.

What’s Sauce for the Goose is Peanut Butter for the Gander

Valéry next sheds some crocodile tears for the broadcast TV and government systems that are among the largest and most inefficient users of spectrum:

But why bother when the FCC is squeezing the armed forces, NASA and other government agencies, as well as the television companies, to release more of their underutilised spectrum, so mobile-phone companies may prosper? It is easier to increase network capacity by adding spectrum than by developing costly new technology.

This is simply a double standard. In the previous two paragraphs, Valéry insists that carriers need to invest massively to improve network efficiency, but he wishes to excuse all other spectrum users of this solemn obligation. Whatever for? Are cellular carriers remarkably venal compared to TV broadcasters and the Pentagon? Is it wrong for firms who provide the public with a service they enjoy (and which happens to improve their quality of life, economic prospects, and personal safety) to prosper? I would simply oblige all users of spectrum to invest in efficiency improvements on a regular basis and to reduce their spectrum footprints for services with diminishing appeal such as over-the-air TV. Consistency should be the key.

Having said all of that, Valéry is still not satisfied that he’s managed to confuse his readers quite thoroughly enough, so he turns to the master of wireless misinformation, David Reed, to deliver the coup de grâce. Reed is a bit of an eccentric who participated in a very small way in the development of the Internet in the late 1970s while a grad student and post-doc at MIT. He spent the 80s and 90s working in the accounting field where he was chief scientist at Visicalc and Lotus during the early personal computer era. He returned to MIT for a brief spell before heading to SAP AG, an enterprise software company based in Germany that seeks to compete with the likes of Oracle. Reed hasn’t done any visible work in wireless systems: he hasn’t been granted any patents, published any papers, or developed any products, but for some ten years he’s been busily offering opinions about wireless interference and mesh networks to anyone who would listen.

Reed’s ideas about wireless networks are simply nonsense. He claims that signals don’t interfere with each other, but intermodulation distortion and inter-symbol interference are produced by signals doing just that. He claims that smart receivers can distinguish overlapping transmissions from each other, but fails to show how that can work when transmitters code and modulate their messages in the same, standards-based way, and he claims that cooperative mesh networks can replace cell towers without explaining how applications can deal with the delay that relaying messages introduces. Reed’s claims are transparently false and naive to the ear of any knowledgeable wireless technologist.

Most recently, Reed was one of three people cited by Brian X. Chen in the New York Times on the cellular capacity crunch in an article that had to be re-written with better sources. Valéry echoes Chen’s claim that the spectrum crunch can be permanently relieved if the carriers – and only the carriers – are forced to be more efficient. In fact, the final four paragraphs appear to be lifted directly from Chen’s spectrum articles, even though the previous arguments offered by Valéry were substantially different from Chen’s. Chen argues that the carriers hoodwinked the FCC and the White House into believing in a non-existent spectrum crunch, while Valéry claims it was the other way around and the FCC actually hoodwinked the carriers. Neither is willing to admit that the spectrum crunch is real.

Great Night at the Ford Theater Except for the Gunplay

Making up spectrum science out of whole cloth, rewriting history on the basis of false claims, downgrading the importance of cellular networks to ordinary people and making network service providers into monsters apparently sells newspapers and magazines and brings eyeballs to web pages, but such practices don’t form the basis of real engineering or sound tech policy.

It’s unfortunately necessary to confront such false claims until their publishers get their acts together or we lose all hope of achieving a sound spectrum policy. Here’s to hoping the former comes about before the latter.