Testing Vindicates LTE-U

A study of LTE-U’s interaction with Wi-Fi – and of real Wi-Fi access points’ interaction with each other – appears to show that Wi-Fi users have nothing to fear from LTE-U and a lot to gain. The study was performed by Signal Research Group (SRG), notably with support from Verizon and Qualcomm, and what sets it apart from other studies is that it tested real systems rather than relying on simulations like the ones concocted by Google and others. SRG found that some Wi-Fi access points are more aggressive than others; one was so aggressive that the testers nicknamed it the “FU 2000”, because it ignored the 802.11 standard and grabbed all the bandwidth it could, without regard for sharing etiquette. In contrast, LTE-U leaves much more capacity on the table for Wi-Fi, so Wi-Fi’s biggest enemy is actually Wi-Fi itself.

This finding is very different from the results of Google’s simulation-based study of Wi-Fi and LTE-U, but the inconsistencies are easy to reconcile. First, Google appears not to appreciate the variation among Wi-Fi access points and devices. Wi-Fi chipsets are programmable with respect to how aggressive they are in the “listen before talk” (LBT) phase. They can wait anywhere from 0 to 31 slot times before transmitting and still be in full compliance with the IEEE 802.11 standard; the wait is supposed to be chosen at random within that range, but nothing enforces this. In the SRG study, APs and devices showed varying degrees of aggressiveness, and this comes down to how narrowly each one limits the range of its random delay. There are Wi-Fi products in the field that limit the delay to tighter ranges, such as 0 – 3, 0 – 7, or 0 – 15 slots. For voice packets, some systems even go down to 0 – 1. This is perfectly legal.
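To see why a narrower random range matters, here is a simplified sketch (not SRG’s or Google’s methodology) of a single contention round: two stations each draw a whole number of backoff slots uniformly from 0 up to their own bound, the lower draw transmits first, and equal draws collide. It ignores AIFS differences, backoff freezing across rounds, and retries, and the function name `contention_odds` is hypothetical.

```python
from fractions import Fraction


def contention_odds(cw_a: int, cw_b: int) -> tuple[Fraction, Fraction, Fraction]:
    """Single-round odds for two stations with backoff ranges 0..cw_a and 0..cw_b.

    Simplified model (an assumption, not the full 802.11 state machine):
    each station draws a whole number of slots uniformly from its range,
    the smaller draw wins, and equal draws collide.
    Returns (P(A wins), P(B wins), P(collision)) as exact fractions.
    """
    if cw_a < 0 or cw_b < 0:
        raise ValueError("contention window bounds must be non-negative")
    a_wins = b_wins = ties = 0
    # Enumerate every equally likely pair of draws.
    for a in range(cw_a + 1):
        for b in range(cw_b + 1):
            if a < b:
                a_wins += 1
            elif b < a:
                b_wins += 1
            else:
                ties += 1
    total = (cw_a + 1) * (cw_b + 1)
    return Fraction(a_wins, total), Fraction(b_wins, total), Fraction(ties, total)


if __name__ == "__main__":
    # An aggressive device (0 to 3 slots) against one using 0 to 15 slots.
    a, b, tie = contention_odds(3, 15)
    print(f"A wins {a} ({float(a):.0%}), B wins {b}, collide {tie}")
```

Under this model, a device limited to 0 to 3 slots wins 27/32 of single rounds (about 84%) against a device using 0 to 15 slots, which wins only 3/32; the remaining 1/16 of rounds collide. Two devices with identical ranges split the wins evenly.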

The other problem with the Google simulation is how crudely it determines when, where (that is, on which channel), and how long to transmit without additional carrier sensing. SRG summarizes how LTE-U handles this in a single slide.

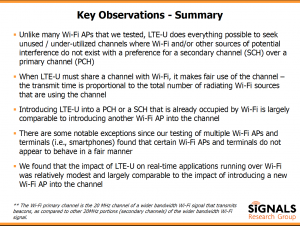

Rather than randomly turning on and off in a random channel for long periods of time, LTE-U does a lot of legwork to decide where, when, and how long to turn on. The LTE-U algorithm is designed to seek out a relatively unused channel, to limit its spectrum footprint to 20 MHz (802.11ac Wi-Fi can bond channels up to 160 MHz wide), and to calculate a “fair share” duty cycle based on its assessment of Wi-Fi activity in the channel it uses. As the slide’s second bullet puts it, “When LTE-U must share a channel with Wi-Fi, it makes fair use of the channel – the transmit time is proportional to the total number of radiating Wi-Fi sources that are using the channel.”
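A toy version of the two steps described above, channel selection followed by a fair-share duty cycle, might look like the sketch below. It reads “fair share” as an equal split of airtime between LTE-U and the N Wi-Fi sources it detects, giving a duty cycle of 1/(N + 1). That interpretation, the `max_duty` cap, the 80 ms cycle length, the example scan data, and the function names are all hypothetical assumptions for illustration, not values from the SRG slides or any LTE-U specification.

```python
def pick_channel(wifi_sources_per_channel: dict[int, int]) -> int:
    """Pick the channel with the fewest detected Wi-Fi sources.

    Ties go to the lowest channel number. Hypothetical selection rule.
    """
    if not wifi_sources_per_channel:
        raise ValueError("no candidate channels")
    return min(wifi_sources_per_channel,
               key=lambda ch: (wifi_sources_per_channel[ch], ch))


def fair_share_on_time_ms(wifi_sources: int, cycle_ms: float = 80.0,
                          max_duty: float = 0.5) -> float:
    """ON time per cycle under an assumed 1/(N+1) fair-share rule.

    max_duty is a hypothetical cap so LTE-U never takes the whole cycle,
    even on a channel where it hears no Wi-Fi.
    """
    if wifi_sources < 0:
        raise ValueError("wifi_sources must be non-negative")
    duty = min(1.0 / (wifi_sources + 1), max_duty)
    return duty * cycle_ms


if __name__ == "__main__":
    # Hypothetical scan: 5 GHz channel number -> Wi-Fi sources heard on it.
    scan = {149: 5, 153: 3, 157: 4, 161: 6}
    channel = pick_channel(scan)
    print(channel, fair_share_on_time_ms(scan[channel]))
```

With the made-up scan above, the sketch picks channel 153 (three Wi-Fi sources) and takes a quarter of each 80 ms cycle, 20 ms, leaving the rest to Wi-Fi.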

The duty-cycle behavior of LTE-U, then, is not significantly different from the behavior of 802.11n (and later versions of the standard) with frame aggregation. When using aggregated frames (A-MPDUs), Wi-Fi stations listen and then send up to 40 frames without a pause; that’s the upper limit, but practical systems typically send 8 to 16 frames of 1500 bytes each without a pause. This doesn’t differ substantially from what LTE-U does with its scheduler.
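A rough calculation shows where the frame counts above come from and how long such an aggregate holds the channel. This sketch assumes 802.11n’s 65,535-byte maximum A-MPDU length and counts only payload bytes, ignoring preambles, MAC headers, and the block acknowledgment; the 150 Mb/s PHY rate and the 38-byte per-frame overhead used in the check are arbitrary assumptions, and the function names are hypothetical.

```python
AMPDU_MAX_BYTES_11N = 65_535  # 802.11n maximum A-MPDU length in bytes


def frames_per_ampdu(frame_bytes: int, per_frame_overhead: int = 0,
                     limit_bytes: int = AMPDU_MAX_BYTES_11N) -> int:
    """How many frames of a given size fit under the A-MPDU length limit."""
    return limit_bytes // (frame_bytes + per_frame_overhead)


def aggregate_airtime_ms(n_frames: int, frame_bytes: int,
                         phy_rate_mbps: float) -> float:
    """Payload airtime of one aggregate, ignoring preamble and MAC overhead."""
    bits = n_frames * frame_bytes * 8
    return bits / (phy_rate_mbps * 1e6) * 1e3


if __name__ == "__main__":
    limit = frames_per_ampdu(1500)  # byte limit alone, no per-frame overhead
    print("frames that fit:", limit)
    for n in (8, 16, limit):
        print(n, "frames:", round(aggregate_airtime_ms(n, 1500, 150.0), 2), "ms")
```

The byte limit alone admits 43 frames of 1500 bytes, or 42 once a few dozen bytes of per-frame overhead are counted, which is close to the 40-frame ceiling mentioned above. At the assumed 150 Mb/s, a 16-frame aggregate carries about 1.28 ms of payload.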

And even when LTE-U is transmitting its version of an aggregate, it doesn’t prevent Wi-Fi systems from transmitting. As the slide says, LTE-U prefers secondary channels to primary channels. So a Wi-Fi system that wants to use wide channels – 40 MHz, 80 MHz, or 160 MHz – will still be able to transmit over narrower channels (20, 40, or 80 MHz) at the very same time that LTE-U is transmitting. This doesn’t require special engineering; it’s simply how standards-based Wi-Fi implementations work. The nice thing about this secondary-channel preference is that it protects Voice over Wi-Fi, which runs perfectly well in a 20 MHz channel.
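The fallback behavior described above can be modeled with a small function. This is a simplified sketch of 802.11ac-style bandwidth selection, assuming the transmitter checks its secondary 20, 40, and 80 MHz channels just before transmitting and uses the widest width whose secondary channels are all idle; the channel labels and the function name are hypothetical, and real implementations add timing and signaling rules omitted here.

```python
# Each step widens the transmission by adding one secondary channel
# to the primary 20 MHz channel (labels are hypothetical).
WIDENING_STEPS = (("secondary20", 40), ("secondary40", 80), ("secondary80", 160))


def usable_width_mhz(busy: set[str], max_width_mhz: int = 160) -> int:
    """Widest bandwidth (MHz) available when the listed secondary channels are busy.

    The primary 20 MHz channel is assumed idle, since the station has
    already won contention on it. Widening stops at the first busy
    secondary channel or at the configured maximum width.
    """
    width = 20
    for channel, wider in WIDENING_STEPS:
        if wider > max_width_mhz or channel in busy:
            break
        width = wider
    return width


if __name__ == "__main__":
    # LTE-U on the secondary 80 MHz channel still leaves 80 MHz for Wi-Fi.
    print(usable_width_mhz({"secondary80"}))
    # LTE-U on the secondary 20 MHz channel still leaves the primary 20 MHz.
    print(usable_width_mhz({"secondary20"}))
```

In this model a busy secondary channel only narrows the Wi-Fi transmission; the primary 20 MHz channel, where a voice call can live, is never taken away.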

So it really is the case that LTE-U is designed to be more polite to Wi-Fi – especially Wi-Fi voice – than Wi-Fi is itself. This is what I expected, so it’s nice to see a study that appears to prove it. I say “appears” because the text of the study hasn’t been published yet. But barring a disconnect between the slides and the study, it’s apparent that Wi-Fi is not in jeopardy.