Streams are Fundamental to Internet Management

Last week, I criticized a blog post by the Center for Democracy and Technology for its failure to regard packet streams as an important component of the Internet:

CDT’s decomposition of Internet data formats is cloudy because it omits the most important element of Internet use, the stream. Packets, protocols, layers, and headers are much less important than the thing they enable, which is streams of data between users and users or users and services. Information streams are the most important element of Internet interaction, and any analysis of the Internet that fails to mention them isn’t very useful.

To understand the importance of streams, we have to step back a bit and build some context. Every network is a system for the allocation of resources, and the resources that communication networks like the Internet allocate are, obviously, communication resources such as transmission opportunities and communication “quality.” Other communication networks manage these resources as well, but they allocate them in different ways and at different times than the Internet does.

Networks of the Past Supported Single Applications

The traditional telephone network allocates communication resources at the time a call is placed: the initial connection sends a resource request from the caller to the called party and the network only rings the phone if it can reserve resources for the duration of the call. Because the network doesn’t know how long the call is going to last, the resources remain reserved until it ends. Calls placed when resources are not available are simply not connected.
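The admission rule described above can be sketched in a few lines. This is a toy illustration rather than a model of any real telephone switch: the class name `CircuitSwitch`, the `capacity` parameter, and the method names are all assumptions made for the example.

```python
class CircuitSwitch:
    """Toy model of call admission on a circuit-switched network (hypothetical)."""

    def __init__(self, capacity):
        self.capacity = capacity      # total circuits the network can reserve
        self.active = set()           # calls currently holding a circuit

    def place_call(self, call_id):
        """Reserve a circuit for the whole call, or refuse the call outright."""
        if len(self.active) >= self.capacity:
            return False              # no resources: the call is not connected
        self.active.add(call_id)
        return True

    def hang_up(self, call_id):
        """Release the circuit only when the call ends."""
        self.active.discard(call_id)


switch = CircuitSwitch(capacity=2)
results = [switch.place_call(c) for c in ("a", "b", "c")]
switch.hang_up("a")
retry = switch.place_call("c")
print(results, retry)  # [True, True, False] True
```

The third call is refused while both circuits are held, and succeeds only after an earlier call ends. Nothing in the model cares whether the callers are actually speaking.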

The cable TV network is similar: it allocates cable channels to TV shows, and stops adding TV shows when the channels are used up. It allocates some channels to Internet service, phone service, and video streaming as well, probably doing so before the linear TV channel lineup is created, but that’s not important for this discussion because it was not part of cable TV’s original purpose.

The cable TV network also has some flexibility in that cable operators can compress TV shows at various bandwidths according to the nature of the content. Sports programs with a lot of fast motion take more bandwidth to transmit than talk shows, so the cable company allocates more “quality” to them. The telephone network doesn’t support different levels of quality; this is one of its greatest drawbacks, but it’s a side-effect of the telephone network’s purpose and regulatory status. If a network only supports one application, it need not allocate resources in a sophisticated manner; it simply has to make that application work. The telephone network’s strategy is known as “circuit switching” because it creates a virtual wire or circuit between the calling and called parties.

The telephone network was perfectly fine until we connected fax machines and modems to it. Because of the network’s single-application design, these digital systems had to adapt to the network’s limitations rather than the other way around. So if 100 people are silent while a modem user is trying to download a file, the telephone network can’t re-allocate quality from the silent phone calls to the download; the download simply proceeds slowly.

The Internet is a Multi-Service Network

The Internet was designed to support at least two computer-to-computer applications, remote login and file transfer. Remote login is when a terminal attached to a local computer is re-attached to a computer at another location. After login, the terminal acts more or less as if the remote computer were close by. File transfer is a different kind of activity in that it’s both non-interactive and highly resource-intensive. If we think of the kinds of text-based files that were common in the early days of the Internet – memos and computer program source code – each consisted of the equivalent of hundreds if not thousands of minutes of sustained typing.

Unlike the cable TV and telephone networks, the Internet allocates resources “just in time” for their use through packet switching, a system that treats each unit of information as if it were independent of all others. Circuit switching wastes resources by reserving them for the full duration of a connection, whether or not they are in use, to ensure it doesn’t fall short. Packet switching wastes resources through contention, by re-computing routes each time a packet traverses the network, and through various inefficient congestion management practices. Each of these inefficiencies has a remedy that is commonly deployed.

As Richard Epstein wrote in his 1998 Harvard Journal of Law and Public Policy article “The Cartelization of Commerce”: “In trying to figure out why one form of economic organization is superior to another, we need to look at the consequences that each generates.” This logic applies to communication networks as well as economic organizations.

The Internet is a multi-service network in two important respects: it supports multiple types of applications on the one hand, and multiple communications technologies on the other. We can run video streaming, messaging, web surfing, voice calling, and video conferencing over Wi-Fi, 4G/LTE, DSL, cable modem and fiber pretty much without regard for how the applications relate to the technologies and vice-versa. We can also use these combinations of applications and technologies to communicate with others who use different technologies as long as our applications are compatible. And we do.

Applications have Different Needs

Applications generally have different needs from networks: voice needs very little communication bandwidth (less than 100 kilobits per second) but also low latency and low packet loss because it doesn’t have time to retransmit lost packets. Video streaming wants much more bandwidth – 4 Mbps for HD streams and about 12 Mbps for 4K streams – but it’s less particular about latency and loss because it buffers packets at the receiving end. You can lose a video streaming connection and still play video for several seconds, as a matter of fact.

In packet-switched networks, applications generally request resources simply by sending packets, although there are exceptions, as we’ll get to shortly. More specifically, applications place outbound packets in memory buffers, from which they are placed on the network as soon as the network is able to accept them. Once on the network, they reach their destinations as quickly as possible after being switched across several data links of different capacities and states of congestion along the way.

At each switching point, a piece of network equipment known as a router makes policy decisions about which packets to discard, where to send each remaining packet next, and whether to bill anyone for transmitted packets. We can judge these decisions according to the consequences each generates, to borrow Professor Epstein’s phrase.

Decisions, Decisions

For some applications, the discard decision is the most critical of the bunch because, like the death penalty, it’s irreversible (for them). For a Skype or other Voice over IP call, once a packet is gone it’s gone and there’s no way to re-send it. This is not the case for video streaming, web surfing, Twitter, or email because all the packets these applications generate are parts of TCP streams that retransmit lost packets automatically. Retransmission adds a little bit of delay to TCP applications, but not so much that the user generally notices. This is because networks are typically faster than video servers and web servers: the typical US wired broadband connection is at least twice as fast as the typical web server, for example.

Networks have to discard packets more frequently than they otherwise might in order to support the congestion control logic that has been designed into TCP since the late 1980s. While routers have the ability to place millions of packets in memory buffers or queues before forwarding them to the next router, deep buffers are a sign of congestion, an indication that senders need to reduce their rate because network resources are effectively exhausted. So a packet discard is like the busy signal on the telephone network.

Very full buffers are commonplace in the specialized head end routers that serve the “last mile” between the Internet Service Provider and the consumer, for a couple of reasons. First, the communication pipes that feed these head end routers (technically known as DSLAMs, CMTSs, cell towers, or a few other names) are fatter than the pipes that empty their internal buffers to the customer premises. In a cable network, the pipe down to the CMTS may be a gigabit per second or so, while the connecting pipe is generally less than 100 Mbps. So even a few users loading web pages at the same time can fill the buffers in a CMTS to the point that it has to drop packets to signal the web server to slow down. The individual web servers top out on average at 15 Mbps, but there are many users for each CMTS, sometimes as many as 400.
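A rough back-of-the-envelope calculation shows how quickly a rate mismatch fills a buffer. The sketch below assumes a burst that arrives at the full feed rate and a 1 MB buffer; the buffer size and the function name `seconds_to_fill` are assumptions chosen for illustration, not figures for any real CMTS.

```python
def seconds_to_fill(buffer_bytes, arrival_bps, drain_bps):
    """Time for a queue to fill when packets arrive faster than they drain.

    Returns None when the drain rate keeps up and the queue never fills.
    """
    surplus_bps = arrival_bps - drain_bps
    if surplus_bps <= 0:
        return None
    return buffer_bytes * 8 / surplus_bps


# The 1 Gbps feed and 100 Mbps customer link come from the text above;
# the 1 MB buffer is an assumed figure used only for illustration.
fill_time = seconds_to_fill(1_000_000, 1_000_000_000, 100_000_000)
print(f"{fill_time * 1000:.1f} ms")  # 8.9 ms
```

Under these assumptions the buffer is full in under a hundredth of a second, after which the router has no choice but to discard something.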

Video Block Sending

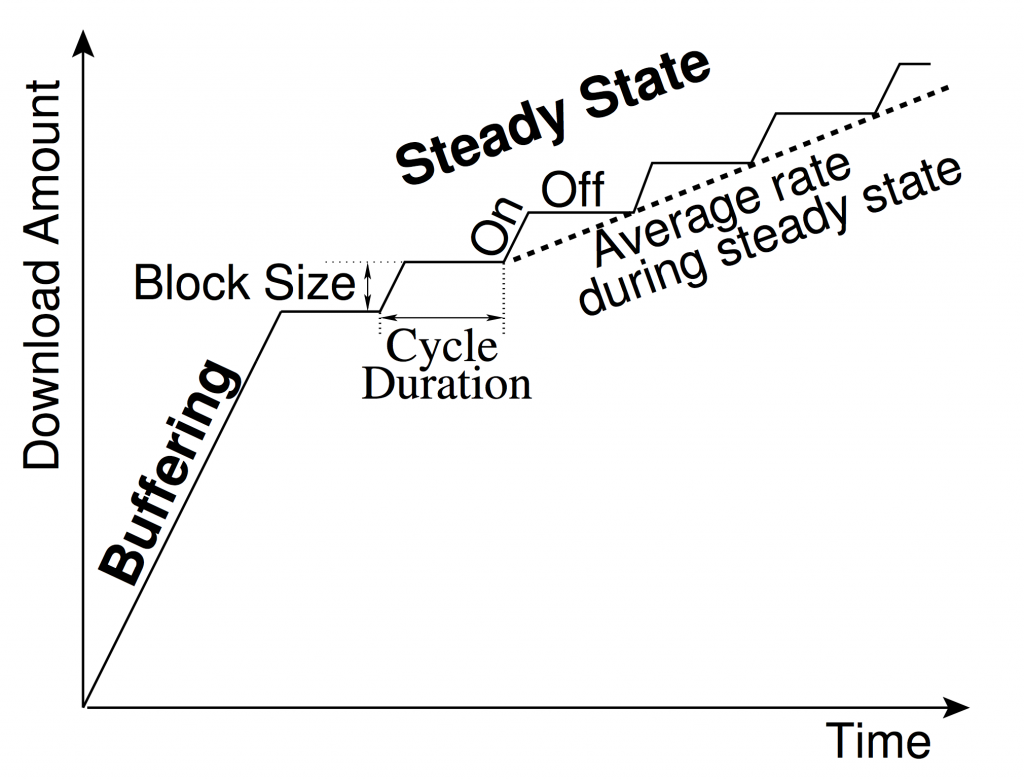

The second reason for full head end buffers is more important: In order to make the best use of its storage resources, a video server sends movie packets in clumps of hundreds of packets at a time punctuated by several seconds of silence. This technique, known as “block sending” in the literature, creates congestion for other applications trying to reach the same destination when a block of video streaming packets is in progress.

Rao et al., “Network Characteristics of Video Streaming Traffic,” ACM CoNEXT 2011, December 6–9 2011, Tokyo, Japan. Copyright 2011 ACM 978-1-4503-1041-3/11/0012

These blocks of packets can last for several seconds, but the “on” interval is always less than the “off” interval. When blocks of video packets are forwarded to the customer, they don’t play out immediately; rather, they occupy space in buffers in the end user’s equipment (a TiVo, Amazon Fire TV stick, Apple TV or the like) until they’re needed on the final screen. So the ISP is forced to deal with the fact that packets are parts of streams whether it wants to or not.
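The relationship between the burst rate and the rate the player actually consumes is simple arithmetic. Here is a minimal sketch; the function name and the numbers are hypothetical and are not taken from the paper cited above.

```python
def average_rate_mbps(burst_rate_mbps, on_seconds, off_seconds):
    """Long-run average rate of an on/off ("block sending") source."""
    cycle = on_seconds + off_seconds
    return burst_rate_mbps * on_seconds / cycle


# Hypothetical numbers: 2 s bursts at 20 Mbps separated by 6 s of silence.
avg = average_rate_mbps(20, 2, 6)
print(avg)  # 5.0
```

In this example the network sees bursts at four times the rate the stream needs on average, which is the gap a traffic manager can smooth out without the viewer noticing.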

What Would You Do?

So what policy would you use to judge which packets should be discarded, which are to be forwarded, and in which order they are to be forwarded if you had control of the CMTS router that serves your home or office?

One approach would be to simply forward each packet you get in the order received until you reach some limit on your buffering, and then drop all new packets until your buffer is less full. This approach has been promoted by a number of advocates as the most “neutral” technique and as one that doesn’t violate their sense of network privacy.
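This first-come, first-served policy is usually called a FIFO queue with tail drop. A minimal sketch follows; the class name `TailDropQueue` and the packet labels are made up for the example, and a real router would count bytes rather than whole packets.

```python
from collections import deque


class TailDropQueue:
    """First-in, first-out buffer that drops new arrivals when full."""

    def __init__(self, limit):
        self.limit = limit            # maximum number of buffered packets
        self.packets = deque()
        self.dropped = []

    def enqueue(self, packet):
        if len(self.packets) >= self.limit:
            self.dropped.append(packet)   # buffer full: newest packet is lost
            return False
        self.packets.append(packet)
        return True

    def dequeue(self):
        """Forward the oldest packet, or return None if the buffer is empty."""
        return self.packets.popleft() if self.packets else None


q = TailDropQueue(limit=3)
for p in ["video-1", "video-2", "video-3", "voice-1"]:
    q.enqueue(p)
print(list(q.packets), q.dropped)
# ['video-1', 'video-2', 'video-3'] ['voice-1']
```

The queue never looks at what a packet is or which stream it belongs to. Whatever arrives when the buffer is full is the packet that gets dropped.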

While it may be neutral (in some sense of the word), it’s not actually fair as most of us understand that word. Imagine a scenario in which the buffer is entirely full of a portion of a video streaming block and a Skype packet comes in. You know the buffered packets are parts of a video block because of their source, destination, protocol (HTTP over TCP) and volume. You know the Skype packet is not associated with the buffered packets because its source, destination, protocol, and length (300 bytes vs. 1500 bytes for the video block) are different.

Would you discard the Skype packet, knowing that once it’s lost it’s gone forever, or would you discard one of the video packets to give the Skype packet a place in the queue of packets going to the end user?

The Benefits of Stream Awareness

If your traffic management system is aware of streams and not merely the packets that compose them, it’s an easy choice: the Skype packet moves to the head of the line and one of the video packets bites the dust. In fact, your traffic management system probably maintains a group of queues for each end user such that the voice streams have their own queue reserved for their exclusive use, where that queue has priority over the video streaming queue. And just for good measure, you may have additional queues for video conferencing and web surfing that have higher priority than the video streaming queue as well.
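One way to picture such a system is a set of per-class queues that share a buffer limit and are served in strict priority order. The sketch below is an assumption-laden illustration, not a description of any vendor’s CMTS: the class names, their ordering in `PRIORITY`, and the choice to evict the newest lower-priority packet are all hypothetical.

```python
from collections import deque

# Lower number = higher priority. The class names and their ordering are
# assumptions that mirror the example in the text, not a standard.
PRIORITY = {"voice": 0, "conferencing": 1, "web": 2, "video": 3}


class StreamAwareBuffer:
    """Per-class queues sharing one buffer limit, served in strict priority."""

    def __init__(self, limit):
        self.limit = limit
        self.queues = {name: deque() for name in PRIORITY}
        self.dropped = []

    def _size(self):
        return sum(len(q) for q in self.queues.values())

    def enqueue(self, stream_class, packet):
        if self._size() >= self.limit:
            # Buffer full: look for a lower-priority victim, lowest class first.
            for name in sorted(PRIORITY, key=PRIORITY.get, reverse=True):
                if PRIORITY[name] > PRIORITY[stream_class] and self.queues[name]:
                    self.dropped.append(self.queues[name].pop())
                    break
            else:
                self.dropped.append(packet)   # nothing lower to evict
                return False
        self.queues[stream_class].append(packet)
        return True

    def dequeue(self):
        """Forward from the highest-priority non-empty queue."""
        for name in sorted(PRIORITY, key=PRIORITY.get):
            if self.queues[name]:
                return self.queues[name].popleft()
        return None


buf = StreamAwareBuffer(limit=3)
for i in (1, 2, 3):
    buf.enqueue("video", f"video-{i}")
buf.enqueue("voice", "voice-1")
first = buf.dequeue()
print(first, buf.dropped)  # voice-1 ['video-3']
```

With the buffer full of video, the arriving voice packet displaces one video packet and is forwarded first. The displaced video packet travels over TCP, so the sender will retransmit it.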

ISPs are motivated to manage streams this way because their final goal is customer satisfaction. The streaming application only needs the “average rate during steady state” in the diagram above, so any allocation of resources in excess of this average rate is counter-productive, an over-allocation of communication resources that fails to produce an increase in the user’s perception of quality while causing harm to other applications.

So this is why it’s important to think about the Internet in terms of streams rather than packets. The Internet is meant to be a multi-service network, and to achieve this goal it has to differentiate streams according to their nature, purpose, and performance requirements. The failure to manage it this way leads to excessive cost and sub-optimal performance, the usual consequences of poor resource management.

Judging from the consequences, stream-aware management is the way to go. In a subsequent post, we will examine the objections to stream-aware management in order to learn why it has become controversial.