Reports from NAB and CTIA Address Efficient Use of Spectrum

Two recent contributions to the mobile broadband spectrum debate are reports from NAB and CTIA. Before reading them I envisioned writing a “dueling reports” piece, but they mostly complement each other. Below I walk through the main points, adding some of my own views.

NAB: Shortages of Capacity, Not Spectrum

The NAB report was prepared by Uzoma Onyeije, a consultant who was once Broadband Legal Advisor to the Chief of the FCC’s Wireless Telecommunications Bureau. The main claims are that there is no need for an urgent and massive reallocation of spectrum, that there are numerous alternatives to spectrum that can boost network capacity, and that sources of spectrum other than TV are more readily available.

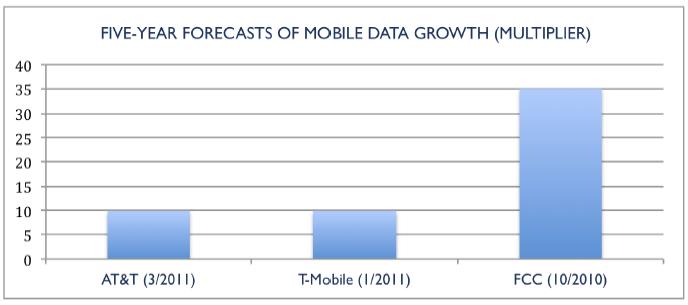

It starts by noting there wasn’t a “spectrum crisis” until the American Recovery and Reinvestment Act of 2009, which required the FCC to promote broadband access. The National Broadband Plan followed, calling for 500 MHz of spectrum to be made available for broadband within 10 years, with 300 MHz of that for mobile within five years, and 120 MHz of that to come from television broadcasting. Seven months after concluding that 300 MHz was needed in the short term, the FCC released a Technical Paper intended to support the 300 MHz figure. In November I discussed that Paper, pointing out several factors it did not consider that, had they been considered, would have reduced the estimate of short-term spectrum requirements. Later, I questioned the appropriateness of the FCC relying on a forecast prepared by the marketing department of an equipment vendor, without critically and openly examining the assumptions that went into the forecast. Onyeije shares some of the concerns I had, and still have, about that Paper.

I expect the American Recovery and Reinvestment Act turned some spectrum wants into needs, but by all accounts mobile network data volumes are increasing significantly, fed by a volatile mixture of old flat-rate plans and new bandwidth-hungry devices, though the growth rate of those data volumes is decreasing. Getting additional spectrum is a natural option to consider for more capacity. Onyeije provides a list of non-spectrum options. Some have been mentioned here before – offloading to Wi-Fi and other technologies, adjusting rate plans so the largest data users pay more, and tighter software coding of applications and operating systems. I don’t think I’ve discussed channel bonding, a technique that uses non-contiguous spectrum by combining a sliver here and a sliver there. Support for it will appear in LTE-Advanced, expected to go on the air in a few years. Backhaul is also something I haven’t focused on; how much of the current spectrum crunch is really due to backhaul bottlenecks?
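Channel bonding (called carrier aggregation in LTE-Advanced) can be sketched as pooling the bandwidth of separate component carriers that need not sit next to each other. In this minimal sketch the carrier positions are hypothetical and the function names are illustrative; the only standard values assumed are the LTE channel bandwidths and the five-carrier limit of LTE-Advanced Release 10.

```python
# LTE channel bandwidths (MHz) a component carrier may use.
LTE_CHANNEL_BANDWIDTHS_MHZ = {1.4, 3, 5, 10, 15, 20}
MAX_COMPONENT_CARRIERS = 5  # LTE-Advanced Release 10 limit


def aggregate(carriers):
    """Total aggregated bandwidth (MHz) for (start_mhz, bandwidth_mhz) carriers."""
    if len(carriers) > MAX_COMPONENT_CARRIERS:
        raise ValueError("too many component carriers")
    for _, bw in carriers:
        if bw not in LTE_CHANNEL_BANDWIDTHS_MHZ:
            raise ValueError(f"unsupported carrier bandwidth: {bw} MHz")
    return sum(bw for _, bw in carriers)


def is_contiguous(carriers):
    """True only if each carrier starts exactly where the previous one ends."""
    ordered = sorted(carriers)
    return all(a_start + a_bw == b_start
               for (a_start, a_bw), (b_start, _) in zip(ordered, ordered[1:]))


# Hypothetical slivers in three different bands.
slivers = [(700, 5), (1900, 10), (2100, 5)]
print(aggregate(slivers), is_contiguous(slivers))  # 20 False
```

The point of the sketch is that three separate slivers yield a single 20 MHz pool even though no two of them are adjacent.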

Another capacity-increasing technique mentioned is sectorization: changing a non-directional transmission system to a directional one using two or more sectors at the same cell site. In the most congested urban areas in the US, antenna systems are generally configured with three sectors, each 120 degrees wide. You don’t see many six-sector configurations, in which each sector is 60 degrees wide. Theoretically, that doubles capacity from the same tower. A long time ago there was more activity in six-sector antenna systems, but the sense then was that it wasn’t very practical; you might end up with just a 70% capacity increase because of real-world issues such as imperfect antenna patterns, and it was hard to justify the expense. These days, however, with better transmission technology, it deserves another look. I note SK Telecom in Korea is deploying 500 six-sector sites, after good results with 20 test sites.
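The sectorization arithmetic can be written out as a rough sketch. It assumes every sector reuses the same spectrum and carries equal load; the `overhead_loss` parameter is a hypothetical knob, and the 15% value below is chosen only to reproduce the roughly 70% real-world increase mentioned above, not a measured figure.

```python
def sectorization_gain(old_sectors, new_sectors, overhead_loss=0.0):
    """Capacity multiplier from re-sectorizing a site.

    Ideally capacity scales with the number of sectors, since each sector
    reuses the same spectrum. overhead_loss is the fraction of that ideal
    multiplier lost to real-world effects such as imperfect antenna
    patterns and sector overlap (an assumed parameter).
    """
    ideal = new_sectors / old_sectors
    return ideal * (1.0 - overhead_loss)


print(f"{sectorization_gain(3, 6):.2f}")        # 2.00: theoretical doubling
print(f"{sectorization_gain(3, 6, 0.15):.2f}")  # 1.70: ~70% increase
```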

Onyeije looks at the spectrum warehousing issue. If an operator has spectrum that isn’t being used, but is on track to build it out, I’m fine with it lying fallow for a year or so, maybe longer if the delay is to wait for much more efficient transmission technology that is on track in the standards process. If it is just sitting there with no build-out requirement and no prospect for utilization, I’d think the operator’s investors would create pressure to sell it. If a spectrum holder has “no plans to sell, lease or use” its spectrum, to quote one in Onyeije’s report, I’m more concerned.

Aside from the warehousing issue, Onyeije identifies a few bands that have been languishing at the FCC for years and argues that, since they have been idle for so long, the spectrum crisis must not be so severe. These are the AWS-3 block at 2155-2175 MHz; the H block at 1915-1920 MHz and 1995-2000 MHz; the J block at 2020-2025 MHz and 2175-2180 MHz; and the 700 MHz D block at 758-763 MHz and 788-793 MHz. What’s the story there?
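Tallying the band edges listed above gives a sense of how much idle spectrum is at issue. This small sketch uses only the ranges quoted from the report; the dictionary and function names are illustrative.

```python
# Band edges (MHz) as quoted from the NAB report.
BANDS = {
    "AWS-3": [(2155, 2175)],
    "H block": [(1915, 1920), (1995, 2000)],
    "J block": [(2020, 2025), (2175, 2180)],
    "700 MHz D block": [(758, 763), (788, 793)],
}


def block_width_mhz(ranges):
    """Sum the widths of a block's (low, high) frequency ranges."""
    return sum(high - low for low, high in ranges)


totals = {name: block_width_mhz(ranges) for name, ranges in BANDS.items()}
grand_total = sum(totals.values())
print(totals)       # AWS-3: 20, H block: 10, J block: 10, 700 MHz D block: 10
print(grand_total)  # 50
```

By this count the listed blocks total 50 MHz, of which 40 MHz is in the 2 GHz range and 10 MHz is the 700 MHz D block.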

Onyeije suggests mandatory receiver standards. Receivers are already very good in mobile broadband because of vendor competition and the need to operate in a congested environment. Receiver design is proprietary and an important source of differentiation among vendors. I’d think continued improvement of receiver performance in the marketplace, in the long run, would achieve greater capacity benefit than imposed government standards.

The report calls on the FCC to complete and publicly release a comprehensive spectrum inventory, along the lines of the Snowe-Kerry RADIOS Act, which includes measurements. The FCC has made several spectrum tools available online, including LicenseView and the Spectrum Dashboard, which it says is its inventory. According to their disclaimers, however, LicenseView “is not intended for analysis of spectrum utilization or spectrum holdings of licensees” and “the FCC makes no representations regarding the accuracy or completeness of the information maintained in the Spectrum Dashboard.” Regarding federal spectrum, I’d add that an inventory becomes more important in light of GAO’s report on NTIA processes that said “NTIA cannot ensure that spectrum is being used efficiently by federal agencies” in part because “NTIA’s data collection processes lack accuracy controls and do not provide assurance that data are being accurately reported by agencies.” Thus, “it is unclear whether important decisions regarding current and future spectrum needs are based on reliable data.”
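What “includes measurements” might mean in practice can be illustrated with a simple duty-cycle occupancy metric: the fraction of sampled time a channel’s received power exceeds a detection threshold. This is a hypothetical sketch. The function name, threshold, and sample values are illustrative assumptions and are not taken from the RADIOS Act or from any FCC tool.

```python
def occupancy(power_dbm_samples, threshold_dbm):
    """Fraction of measurement samples whose received power exceeds the
    detection threshold (a simple duty-cycle occupancy metric)."""
    if not power_dbm_samples:
        raise ValueError("no samples")
    busy = sum(1 for p in power_dbm_samples if p > threshold_dbm)
    return busy / len(power_dbm_samples)


# Hypothetical power readings (dBm) from one channel, sampled over time.
samples = [-110, -72, -108, -65, -109, -70, -111, -104]
print(occupancy(samples, threshold_dbm=-100))  # 0.375
```

A real inventory would also have to settle sampling duration, location, and threshold choice, which is exactly where accuracy controls matter.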

CTIA, CEA, and WCAI dismiss the NAB report, saying it’s a stalling tactic and that they already know these things. One of Onyeije’s points, however, is that it’s the Commission that needs to know these things, and to fully investigate and quantify the impact of all capacity-generating alternatives. It has not. It tried with the Technical Paper, but inadequately.

CTIA Establishes the Efficient Properties of Cellularization

The CTIA report is intended to demonstrate that US mobile wireless providers are “extremely efficient” in their use of spectrum. The report was prepared by Peter Rysavy, a consultant known in wireless circles for his series of technical reports, with many pertaining to spectrum, air-interface, and mobile device issues.

This report seems to be a response to an NAB claim, some time back, that broadcasting is a more efficient user of spectrum than wireless. I presume NAB’s claim is based on broadcasters’ DTV system transmitting about 19 Mbps in a 6 MHz bandwidth, while the wireless operators are sending about 10 Mbps in a 10 MHz bandwidth. (So, TV has more bits per Hertz.) This is something of an apples-and-oranges comparison, but the comparison has been made and we have this report in response. Having spent a lot of time in 3G and 4G standards battles, I have no doubt that those participating are trying to wring out all the efficiency that is both possible and practical. Wireless standards groups sweat to get another tenth of a dB improvement. Of course, part of efficiency is an implementation issue and not covered by standards. I agree cellular services are more efficient at delivering unicast traffic. Broadcasters, however, can be more efficient in another way. The efficiency debate occurs in part because we have not agreed on a definition of efficiency. More on this below.
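The bits-per-Hertz comparison presumed above reduces to one division per system. This sketch uses only the approximate figures in the text; note that it measures a single transmitter and ignores frequency reuse across cells, which is why the comparison is apples-to-oranges.

```python
def spectral_efficiency(rate_mbps, bandwidth_mhz):
    """Bits per second per Hertz; the 10^6 factors in Mbps and MHz cancel."""
    return rate_mbps / bandwidth_mhz


dtv = spectral_efficiency(19, 6)        # broadcast DTV: ~19 Mbps in 6 MHz
wireless = spectral_efficiency(10, 10)  # wireless: ~10 Mbps in 10 MHz
print(f"DTV: {dtv:.2f} b/s/Hz, wireless: {wireless:.2f} b/s/Hz")
# DTV: 3.17 b/s/Hz, wireless: 1.00 b/s/Hz
```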

The CTIA paper starts with a section on spectral efficiency. It discusses the fundamental measures of spectral efficiency and the technologies that have been used to continually improve it, including adaptive modulation and coding. (Rysavy says his list of technologies is not exhaustive, but to his list I’d add Hybrid Automatic Repeat Request as a key enabler.)
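Adaptive modulation and coding picks, for each transmission, the most efficient modulation and code rate the current signal-to-noise ratio will support. A minimal sketch follows. The SNR thresholds in the table are illustrative assumptions, not values from any standard; real systems derive the choice from channel-quality reports, and HARQ then retransmits the occasional block that fails.

```python
# Hypothetical (min_snr_db, modulation, code_rate) table, most efficient first.
# Thresholds are illustrative only, not taken from any standard.
MCS_TABLE = [
    (18.0, "64QAM", 3 / 4),
    (12.0, "16QAM", 3 / 4),
    (6.0, "16QAM", 1 / 2),
    (2.0, "QPSK", 1 / 2),
    (-2.0, "QPSK", 1 / 4),
]
BITS_PER_SYMBOL = {"QPSK": 2, "16QAM": 4, "64QAM": 6}


def select_mcs(snr_db):
    """Return (modulation, code_rate, info_bits_per_symbol) for the most
    efficient scheme whose SNR threshold is met, or None if none is."""
    for min_snr, modulation, rate in MCS_TABLE:
        if snr_db >= min_snr:
            return modulation, rate, BITS_PER_SYMBOL[modulation] * rate
    return None  # link too poor to schedule


print(select_mcs(20))  # ('64QAM', 0.75, 4.5)
print(select_mcs(8))   # ('16QAM', 0.5, 2.0)
```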

Rysavy observes that the industry’s technologies are operating close to the Shannon Bound, the theoretical limit on the spectral efficiency that can be achieved for a given signal-to-noise ratio. Capacity improvements thus must come from advanced antenna techniques (such as MIMO) and topology evolution (e.g., adding picocells to a macrocell).
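The Shannon Bound for a single link is C/B = log2(1 + SNR) bits per second per Hertz. The sketch below evaluates it. The MIMO helper is an idealized assumption, namely min(n_tx, n_rx) equal, parallel, interference-free spatial streams each at the same SNR, meant only to show why antenna techniques can push past the single-link bound, not to model a real channel.

```python
import math


def shannon_efficiency(snr_db):
    """Shannon bound on spectral efficiency, log2(1 + SNR), in b/s/Hz."""
    snr_linear = 10 ** (snr_db / 10)
    return math.log2(1 + snr_linear)


def idealized_mimo_efficiency(n_tx, n_rx, snr_db):
    """Idealized: min(n_tx, n_rx) parallel streams, each at snr_db."""
    return min(n_tx, n_rx) * shannon_efficiency(snr_db)


print(shannon_efficiency(0))                          # 1.0
print(round(shannon_efficiency(20), 2))               # 6.66
print(round(idealized_mimo_efficiency(2, 2, 20), 2))  # 13.32
```

Because the bound grows only logarithmically with SNR, adding spatial streams or shrinking cells (which raises SNR and reuses spectrum) buys far more than squeezing the single link further.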

The report is hopeful on the prospects for Wi-Fi and femtocells to relieve traffic on the macro-cellular network. I’m somewhat more cautious on the potential of femtocells to relieve the capacity crunch. For various reasons, including interference management, I think femtocells may get pulled out of the home and put up in neighborhoods using existing structures for support. (The more favorable pole-attachment rules recently adopted by the FCC are timely.) There are many small-cell trials underway, but I haven’t seen much in the way of results.

Network evolution is discussed in a larger sense, focusing on developments in heterogeneous networks, but Rysavy says that’s not enough and that more spectrum is needed, too.

Back to the efficiency issue, the efficiency of cellular systems is compared to that of broadcast television. The point made is that if you take many small cells and place them within a larger area covered by one transmitter (e.g., one for TV), the cellular system can deliver many times the unique bits in that area. This is true, if that is the definition of efficiency. Let’s look at it another way and compare the maximum number of users served by each scheme. As a best-case scenario, assume the cellular users are using a low-bit-rate application such as LTE VoIP. In 10 MHz we can support about 400 users per sector. That times 3 sectors is 1,200 users per cell. That times 30 cells (as per the example in the paper) is 36,000 users that can be supported at once. In contrast, a TV station covering the same area can support an unlimited number of users, albeit one-way, since it is limited by neither uplink capacity nor MAC addresses. Is it a fair comparison? No. One is broadcasting and the other is cellular. Can’t cellular broadcast also? Yes, but to the extent it does, the unique-bits argument becomes weaker. We can go around and around. The television example is used, along with other analysis in that section, in an attempt to persuade the reader that “cellular architectures represent a configuration that is capable of providing tremendous service capacity to its users.” I’m convinced, but I was before reading the report.
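The simultaneous-user arithmetic above is a product of three factors. This sketch uses the figures from the text (about 400 VoIP users per 10 MHz sector, 3 sectors per cell, 30 cells); the function name is illustrative.

```python
def simultaneous_voip_users(users_per_sector, sectors_per_cell, cells):
    """Best-case concurrent VoIP users across a cellular deployment."""
    return users_per_sector * sectors_per_cell * cells


per_cell = simultaneous_voip_users(400, 3, 1)
total = simultaneous_voip_users(400, 3, 30)
print(per_cell, total)  # 1200 36000
```

A one-way broadcast audience has no such cap, since receivers never contend for uplink or scheduling resources.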

Rysavy depicts how voice minutes, message volume, and data volume have increased on cellular networks over the years. Yes, growth has been dramatic, but the growth rate is slowing.
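“Growth is dramatic” and “the growth rate is slowing” are compatible: volumes can rise every year while the year-over-year percentage falls. The sketch below shows this with illustrative volumes chosen for the example. They are not Cisco, CTIA, or any operator’s data.

```python
def yoy_growth_rates(volumes):
    """Year-over-year growth rates as fractions (0.8 means +80%)."""
    return [(cur - prev) / prev for prev, cur in zip(volumes, volumes[1:])]


# Illustrative volumes only: each year is larger than the last,
# but by a shrinking percentage.
volumes = [100, 200, 360, 576]
rates = yoy_growth_rates(volumes)
print([f"{r:.0%}" for r in rates])  # ['100%', '80%', '60%']
```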

Epilogue

Concurrent with this debate, ATSC is in the early stages of planning and developing a second DTV standard to replace the current one, which has been around for about 15 years. LTE specifications support broadcasting, which can be done in a cellular manner on a single frequency. Transmissions are synchronized so the terminal can combine energy from multiple sites. The broadcasters looked at cellularization a year ago assuming use of the current ATSC DTV standard, and rightly found it was not practical; that standard just wasn’t designed for the purpose. With the new LTE standards, it’s time to look at TV cellularization again, but with LTE as a core technology. There could be a return path and inexpensive receiver chips, and it might be done in less than 100 MHz, making over 200 MHz available for auction. With DTV, the broadcasters found significant deployment and operating costs with cellularization, but with LTE the infrastructure would be shared; whether it’s a viable business remains to be determined. The technology is there; it just has to be architected by broadcasters and infrastructure vendors into suitable form.
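The benefit of synchronized single-frequency transmission can be sketched with a simplified model: signals from different sites that arrive within the OFDM cyclic prefix add to the useful signal, while later arrivals act as interference. The 16.67 µs figure is LTE’s extended cyclic prefix, which MBSFN subframes use; at the speed of light that corresponds to roughly 5 km of path-length difference. The powers, delays, and function names below are hypothetical, and real receivers see partial rather than all-or-nothing contributions near the prefix boundary.

```python
import math

EXTENDED_CP_US = 16.67  # LTE extended cyclic prefix (microseconds)


def sfn_sinr_db(arrivals, noise, cp_us=EXTENDED_CP_US):
    """Simplified SFN SINR. arrivals: (delay_us after first path, power)
    in arbitrary linear units. Arrivals within the cyclic prefix combine
    as signal; later arrivals count as interference."""
    signal = sum(p for d, p in arrivals if d <= cp_us)
    interference = sum(p for d, p in arrivals if d > cp_us)
    return 10 * math.log10(signal / (interference + noise))


def unicast_sinr_db(arrivals, noise):
    """Reuse-one unicast for comparison: only the serving (first) site is
    signal; every other site's transmission is interference."""
    serving = arrivals[0][1]
    others = sum(p for _, p in arrivals[1:])
    return 10 * math.log10(serving / (others + noise))


# Hypothetical: serving site, a nearby site 5 us later, a distant one at 30 us.
sites = [(0.0, 1.0), (5.0, 0.5), (30.0, 0.2)]
print(round(sfn_sinr_db(sites, noise=0.1), 2))      # 6.99
print(round(unicast_sinr_db(sites, noise=0.1), 2))  # 0.97
```

In this toy case the same three transmitters yield about 7 dB when synchronized for broadcast versus about 1 dB when they carry independent unicast traffic.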

[cross-posted from Steve Crowley’s blog]

Some issues:

What’s the source for the claim that spectrum demand is declining? What I’ve seen says that Android users and iPad users consume more bandwidth than iPhone users, so I can’t see any basis for that claim.

Second, sectorization is a means of using more spectrum, not a more efficient use of existing spectrum. The more sectors you have, the more overlap that has to be disambiguated with codes or frequencies. Korean mobile has a lot more spectrum allocated than US mobile, hence it can be more highly sectored. It’s a shame that radio waves don’t know Cartesian geometry; things would be better if they did.

Third, the Cisco forecasts are not prepared by marketing people with gear to sell, they’re based on measurements of actual traffic and are historically conservative. Cisco does not sell cellular base stations in any case; in fact, their wireless product line is limited to Wi-Fi and the product managers for that stuff tend to be former engineers.

There is no claim in my piece that spectrum demand is declining. You may be referring to my reference to “growth rate” of data volumes declining. One basis would be Cisco’s VNI report, Figure 1. Year to year, the percentage of growth declines.

I disagree on sectorization, and think it can be a more efficient means of using existing spectrum. In LTE, in the typical, simplest deployment, all sectors in an area will be transmitting on the same frequency band. The frequency reuse factor is one. SNR will be lowest, and data rates lowest, at the cell boundaries. In non-simplest deployments, there are ways to schedule OFDM subcarriers in time and frequency to improve cell-edge performance at the cost of capacity. I think most LTE deployments in the US are keeping the three-sector topology, so sectorization doesn’t seem to be much of a problem for LTE so far.
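One common scheme for trading capacity against cell-edge performance is fractional frequency reuse: a center band reused everywhere at reuse one, plus a set of edge sub-bands, each reserved for one cell’s edge users. The sketch below is a hypothetical static plan with illustrative names and an arbitrary 180-subcarrier edge split. Real deployments implement such coordination dynamically in vendor schedulers. The 600 subcarriers correspond to a 10 MHz LTE carrier (50 resource blocks of 12 subcarriers).

```python
def ffr_plan(n_subcarriers, n_edge, n_cells=3):
    """Fractional frequency reuse sketch.

    The lowest n_edge subcarriers are split into n_cells disjoint edge
    sub-bands, one per cell, for that cell's edge users. The remaining
    subcarriers form a center band reused by every cell.
    """
    if n_edge % n_cells:
        raise ValueError("n_edge must divide evenly among cells")
    per_cell = n_edge // n_cells
    center = range(n_edge, n_subcarriers)
    return {
        cell: {"center": center,
               "edge": range(cell * per_cell, (cell + 1) * per_cell)}
        for cell in range(n_cells)
    }


def usable_fraction(plan, n_subcarriers, cell):
    """Share of the channel a single cell may schedule under the plan."""
    bands = plan[cell]
    return (len(bands["center"]) + len(bands["edge"])) / n_subcarriers


plan = ffr_plan(600, n_edge=180)
print(usable_fraction(plan, 600, 0))  # 0.8
```

Each cell gives up 20% of the channel in this example; in return, edge users in neighboring cells never collide on their reserved sub-bands.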

You say that Cisco forecasts are not prepared by marketing people with gear to sell; that’s refuted by Cisco itself in the first 10 seconds of this video:

http://www.youtube.com/watch?v=dR-qfs0bX-k

Here’s an example web page in which Cisco suggests its equipment for demand it forecasts: http://www.cisco.com/en/US/netsol/ns1096/networking_solutions_solution.html

It’s the same conflict of interest any vendor has when preparing sales literature. It’s not wrong. For the FCC to rely on such literature as the basis of spectrum policy, however, I find inappropriate unless it takes, at a minimum, various safeguards I have suggested in the past. If we’re going to use one vendor’s own forecasts, let’s open it up to all vendors, and not select three forecasts that happen to support a conclusion made seven months earlier.

Regarding the role of actual traffic, that seems to be secondary. According to Cisco, “The forecast relies on analyst projections for Internet users, broadband connections, video subscribers, mobile connections, and Internet application adoption. Our trusted analyst forecasts come from Kagan, Ovum, Informa, IDC, Gartner, ABI, AMI, Screen Digest, Parks Associates, Pyramid, and a variety of other sources. Cisco also collects traffic data directly from a number of our service provider customers, and this data is used to validate and adjust the usage assumptions underlying the forecast model.”

So, the Cisco forecast relies primarily on other forecasts, which today vary by up to two orders of magnitude. Select service provider data is used to “adjust” assumptions. What assumptions?

As to the forecasts being historically conservative, I tried to find the older forecasts on Cisco’s web site to see by how much, but could find only last year’s. If they are archived somewhere, I’d like to see them.

Yes, Cisco doesn’t sell cellular base stations but it sells core network hardware to mobile broadband operators. Thus, if we are going to rely on forecasts prepared by vendors, let’s include the ones that develop base stations, and better understand the air interface that is a key constraint on mobile broadband capacity today.

The FCC issued a Public Notice today seeking comment on using the 2 GHz bands identified as “languishing” by NAB, some of which are listed above. They total 75 MHz.

http://transition.fcc.gov/Daily_Releases/Daily_Business/2011/db0520/DA-11-929A1.pdf