The Driverless Uber of Networking

If the future of personal transportation is driverless Uber, it’s probably not a great idea to build a four car garage unless you’re hankering for a woodshop. Even if driverless cars are overhyped, it’s prudent to start looking at transitioning away from traditional cars and looking into electrics at some point. They may only be a complement to big, comfy SUVs, but high tech cars have a number of virtues. We don’t always look at broadband networks with an eye to the future, however.

Network Quality and Cost

Networks are all about quality and cost. Discussions about broadband quality generally devolve to capacity (or “speed” in policy shorthand) pretty quickly. Capacity doesn’t matter as much as reliability, consistency, and security, but it’s the easiest thing to measure. So we can make meaningful distinctions between broadband plans and policies using capacity as a starting point as long as we understand it’s only a proxy for the more subtle characteristics of a broadband connection. Unfortunately, there are different ways to measure speed: there are peaks, valleys, averages, peak-to-average ratios, and all sorts of other ways to look at it. The Internet is a shared-resource statistical system where performance is expected to vary with load, but that’s not really appreciated outside of network engineering circles.
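To see how much the choice of metric matters, here is a minimal Python sketch that summarizes one set of throughput readings several ways. The function name `speed_summary` and the sample values are made up for illustration; they are not drawn from Speedtest, Akamai, or any other measurement source.

```python
from statistics import mean

def speed_summary(samples_mbps):
    """Summarize throughput samples (in Mbps) in several common ways."""
    peak = max(samples_mbps)
    valley = min(samples_mbps)
    average = mean(samples_mbps)
    return {
        "peak": peak,
        "valley": valley,
        "average": average,
        # How far the best reading sits above the typical one.
        "peak_to_average": peak / average,
    }

# Hypothetical readings from one connection at different times of day.
samples = [20, 60, 40, 80, 50]
summary = speed_summary(samples)
print(summary)
```

The same five readings yield a "speed" anywhere from the valley to the peak depending on which number gets quoted, which is why it pays to ask what a headline figure actually measures.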

Some advocates, some journalists, and most journalist/advocates continue to make speed comparisons in a misleading manner. The Electronic Frontier Foundation (EFF) was once the Internet’s privacy hawk, but has since become an advocate for the erosion of copyright. In a recent article on municipal broadband, EFF asserted that the average broadband speed in the US was a mere 9.8 Mbps, a very peculiar number that I’ve not seen for a long time. It’s reasonable to claim US broadband speed averages 55 Mbps – 67 Mbps. The former is the Speedtest.net estimate and the latter is the Akamai observation of “Average Peak Connection Speed” of broadband networks.

The Network Freeway

It’s wrong, but understandable, for unskilled journalists to claim the US average is as low as 15.3 Mbps by misinterpreting Akamai’s “Average Connection Speed” as a measure of a broadband pipe that carries multiple connections at the same time. This is like saying I-70 is a 65 MPH freeway because each of its four lanes is limited to that speed. But the freeway capacity is 4 x 65 MPH because its capacity is the sum of the capacities of its lanes. This only matters when people compare the quality of today’s networks to some future goal or standard, such as gigabit networks.
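The lane arithmetic can be written out as a short Python sketch. It models only the analogy in this post, with each lane standing in for one connection sharing a broadband pipe. The function names are hypothetical, and this is not a description of how Akamai computes its figures.

```python
def average_connection_speed(per_connection):
    """The 'per lane' figure: the mean speed of the individual connections."""
    return sum(per_connection) / len(per_connection)

def aggregate_capacity(per_connection):
    """The 'freeway' figure: the sum of what all the lanes carry at once."""
    return sum(per_connection)

# Four lanes, each limited to 65, as in the I-70 example.
lanes = [65, 65, 65, 65]

per_lane = average_connection_speed(lanes)
freeway = aggregate_capacity(lanes)
print(per_lane, freeway)
```

Quoting the per-lane number as if it were the freeway number understates capacity by a factor equal to the number of lanes in use.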

But when skilled advocates like EFF’s Corynne McSherry reach back to some prior era to pull out a tiny number to stand in for today’s networks, there seems to be an intent to deceive; but it could just be sloppiness. An upbeat report by Viavi Solutions says the global average broadband speed is a mere 6.3 Mbps, but that’s not true either. This is Akamai’s “per lane” speed, not their true broadband speed, which is 34.7 Mbps (Akamai State of the Internet Report, Q1 2016, page 3.)

The Rosy Glow of Network Quality

It’s amusing that Viavi used the wrong figure in a report that lights the US with a rosy glow. Unlike the gloom and doom that EFF invoked to justify the takeover of local networks by city governments, Viavi asserts that 61% of the world’s gigabit deployments are inside the US. While deployments can be either large or small, that’s still an impressive figure that puts us in the gold medal position, well ahead of the pack:

North America has the largest share of announced gigabit deployments, with 61 percent. Europe is second with 24 percent. Asia, Australasia, Middle East, Africa and South America share the remaining 15 percent of deployments.

Gigabit Doesn’t Mean Fiber

Perhaps the most significant news from the Viavi report is the revelation that gigabit networks don’t need to be exclusively fiber optic:

- Fiber dominates: 85 percent of currently known gigabit deployments are based on optical fiber connectivity. 11 percent are based on Hybrid-fiber coax (HFC), a broadband technology that combines optical fiber and coaxial cable, commonly employed by cable providers.

- Wireless gigabit is already here: Nearly 3 percent of known gigabit deployments are based on LTE-A, a modified version of LTE which is gigabit-capable.

- 5G is coming fast: 37 wireless carriers have announced plans for 5G networks. Five of them plan to have 5G networks built as early as 2017.

So we can reach the magic gigabit threshold in a variety of ways.

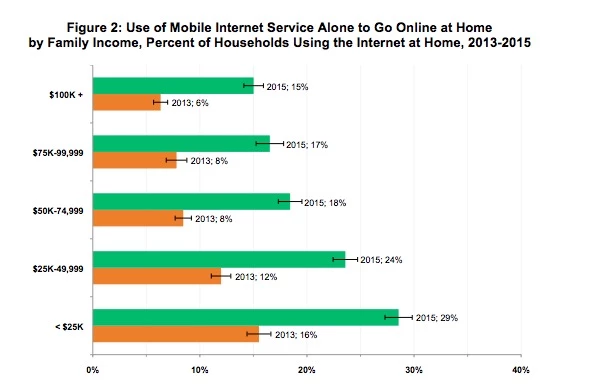

Rich Cut the Broadband Cord

But we don’t really need wired gigabit networks today, as we see from the intriguing Census Bureau data on broadband cord-cutting. The Washington Post’s Brian Fung summarizes the Census Bureau report:

For the most part, America’s Internet-usage trends can be summed up in a few phrases. The Internet is now so common as to be a commodity; the rich have better Internet than the poor; more whites have Internet than do people of color; and, compared with low-income minorities, affluent whites are more likely to have fixed, wired Internet connections to their homes.

But it may be time to put an asterisk on that last point, according to new data on a sample of 53,000 Americans. In fact, Americans as a whole are becoming less likely to have residential broadband, the figures show: They’re abandoning their wired Internet for a mobile-data-only diet — and if the trend continues, it could reflect a huge shift in the way we experience the Web.

Going Forward While Going Backward

So here’s the oddity: The US is leading the world in ultra-high-speed broadband network deployments at a time when the world’s broadband use is shifting from wired networks to wireless ones. This is a global trend that actually started in nations with all-fiber networks such as South Korea and Japan. Meanwhile, some advocates of community broadband still say we aren’t building high capacity wired networks as fast as we should. But others are waking up to the reality that wireless networks have more overall utility than wired ones, and lower costs to build as well:

You can deliver hundreds of megabits, even a gig, through fixed wireless into urban and rural areas. The economics of wireless as well as the ability to deliver a gig makes the case for wireless/wired hybrid infrastructure — and Google is in the game.

Thank You Google

So we can thank Google for carrying this message to the municipal broadband subculture by exploring the wireless option. This came about when Google decided to delay its Silicon Valley fiber project:

Google has told at least two Silicon Valley cities that it is putting plans to provide lightning-fast fiber internet service on hold while the company explores a cheaper alternative.

The news comes nearly three months after San Jose officials approved a major construction plan to bring Google Fiber to the city. Mountain View and Palo Alto also were working with Google to get fiber internet service but said Monday that the company told them the project has been delayed.

“It was a surprise,” said Mountain View public works director Mike Fuller, who added that Google told city officials the company was still committed to providing fiber service in Mountain View. “We didn’t expect it because we were working on what was their plan at the time.”

This is what a major recalibration looks like. Google was extremely enthusiastic about fiber to the home until it wasn’t. And now it isn’t.

It’s the Servers, Stupid

Jeff Hecht, a journalist who has made a career out of covering developments in fiber optic networking, challenges networking companies to lay more fiber and researchers to develop means of making fiber more efficient. While these things are important, they’re not crucial to the realities we face today. Hecht’s argument revolves around the streaming meltdown HBO’s Game of Thrones faced earlier this year:

On 19 June, several hundred thousand US fans of the television drama Game of Thrones went online to watch an eagerly awaited episode — and triggered a partial failure in the channel’s streaming service. Some 15,000 customers were left to rage at blank screens for more than an hour.

The channel, HBO, apologized and promised to avoid a repeat. But the incident was just one particularly public example of an increasingly urgent problem: with global Internet traffic growing by an estimated 22% per year, the demand for bandwidth is fast outstripping providers’ best efforts to supply it.

Sorry, but the GoT meltdown had nothing to do with bandwidth. HBO’s problem was traced back to the video servers at MLB Advanced Media that had the contract to deliver HBO’s video streams. Users of other services were not affected:

Users streaming Game of Thrones on two other OTT outlets — its TV Everywhere component, HBO Go, and its linear channel on Sling TV — did not report streaming issues during the episode.

HBO Now’s periodic outages notwithstanding, spikes in traffic demand are a continued problem with many popular services. Sling TV suffered outages during key March Madness games, sparking similar outrage from sports fans.

The issue was corrected in less than an hour, which proves it didn’t come about from insufficient fiber. MLBAM didn’t go out and lay more fiber optic cable to correct their problem; they turned on more video servers. So this wasn’t so much a dearth of network bandwidth as a shortage of computation resources at the company that brings me my baseball games over the Internet.

While regular upgrades to the Internet’s fiber backbone are necessary, they’re also routine. And no, the “world wide wait” of the 1990s was not the fault of networks as Hecht claims; it was caused by a bad design choice in the first version of the Web protocol that was corrected in the 1.1 release of HTTP.
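This post doesn’t name the design choice, but one widely cited difference is that early HTTP opened a fresh TCP connection for every object on a page, while HTTP/1.1 made persistent connections the default. The toy Python model below counts round trips under that assumption. It is a rough sketch, not a protocol simulation: it ignores transfer time, TCP slow start, and parallel connections, and the function name `round_trips` is hypothetical.

```python
def round_trips(n_objects: int, persistent: bool) -> int:
    """Count network round trips to fetch n_objects one after another.

    Assumes one round trip for the TCP handshake and one per
    request/response. This is a simplification for illustration only.
    """
    handshake = 1
    request = 1
    if persistent:
        # One handshake, then every request reuses the same connection.
        return handshake + n_objects * request
    # A new connection, and so a new handshake, for every object.
    return n_objects * (handshake + request)

# A hypothetical page with 20 embedded objects.
without_reuse = round_trips(20, persistent=False)
with_reuse = round_trips(20, persistent=True)
print(without_reuse, with_reuse)
```

Under these assumptions, reusing the connection nearly halves the waiting on a page with many objects, and no new fiber is involved.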

Going Faster in the Wrong Direction

As long as policy priorities are set by people who don’t understand user preferences and technical realities, there will be pressures to enact counter-productive policies. Perhaps the starkest example of this trend is the “network compact” idea promoted by FCC Chairman Tom Wheeler. He reiterated this notion in a closed-door meeting sponsored by the Aspen Institute on Sunday:

“For the past almost eight years, the FCC has sought to confront network change head-on; to harness the network revolution to encourage economic growth, while standing with those who use the network as consumers and innovators,” he said.

Wheeler used his familiar refrain about the “network compact,” which he calls “the responsibilities of those who build and operate networks.” He focused on the need for interconnection, which he characterized as “access on broadband networks” not just “access to” the networks.

“The ability to interconnect networks becomes crucial when the most important network of our day, the Internet, is but a collection of interconnected networks.”

Wheeler’s “network compact” is an attempt to make the terms of the 1913 Kingsbury Commitment a permanent cornerstone of broadband policy, but it doesn’t fit today’s realities. Kingsbury was a consent agreement that gave the old AT&T Bell System permission to hold a monopoly over US telephone service in return for some give-backs such as universal phone service. If we applied this framework to today’s mobile networks there would only be one network, and it would be managed by the FCC in a way that made progress as slow as possible. Instead of looking forward to 5G, we would probably be considering whether we could replace our analog mobile network with a digital one.

Kingsbury also used the monopoly carrot to wring favors from the Bell System that it would not otherwise have offered. Because the FCC has no such carrot today, it needs to look for other incentives, such as cash subsidies, to promote those of its goals that don’t have market support.

Taking Stock

So it’s probably better to take stock of where we are: a nation with multiple mobile networks that are constantly improving to such an extent that they’re actually replacing wired networks for residential broadband service. The fact that wireless networks don’t have as much capacity as fiber doesn’t really matter as long as they have enough to get the job done with room to grow. But we can’t digest that fact until we’re willing to recognize the facts of engineering and consumer preference in today’s reality.

Will Mr. Wheeler listen to what consumers are saying or simply continue to wrap himself up in the comfort of his quaint historical homilies? And if he does wake up to the modern realities of Internet life, can he bring his public interest pals along? We shall see what we shall see, but it looks like the FCC is still pushing us in the direction of four car garages.

Richard,

It would appear that interconnection leading to greater ecosystem network effect is beyond your comprehension or desire.

Such a shame,

Michael

You mean the interconnection that was happening 20 years before Tom Wheeler knew there was an Internet?

Tom has been aware of the benefits of interconnection and cooperation long before you Richard. I have a funny story for you about SMS interoperability when you have a moment. But he knew about it in the earliest days of cable, too.

I am talking about the interconnection the Bell System DID NOT want in Kingsbury. And yes, the interconnection that was mandated in 1984, revoked in 2002-2005, and then single-handedly resurrected by Steve Jobs in 2007. That type of interconnection. And there are many more examples, both mandated and market driven, that have proven the benefit to new entrants and incumbents alike.

But as I said, you’re not comprehending or really wanting to see that happen.

Cheers,

Michael

Michael, it’s good to see that your smart-ass tone hasn’t changed. Wheeler left NCTA before there was a cable modem and left CTIA before there was an iPhone. Most of what we take for granted in today’s Internet appears to be outside his scope. But if you can prove me wrong, I’d be happy to see your evidence.

Richard, you deserve it! Because you really are ignorant of the interconnections about which I speak. Such things as pole attachments, number portability, sms interworking are interconnections. Tom had a hand in all of those. And they were extremely generative and saved the service providers he represented from bankruptcy; incumbent and new entrant alike. As I said there are many, many more examples. Really wish you would learn. Do the world and your clients a lot of good to help them out of their silos.

None of those are what we mean when we talk about interconnection in the Internet context. Many are interesting in their own right, but they aren’t interconnection.

Richard, cause and effect is an enormously powerful force in the informational stack. Viewing each boundary and layer discretely is a mistake. The free “internet” interconnection occurred because WAN prices had become commoditized by the early 1990s even as data was still a pimple on the voice elephant’s behind. Voice interconnection (aka equal access) was the biggest factor in the commercial scaling of the internet. So in a market of “trusted” peers, who cared about settlements. Well, we now are seeing the outcome of that thinking: silos everywhere and every actor for themselves. Far from generative and open system the “internet” evangelists touted in the 1990s.

So when a butterfly flaps its wings in China, a hurricane forms in the Gulf of Mexico and Tom Wheeler made it all happen?

Don’t be absurd. I’m merely trying to school you as to what constitutes interconnection. Your criticisms of his background and accomplishments show your ignorance.

So far you haven’t touched the topic of interconnection. Let’s start with Internet Exchanges. There used to be something called NAPs, set up by Al Gore to be controlled by carriers and to take over from NSFNet. Then there were MAEs and carrier-neutral IXs. The first carrier-neutral IX is PAIX in Palo Alto. What street is it on?

I referenced those earlier. Data traffic was still relatively small back then if you remember. I started analyzing the IXCs in 1990 and was writing quite a bit about data networks and nascent wireless networks. So I think I’ve got my facts straight. But you have clearly missed my point that interconnection exists across many layers and boundary points; not just at NAPs.

Kingsbury was all about pushing the WAN/MAN interconnection point out 50 miles. Why? Because even back then they knew what wireless could be capable of. That’s where we took the wrong fork in the road and only got back on in 1984.

Thanks for sharing.

The average can be really high if you exclude the customers locked into dialup and those on dsl and then others with speeds less than 50 meg, plus ignore the tens of millions who can’t afford $100 plus per month.

Hey, I’m sure Trump thinks poverty means earning only $250,000 per year.

The average broadband plan costs about $45/month, and there are certainly many that are a lot cheaper. Dial-up accounts for less than 3% of connected homes in the US, and most of those could use some form of broadband if they wanted. In most cases, DSL can go up to 40 Mbps or higher. In real dollar terms, broadband costs about as much, if not less, than telephone service did at the time of the Bell System breakup.

I don’t know what any of this has to do with Trump.