Wireless ISPs Manage Video Streams Under High Load

Network management researchers at Northeastern University and the University of Massachusetts Amherst believe they can detect certain forms of network management. According to their blog, they’ve collected data on instances of video stream management on 25 different wireless networks in the US and elsewhere.

Their data are incomplete, but they may be reasonably valid. Wireless carriers disclose that they reduce the bit rates and resolutions of video streams under congestion, which is what the data appear to show.

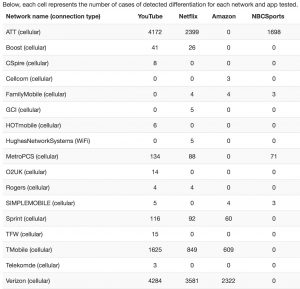

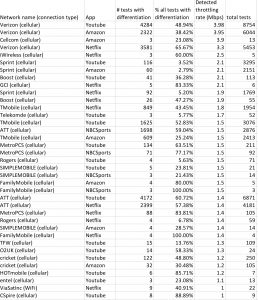

The researchers (led by David Choffnes, a longtime student of BitTorrent management) report that 5% of the nearly half-million streams generated with their test app, Wehe, were throttled. They break out some throttling percentages by application and carrier, which is useful.

Full Disclosure

Smaller networks show the greatest percentage of throttled streams: the top five range from 88% to 100%. Major carriers Verizon, AT&T, and T-Mobile appear to manage video between half and two-thirds of the time, and Sprint manages it very infrequently. Verizon’s “throttled” speed is 4 Mbps, which is not really throttling at all.

On its face, the study simply shows that carriers do what they tell customers they’ll do: reduce video streaming bandwidth under load to allow other applications to work well. So the stories about the Choffnes research that claim it shows net neutrality violations aren’t entirely true.

The defunct Title II order allowed reasonable network management and required disclosure of management practices. And indeed, these practices are disclosed by Verizon, AT&T, T-Mobile, and others.

Subtle Changes

Most mobile users who experience video stream management don’t even notice it. As Bloomberg puts it:

Terms-of-service agreements tell customers when speeds will be slowed, like when they exceed data allotments. And people probably don’t notice because the video still streams at DVD quality levels.

Carriers manage video in the first place because video streamers don’t always reduce resolution for small-screen devices. They should, because these devices are generally attached to the most technically constrained networks. It’s easy to add capacity to wired networks, but 4G wireless capacity depends on licensed spectrum.

Converting Research to Clickbait

Because the researchers don’t show proportions of throttled vs. non-throttled streams on their front page (you have to click the “detailed results” button to see them), some have leapt to the conclusion that YouTube and Netflix have been singled out for discrimination.

The Bloomberg headline is “YouTube, Netflix Videos Found to Be Slowed by Wireless Carriers”; Mobile Marketer’s is “Study: YouTube, Netflix videos slowed by wireless carriers”; The Verge says: “Netflix and YouTube are most throttled mobile apps by US carriers, new study says”.

What these headlines lack in originality, they make up in consistency. But as the graphic shows, Wehe actually claims that many other video streams are throttled as well.

Video and Congestion

The common denominator is actually video and congestion, not Netflix and YouTube. It just so happens that there’s a video duopoly in the US, so any study of video management by source is naturally going to rank them highest.

So the articles could just as easily have said “YouTube and Netflix dominate US market for video streaming”. But that doesn’t attract eyeballs because it’s something everyone knows.

Part of the blame for the confusion over Choffnes’ findings falls on the researcher himself: he failed to show proportions prominently, reporting only raw counts clearly. This omission led to clickbait headlines from bloggers who were apparently too lazy to click one button for details.
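The difference between ranking by raw counts and ranking by proportions is easy to illustrate. The sketch below is purely hypothetical: the app names (`BigStreamer`, `NicheVideo`) and the tallies are invented for illustration and are not Wehe data. It shows how a high-volume service can top a raw-count table while a small service throttled far more often, relative to its own tests, tops a proportion table.

```python
from typing import List, NamedTuple


class AppResult(NamedTuple):
    """Per-application test tallies (hypothetical record format)."""
    app: str
    throttled: int  # tests flagged as differentiated
    total: int      # all tests run for this app


def rank_by_count(results: List[AppResult]) -> List[str]:
    """Order apps by the raw number of throttled tests, highest first."""
    return [r.app for r in sorted(results, key=lambda r: r.throttled, reverse=True)]


def rank_by_rate(results: List[AppResult]) -> List[str]:
    """Order apps by the share of their own tests that were throttled, highest first."""
    return [r.app for r in sorted(results, key=lambda r: r.throttled / r.total, reverse=True)]


# Invented numbers chosen only to show the effect; NOT taken from the study.
sample = [
    AppResult("BigStreamer", throttled=900, total=10_000),  # 9% throttled
    AppResult("NicheVideo", throttled=90, total=300),       # 30% throttled
]

print(rank_by_count(sample))  # ['BigStreamer', 'NicheVideo']
print(rank_by_rate(sample))   # ['NicheVideo', 'BigStreamer']
```

A table of raw counts rewards whoever generates the most tests, which in the US means the dominant video services.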

Company Clarifications

Because reporters chose to go the clickbait route, the companies accused of singling out YouTube and Netflix for abuse had to issue denials. Verizon had to say: “We do not automatically throttle any customers;” AT&T was forced to assert: “…unequivocally we are not selectively throttling by what property it is.” The detailed data show these representations are true.

These denials were necessary only because the data were so consistently misinterpreted. That says a lot about how tech policy blogs are missing the boat.

While it’s true that tech issues can be hard to understand, the data reported by the Wehe website are not that complicated. But advertising-supported media always prioritize eyeballs over content quality.

First, Do Not Mislead

Choffnes misled the bloggers by withholding some data and making other data hard to find. He didn’t disclose the number of unmanaged YouTube and Netflix streams his application tested, for example. Putting the data into a spreadsheet, I find 22,525 managed streams out of 447,791 total streams.
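The arithmetic behind the 5% figure can be checked from those two spreadsheet totals alone. This quick sketch uses only the counts given above:

```python
managed = 22_525   # managed (throttled) streams, from the spreadsheet totals
total = 447_791    # all streams tested

unmanaged = total - managed
share = managed / total

print(unmanaged)        # 425266
print(f"{share:.2%}")   # 5.03%
```

In other words, roughly 19 of every 20 tested streams were not managed at all.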

His method is also under-inclusive. Rather than measuring real video streams, Wehe measures the performance of simulated video streams. So one potential source of error is the accuracy of the simulation.

Unlike approaches taken by other researchers, Choffnes creates synthetic video streams by embedding identifiers in packets filled with random data. These synthetic streams are not recognized as video streams by all ISPs.
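To make the idea concrete, here is a minimal sketch of a payload built the way the paragraph above describes: an identifier followed by random filler. It is not Wehe’s code. The identifier `video.example-cdn.test` is hypothetical (`.test` is a reserved top-level domain), and `signature_classifier` is a toy stand-in for an ISP classifier that matches on a byte signature only.

```python
import os


def make_synthetic_payload(identifier: bytes, size: int) -> bytes:
    """Build one packet payload: an identifier followed by random filler bytes.

    Hypothetical illustration of the approach described in the text,
    not the researchers' implementation.
    """
    if size < len(identifier):
        raise ValueError("payload size must be large enough to hold the identifier")
    filler = os.urandom(size - len(identifier))  # random bytes, no real video content
    return identifier + filler


def signature_classifier(payload: bytes, signature: bytes) -> bool:
    """Toy classifier: labels a payload as video if it contains the signature."""
    return signature in payload


# Hypothetical identifier; ".test" names no real service.
ID = b"video.example-cdn.test"
packet = make_synthetic_payload(ID, 1200)

print(len(packet))                       # 1200
print(signature_classifier(packet, ID))  # True
```

A classifier this simple will always recognize the stream. A classifier that also looks at payload structure or flow behavior sees only random bytes after the identifier, which is one plausible reason some ISPs don’t treat these synthetic streams as video.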

Improving the Test

So it would be good for the researchers to disclose full data and to develop more faithful simulations. The best approach is to measure real streams, but that approach has its own potential for error, because the results depend on the resources of the test system.

It would also be useful to know whether differentiation takes place at all times or only under congestion conditions. Choffnes says he’s interested in doing that, but I see no evidence that he actually has.
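One way to frame that question is to split the throttling rate by network condition. The sketch below assumes a hypothetical record format of `(congested, throttled)` pairs, where the congestion label comes from some external measurement that the current dataset does not include. The six records are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def throttle_rate_by_condition(tests: Iterable[Tuple[bool, bool]]) -> Dict[bool, float]:
    """Share of throttled tests, grouped by whether the network was congested.

    Each record is (congested, throttled); this format is an assumption.
    """
    counts: Dict[bool, List[int]] = defaultdict(lambda: [0, 0])  # condition -> [throttled, total]
    for congested, throttled in tests:
        counts[congested][0] += int(throttled)
        counts[congested][1] += 1
    return {cond: t / n for cond, (t, n) in counts.items()}


# Invented records for illustration only.
records = [(True, True), (True, True), (True, False),
           (False, False), (False, False), (False, True)]
rates = throttle_rate_by_condition(records)
print(rates)  # congested rate is twice the uncongested rate in this toy data
```

If the two rates come out about the same, differentiation is happening at all times; if throttling concentrates in the congested group, it looks like load-triggered management of the kind carriers disclose.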

Data on prevailing network conditions would be useful. In fact, such data are the minimal disclosure necessary for making meaningful inferences from a dataset like this one. The dataset also needs about ten times as many observations: the best-studied example, YouTube over Verizon, has only 8,754 tests, and some networks have as few as three.
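Why sample size matters can be sketched with the standard normal-approximation margin of error for a proportion. The choice of p = 0.5 (the worst case) and a 95% z-value of 1.96 are assumptions for illustration, and the approximation is not trustworthy at very small n; at n = 3 it mainly shows that the estimate is nearly meaningless.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion."""
    return z * math.sqrt(p * (1 - p) / n)


# Three tests, the YouTube-over-Verizon count, and ten times that count.
for n in (3, 8_754, 87_540):
    print(n, round(margin_of_error(0.5, n), 4))
```

Because the margin shrinks with the square root of n, a tenfold increase in observations narrows it by a factor of about 3.16, which matters most for the networks with only a handful of tests.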

Raising Observations to the Status of Science

Scientists don’t collect data for the fun of it; we do it to answer questions and to validate hypotheses and conjectures. In this instance, the hypothesis is: “ISPs sometimes slow down video streams,” and the observations confirm it.

While this is one of the basic questions in the field of network management detection, it’s not one of the key ones. Regulators are interested in knowing whether management practices conform to disclosures and to regulations generally, but this is a hard area of study.

The Wehe data don’t tell us whether the observed behavior is consistent with company disclosures or general net neutrality conventions. While we don’t expect legal opinions from network performance scholars, it’s important to know more about the triggers of network management.

To make these observations worthy of consideration by policy makers, they need to be much more refined. Bloggers will still clickbait the story, but more meat in the dataset limits the damage they can do.