The Trouble with Spectrum

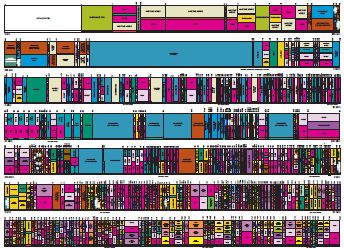

You can get a good idea of the problem with America’s current spectrum situation from the NTIA Spectrum Map (click through for a full-size picture).

From the initial allocations made back at the beginning of time (1934) until quite recently, the thinking behind spectrum allocations was guided by the unspoken assumption that technology doesn’t change. Proponents of the spectrum commons are right that advances in technology since the early days have been so dramatic – the analog-to-digital transition by itself is staggering – that the assumptions that reigned in the 1930s are no longer relevant. Almost right, that is.

In a world of analog devices, the logic for stove-piping spectrum into separately owned and operated frequencies, with guard bands in between and reuse governed by power limits, propagation models, and the effectiveness of filters, was compelling. We’re transitioning to a regime in which most uses will be digital (not all; there are always going to be some things, like radio astronomy, where a purely digital approach doesn’t work), but we’re not there yet. In the interim, analog rules about power limits and frequencies are still extremely relevant.

The analog rules will continue to be relevant in the future, but in a modified form. Digital communication over the air still has an analog component, and assumptions about the analog part of the picture, in terms of interference and reuse, are all over the current crop of wireless communication standards. Wi-Fi access points don’t coordinate with each other, except in the very minimal way that APs announce connections by older devices to each other. They don’t negotiate with each other about the sharing of common frequencies; they simply try to avoid frequencies that carry a lot of traffic.

The load-sensing logic in Wi-Fi access points is typically less than ideal. If you think about how to assess the load on a set of Wi-Fi channels, you would begin by counting the packets per second on each of the relevant channels, then move to the least heavily loaded one, and then periodically re-check for serious changes in conditions. That’s a common technique for enterprise-grade Wi-Fi systems such as the ones sold by Cisco, Aruba, and Trapeze (Meru does it in a completely different way), but it’s foreign to consumer-grade Wi-Fi routers, which tend to count the number of access points on each channel or, even worse, to measure analog energy on each channel.
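The packet-counting approach described above can be sketched in a few lines of Python. This is only an illustration, not how any particular vendor does it: the function names, the 25 percent `switch_margin` (a stand-in for "serious changes in conditions"), and the idea that the AP already has a per-channel packet count in hand are all assumptions made for the example.

```python
from typing import Dict


def packets_per_second(packet_counts: Dict[int, int],
                       window_seconds: float) -> Dict[int, float]:
    """Turn raw per-channel packet counts into a packets-per-second load figure."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return {ch: count / window_seconds for ch, count in packet_counts.items()}


def least_loaded_channel(load: Dict[int, float]) -> int:
    """Pick the channel with the lowest load; ties go to the lowest channel number."""
    return min(load, key=lambda ch: (load[ch], ch))


def recheck(current: int, load: Dict[int, float],
            switch_margin: float = 0.25) -> int:
    """Periodic re-check: move only if another channel is clearly better.

    switch_margin is a hypothetical tuning knob: the best channel must carry
    at least that fraction less traffic than the current one before the AP
    bothers to move, so small fluctuations don't cause channel hopping.
    """
    best = least_loaded_channel(load)
    if best == current or current not in load:
        return best
    if load[best] < load[current] * (1.0 - switch_margin):
        return best
    return current


# Packets seen on channels 1, 6, and 11 during a 10-second survey (made-up numbers).
load = packets_per_second({1: 1200, 6: 300, 11: 900}, window_seconds=10.0)
channel = recheck(current=1, load=load)
```

The margin is the design choice worth noticing: without it, two channels with nearly equal traffic would have the AP bouncing back and forth, which is exactly the disruption a re-check is supposed to avoid.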

Why so primitive? The early Wi-Fi standards didn’t have a way for an AP to change channels without disrupting in-progress communication, so the load assessment had to try to guess which channel was likely to be most loaded at the worst time of the day rather than simply measure which one was most heavily loaded at the time the measurement was taken. The idea was that an AP installed by a professional during the 9-5 workday still had to function well during prime time. It makes a certain amount of sense, given the limitations on a more dynamic approach.

The current crop of open spectrum standards don’t include coordination, bargaining, and scheduling, and they don’t motivate users to be efficient. This oversight can be overcome, but it’s hard.

In the meantime, open spectrum approaches emphasize the hunt for unused spectrum, and even for that to be effective, the systems need the ability to recalibrate as conditions change. Neither of these things, bargaining and recalibration, is impossible, but there needs to be motivation for adding a layer of complexity to wireless systems to make them happen.

Getting back to the spectrum map, if we had wireless systems that were fully capable of bargaining and recalibration, we would have a way to transition from the current status quo of Swiss-cheese spectrum assignments to a world where the spectrum map is much more uniform. The current map would describe primary uses, and there would be a secondary map that would kick in when the primary use wasn’t happening. Probably 100 years from now, the map will be all one color and the FCC will be out of business. It’s getting there that’s the trick, as each of the historic allocations needs to be phased out one by one.

And what happens to spectrum that’s been sold at auction to a good-faith bidder who’s counting on the ability to use it to make money, repay the auction costs, cover equipment costs, and the like? Such bidders can’t be left out in the cold, of course.

Opportunistic systems and frequency avoidance don’t get us where we need to be, however. So rather than declaring all spectrum allocations obsolete – which is simply jumping the gun – the open spectrum approach would be more credible if it declared the intention of making them obsolete. That would generate some real progress and not prevent people from getting the benefits of wireless networking today.