EU Parliament Avoids Disaster

The last blog post covered the similarities between the recently approved EU open Internet framework’s “specialized services” exception and the FCC’s comparable “Non-BIAS Data Services” exception to its 2015 Open Internet Order. I found that neither exception permits “Internet fast lanes” because both clearly apply only to services separate and apart from ordinary Internet access. The EU defines specialized services as “services which are not internet access services” and the FCC defines the services somewhat more loosely as “not used to reach large parts of the Internet” and subjects them to two other restrictions. Despite these efforts to clearly distinguish specialized services from generic Internet service, advocates in both the US and the EU call them “Internet fast lanes.” No technical justification for specialized services can stand up to a non-factual criticism, so I won’t go any farther down that path.

Europe’s net neutrality advocates supported four amendments to the regulations, best described in a Medium post by Stanford Law School professor Barbara van Schewick. During the development of the FCC’s 2015 order, van Schewick had more than 150 meetings at Congress, the FCC, and the White House regarding the order’s construction. Consequently, it’s worthwhile to see how she understands the issues as that understanding had an outsized role in shaping the regulations we all live under when we use the Internet in the US. The four amendments crystallize the essence of her views, so I’ll walk through them one by one.

Amendment 1, Specialized Services: “The Parliament should adopt amendments that refine the definition of specialized services to close the specialized services loophole and keep the Internet an open platform and level playing field.”

This is less than helpful because it doesn’t propose statutory language. Given the withering criticism of the current exception (“The proposal allows ISPs to create harmful fast lanes online…fast lanes would crush start-up innovation in Europe and make it harder for European start-ups to challenge dominant American companies…fast lanes would harm all sectors of the economy…”) and its actual text, telling the EU Parliament to “just go and fix it” is cowardly. It’s easy to criticize, but harder to lay out a clear statement about what the regulations should say.

Van Schewick has never been bashful about telling the FCC what to do – 150 meetings after all – so it’s hard to comprehend her sudden modesty unless it reflects the difficulty of defining an exception that serves the Internet’s interest and placates critics at the same time. Perhaps it’s simply an impossible mission and any exception, no matter how narrow, will be blasted for its corrosive effects on all sectors of the economy.

Amendment 2, Zero-Rating: “The parliament should adopt amendments that make it clear that member states are free to adopt additional rules to regulate zero-rating. This would bring the proposal in line with the negotiators’ stated intent and empower individual member states to address this harmful practice in the future.”

Again, the proposal is too cowardly. Given that van Schewick’s analysis of zero-rating claims: “Zero-rating is harmful discrimination…The proposal does not clearly ban zero-rating…Zero-rating results in discrimination…Zero-rating distorts competition…Zero-rating harms users…Zero-rating harms innovation and free speech…”, it’s absurd not to propose an amendment “clearly banning” it. Proposing that the matter should be punted to the member states suggests van Schewick doesn’t really believe her own analysis. But this is a question of economics, so there’s nothing more to be said about it.

Things get interesting in the final two amendments.

Amendment 3, Class-based differentiation: “The Parliament should adopt amendments that prohibit ISPs from differentiating among classes of applications unless it’s necessary to manage congestion or maintain the security or integrity of the network.”

This is where van Schewick goes totally off the rails. She acknowledges that applications (and users) have different service quality needs from the network, but doesn’t explore the implications of that fact well enough; she says “For example, Internet telephony is sensitive to delay, but e-mail is not, so an ISP could give low delay to Internet telephony, but not to e-mail,” but then goes straight to the fear factor by highlighting all the ways ISPs could use differentiation power to go wrong.

The major error here is the failure to realize that ISPs and the routers they use do not have the ability to provide an infinite number of customized services to applications; they do however have a very real ability to classify applications into a small number of classes (on the order of 2 to 50) and treat their traffic accordingly. You can’t argue that differentiation is good and classification for the purpose of differentiation is bad.

She produces a long parade of horribles, beginning with ISPs potentially withholding differentiation for applications that compete with the ISP’s for-fee services: “an ISP could offer low delay to online gaming to make it more attractive, but it could decide not to offer low delay to online telephony because that would allow Internet telephony to better compete with the ISP’s own telephony offerings.”

I think she may have a point here, insofar as I haven’t seen US ISPs clamoring to offer a for-fee VoIP quality booster service to either their customers or to services such as Vonage and Skype. But it’s hard to determine whether the absence of this service offering is an indictment of the ISPs, or of the advocates who denounce “fast lanes,” or a response to the unwillingness of VoIP providers to pay additional interconnection fees. Even insanely profitable services such as Netflix complain about relatively low fees for interconnection.

She also highlights various kinds of inadvertent misclassification, some obscure and others historical issues that have been resolved:

- UK ISPs throttle P2P, and this caused problems for on-line gamers in the past;

- ISPs in Canada used deep packet inspection to throttle P2P during primetime, but didn’t distinguish P2P streaming from its more common uses;

- Van Schewick claims classification can only be triggered by deep packet inspection and therefore can’t identify encrypted streams.

Nobody ever said traffic classification was easy, but the constructive approach to a complex engineering process that has bugs is to repair it rather than ban it. We can’t very well complain about ISPs refusing to accelerate VoIP if acceleration is unlawful. The UK case was resolved by a roundtable of ISPs and gaming companies, as she mentions.

It’s not hard to imagine an Internet standard by which applications can explicitly tell ISPs how to classify traffic; there are already three of them, called the Internet Protocol Service Classes, Integrated Services, and Differentiated Services. No invention is necessary; all that’s missing is regulator permission, a business demand, and revised interconnection agreements. We have the technology.
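To make the Differentiated Services point concrete, here is a minimal Python sketch of an application marking its own traffic. The DSCP codepoints are the standard ones; the helper names `dscp_to_tos` and `open_marked_udp_socket` are my own illustrations, and the sketch assumes a platform where Python exposes `socket.IP_TOS` (Linux and macOS do). Whether any network along the path honors the marking is a business and regulatory matter, not a technical one.

```python
import socket

# Standard Differentiated Services codepoints (6-bit values).
DSCP_BEST_EFFORT = 0   # default forwarding
DSCP_AF41 = 34         # Assured Forwarding class 4, low drop precedence
DSCP_EF = 46           # Expedited Forwarding, commonly used for voice


def dscp_to_tos(dscp: int) -> int:
    """Place a 6-bit DSCP value in the upper six bits of the IPv4 TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits")
    return dscp << 2


def open_marked_udp_socket(dscp: int) -> socket.socket:
    """Hypothetical helper: open a UDP socket whose packets carry `dscp`.

    Assumes socket.IP_TOS is available on this platform.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock
```

A VoIP client would call `open_marked_udp_socket(DSCP_EF)` and send as usual; the request for low delay rides along in every packet header, with no committee involved.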

We could also improve the process by which applications make their service needs known to ISPs, which would address the fear that startups don’t have the resources to work with ISPs for mutual benefit. It can simply be automated, as peering requests are today. The point is that classification is done today by algorithms, not by committees who scheme about how to screw users; there may be such committees, but there are also algorithms. The Internet is large.

Some of her arguments are simply frivolous:

“File uploads are generally considered not to be sensitive to delay. If you are uploading your hard disk to the cloud to do a backup, you will not mind that ISPs give file uploads lower priority. But if you are a student uploading homework right before it’s due, or a lawyer filing a brief before the deadline, or an architect submitting a bid, then the speed of this upload is your highest priority.”

This is a very silly point that illustrates how poor van Schewick’s understanding of traffic engineering is. The difference between the student/lawyer uploads and the backup is the duration and speed at which packets are offered to the network. Common management systems such as the Comcast Fair Share system easily distinguish them.

Because of the problems with encryption, traffic classification is often done on the basis of traffic signatures rather than packet contents; by “signatures” I mean the statistical distribution of packets in a flow by size, frequency, duration, and timing. Here are some tell-tale signs that encryption can’t hide:

- Email: A single transfer from one IP address to another of short duration optionally followed by one or more somewhat longer attachment chunks;

- Twitter: a very short transfer from a sending IP to Twitter; in the reverse direction, a periodic transfer of longer duration from Twitter to the user;

- Web page: a transfer of roughly 1.5 MB through 4 – 8 TCP connections in parallel.

- P2P file transfer: More upload than download, to and from a swarm of partners.

- P2P streaming: like P2P file transfers, but more closely synchronized in time.

- VoIP: bilateral streams of short packets on regular intervals.

- Video calling: VoIP streams combined with similar patterns of larger packets.

- Video streaming: Chunks of packets from one source to one destination.

- Disk backups: Large packet streams at low duty cycle running for a very long time.

- Homework and legal briefs: See email.

- Gaming: small upload packets combined with both very small and very large download packets, persisting for hours and often late at night.

- Patch updates: like disk backups in reverse, long-running, potentially long transfers with a low duty cycle.

So here you have a dozen traffic classes easily distinguished even when packets are encrypted. This doesn’t prove that misclassification will never happen, but it does suggest misclassification is a manageable problem; it had better be, since ISPs all over the world are doing it. See the BITAG report on traffic differentiation.
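As a rough illustration of signature-based classification, the sketch below sorts flows into a few of the classes listed above using only statistics visible on encrypted traffic. Everything in it is an assumption made for the example: the `FlowStats` fields, the class labels, and especially the numeric cutoffs, which are invented and not taken from any ISP’s system.

```python
from dataclasses import dataclass


@dataclass
class FlowStats:
    """Per-flow statistics observable without reading payloads (hypothetical)."""
    mean_packet_bytes: float
    duration_s: float
    bytes_up: int
    bytes_down: int
    peer_count: int
    interarrival_jitter_ms: float


def classify(flow: FlowStats) -> str:
    """Toy rule-based classifier; all thresholds are illustrative guesses."""
    lo, hi = sorted((flow.bytes_up, flow.bytes_down))
    symmetric = hi > 0 and lo / hi > 0.5

    # P2P: more upload than download, spread over a swarm of partners.
    if flow.peer_count > 10 and flow.bytes_up > flow.bytes_down:
        return "p2p"
    # VoIP: bilateral streams of short packets at regular intervals.
    if flow.mean_packet_bytes < 300 and flow.interarrival_jitter_ms < 5 and symmetric:
        return "voip"
    # Disk backup: upload-dominated and running for a very long time.
    if flow.duration_s > 3600 and flow.bytes_up > 10 * flow.bytes_down:
        return "backup"
    # Homework, legal briefs, email: a brief upload-dominated transfer.
    if flow.duration_s < 60 and flow.bytes_up > flow.bytes_down:
        return "short_upload"
    return "unclassified"
```

Note that the homework upload and the disk backup land in different classes on duration alone, which is the distinction the upload complaint overlooks. A production classifier would use many more features and a fallback for flows it can’t place.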

Van Schewick also does violence to the topic with her facile use of the term “congestion” in her recommended amendment: “[EU should] prohibit ISPs from differentiating among classes of applications unless it’s necessary to manage congestion…” How are we to define “congestion” in the context of Internet traffic management? It’s not as easy as identifying a traffic jam.

Congestion, you see, depends on application requirements. VoIP needs its packets delivered within 100 – 200 milliseconds or thrown away. Netflix, on the other hand, can tolerate seconds of delay and high packet loss before the user notices anything amiss. So any moment in which an application’s service requirements are not met is a moment of congestion. And how long does the moment have to last before the ISP is justified in taking action to remedy the problem? Van Schewick has no comment on that, but her fourth amendment suggests that ISPs must only react to deep, persistent congestion.

Amendment 4, Impending Congestion: “The Parliament should adopt amendments that limit ISPs’ ability to discriminate against traffic to prevent impending congestion.”

Her fear is that ISPs can declare “impending congestion, must manage” any old time they want. This wouldn’t be bad, insofar as application needs are what they are regardless of network conditions, but she makes an odd complaint: “Since the meaning of “impending” is not clearly defined, this provision opens the floodgates for managing traffic at all times.” But don’t we need to define “congestion” first? And if we do, isn’t it rather obvious when it’s “impending?”

Each application has thresholds for desired service quality, acceptable service quality, and intolerable quality. If the service quality is going in the wrong direction at a high rate of speed, congestion is either “impending” or “happening.” This is a statistical judgment that’s within ISP competence to evaluate. In fact, it has to be because one application’s congestion is another application’s “situation normal.” So she’s complaining about the wrong term.
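Here is a minimal sketch of that statistical judgment, assuming delay is the only quality metric and collapsing the three quality tiers into a single per-application limit. The 200 ms VoIP figure comes from the range mentioned earlier; the streaming limit, the linear projection, and the `lookahead` parameter are assumptions for illustration only.

```python
# Per-application delay limits in milliseconds.
# VoIP uses the upper end of the 100-200 ms range cited above;
# the streaming figure is an assumed stand-in for "seconds of delay".
LIMIT_MS = {
    "voip": 200.0,
    "streaming": 5000.0,
}


def congestion_state(app: str, delays_ms: list[float], lookahead: int = 3) -> str:
    """Label recent delay samples as 'normal', 'impending', or 'happening'.

    The trend is a straight line through the first and last samples,
    projected `lookahead` samples ahead (a deliberately crude estimate).
    """
    limit = LIMIT_MS[app]
    latest = delays_ms[-1]
    if latest > limit:
        return "happening"
    slope = (delays_ms[-1] - delays_ms[0]) / max(len(delays_ms) - 1, 1)
    if latest + slope * lookahead > limit:
        return "impending"
    return "normal"
```

Fed the same samples, say 80, 120, and 160 ms, the function reports “impending” for VoIP and “normal” for streaming, which is exactly the sense in which one application’s congestion is another’s “situation normal.”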

She’s also advocating a ban on the technical system that prevents Internet overload and assures performance today, Random Early Detection (RED). RED, and its variants Weighted RED, Adaptive RED, et al., prevent the Internet from getting into deep and massive periods of congestion by semi-randomly slowing down sending rates as data links approach capacity. But that’s not RED’s main purpose.

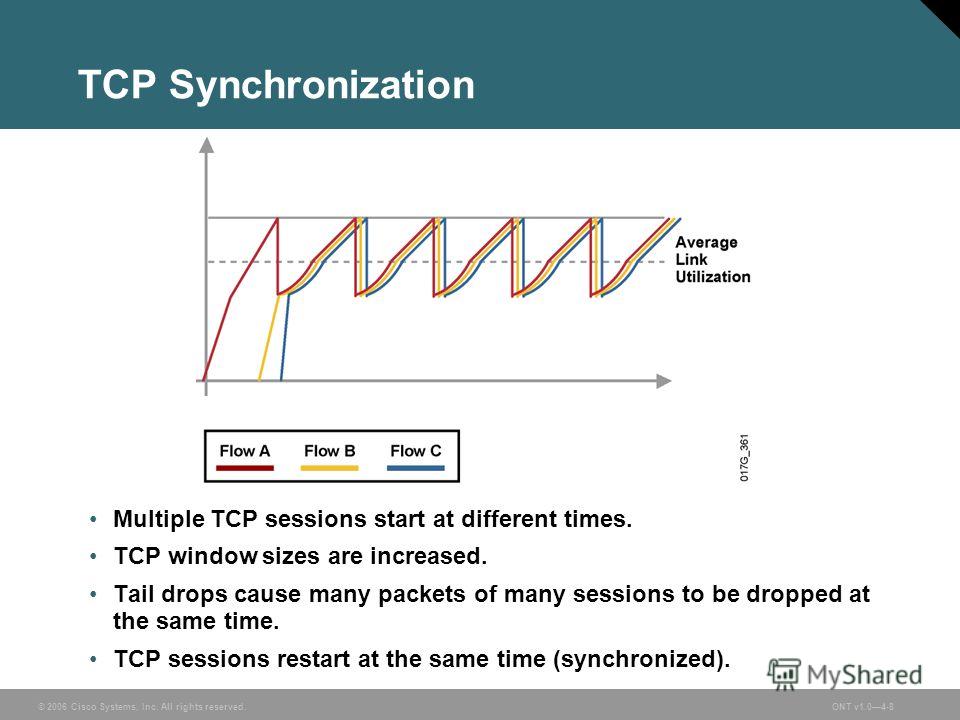

RED is carried out on ISP routers to remedy a nasty side-effect of the original Jacobson’s algorithm known as “TCP global synchronization.” Jacobson’s Algorithm makes TCP senders reduce their transmission rate when the network discards a packet. Deep congestion means very many senders lose packets at the same time and slow down. They then speed up as the congestion quickly lifts, but as traffic load is then increasing exponentially, deep congestion happens again. And the senders all slow way, way down and then they all speed up again.

This is not good because it lowers the “mean link utilization” well below its capacity. Because the senders are backing off and speeding up, average throughput is well below link capacity. So TCP is preventing TCP from working well. RED picks out streams to slow down on a random basis in hopes of raising average utilization.
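The core of RED fits in a few lines. The sketch below is a simplified version: it keeps the moving average of queue length and the linear drop-probability ramp between two thresholds, but omits the refinements in the original design that space drops evenly and handle idle periods. The function names and the default weight are illustrative choices, not any router vendor’s configuration.

```python
import random


def update_average(avg_queue: float, current_queue: float, weight: float = 0.002) -> float:
    """Exponentially weighted moving average of the queue length."""
    return (1 - weight) * avg_queue + weight * current_queue


def red_drop_probability(avg_queue: float, min_th: float, max_th: float, max_p: float) -> float:
    """Drop probability as a function of average queue length.

    Below min_th nothing is dropped; at or above max_th everything is;
    in between the probability rises linearly from 0 to max_p.
    """
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0
    return max_p * (avg_queue - min_th) / (max_th - min_th)


def should_drop(avg_queue, min_th, max_th, max_p, rng=random.random) -> bool:
    """Decide the fate of one arriving packet."""
    return rng() < red_drop_probability(avg_queue, min_th, max_th, max_p)
```

Because the drops begin while the average queue sits between the two thresholds, a few randomly chosen senders slow down before the buffer overflows, so they don’t all back off in lockstep. That early action is by definition a response to impending rather than actual congestion.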

So RED is a necessary management function that’s done all over the Internet well before congestion happens to most applications, and van Schewick apparently wants to ban it. She probably doesn’t mean to, but the language she’s proposed would make RED questionable.

So what we have here is a proposal that would genuinely break the Internet. It appears that the MEPs understand the Internet better than the director of the Stanford Center for Internet and Society and the FCC do. That’s depressing.