Van Schewick’s Alternate History of the Internet

Professor Barbara van Schewick is as dedicated to the cause of net neutrality as anyone you’ll ever meet. While the Wheeler FCC worked on the 2015 Open Internet Order, she was all over the process:

While teaching a full load at Stanford, [van Schewick] flew to Washington almost monthly and had more than 150 meetings at Congress, the FCC, and the White House. No one individual met more often with the White House or FCC on the issue, according to public records.

She takes the repeal of the 2015 order personally, perhaps even more so than the man who signed it, Tom Wheeler. She’s so deeply involved in the issue that she appears to have lost her academic objectivity.

Alternate History

In recent work in Fortune magazine and Medium, van Schewick wastes no time in creating a fictitious history:

On Wednesday November 22, FCC Chairman Ajit Pai published his draft order outlining his plan to undo the net neutrality protections that have been in place in the U.S. since the beginning of the Internet.

The reality, of course, is that the Pai draft reverts the state of Internet regulation to exactly where it was from the time the DC Circuit struck down the 2010 Open Internet Order until the 2015 order took effect in June 2015.

Real History

At that point, Internet Service was classified as a Title I Information Service, just as it had been since April, 1998 when the Kennard FCC wrote this in its Universal Service Report:

73. We find that Internet access services are appropriately classed as information, rather than telecommunications, services. Internet access providers do not offer a pure transmission path; they combine computer processing, information provision, and other computer-mediated offerings with data transport…

75. We note that the functions and services associated with Internet access were classed as “information services” under the MFJ. Under that decree, the provision of gateways (involving address translation, protocol conversion, billing management, and the provision of introductory information content) to information services fell squarely within the “information services” definition. Electronic mail, like other store-and-forward services, including voice mail, was similarly classed as an information service. Moreover, the Commission has consistently classed such services as “enhanced services” under Computer II. In this Report, we address the classification of Internet access service de novo, looking to the text of the 1996 Act…

[h/t Brent Skorup] The Modified Final Judgment dates to 1982, and the Computer II Final Decision was issued in 1980, nearly three years before the TCP flag day that’s one of the Internet’s three birthdays. Title I is the historical norm for the classification of Internet Service.

Does the Pai Order Leave the Government Powerless?

Van Schewick claims, as do many others, that the reclassification leaves the FCC and the states powerless to protect us from abuse by ISPs:

[Chairman Pai’s] proposal would leave both the FCC and the states powerless to protect consumers and businesses against net neutrality violations by Internet Service Providers (ISPs) like Comcast, AT&T, and Verizon that connect us to the Internet.

This isn’t true either. The order takes the transparency provisions that are part of the 2010 and 2015 orders and makes them stronger.

It can do this because the DC Circuit said it’s legitimate for the FCC to require these disclosures from Title I services. [Note: This is concerning to some because it allows the Commission to demand transparency from Internet platforms such as Facebook and Twitter, but that’s orthogonal.]

Requiring ISPs to disclose their use of blocking, throttling, and paid prioritization gives consumers a way to know which ISPs are behaving in a “neutral” manner and which aren’t. It also allows the FCC to sanction ISPs who engage in “secret throttling” as Comcast did in 2007.

Complaints About Blocking

The enhanced transparency rule also allows the FTC to get involved in a new way because it has authority over false and deceptive advertising. But critics say this isn’t enough because there needs to be an outright pre-emptive ban on blocking, throttling, and paid prioritization.

This remains to be proved, however. We haven’t seen examples of US ISPs blocking web sites; the leading advocacy groups pushing for net neutrality regulations can’t find any, and have to go to foreign countries to find them, places where government has ordered the blocks. US courts have ordered certain websites blocked, but only for good reason. So I have to question the need for pre-emptive regulations against a practice with no history of abuse.

If a US ISP blocks a website for anti-competitive reasons – a claim that’s always easy to make – the FTC would have no problem reversing the block and penalizing the ISP. If an ISP were to block a site for non-economic reasons, the enforcement might be problematic, but that’s far into speculation land. Sometimes things happen that are less than ideal, but that’s life. But if this sort of nuisance ever reached troublesome proportions, we do have a Congress with the power to make laws.

Complaints About Throttling

The same logic applies in the case of unfair throttling, the classic example of which is the notorious BitTorrent case the FCC examined in 2008. Net neutrality campaigners insist this was a case where an ISP was so worried that the (mostly pirated, but occasionally lawful) video transported over BitTorrent would cannibalize its video business that it simply blocked the offending protocol.

The FCC bought this rather speculative argument in its 2008 order:

For example, if cable companies such as Comcast are barred from inhibiting consumer access to high-definition on-line video content, then, as discussed above, consumers with cable modem service will have available a source of video programming (much of it free) that could rapidly become an alternative to cable television. The competition provided by this alternative should result in downward pressure on cable television prices, which have increased rapidly in recent years.

We know this argument is weak because BitTorrent still has not emerged as a significant source of video programming in the years following the FCC’s order that ostensibly protected it. In fact, YouTube, Netflix, and Amazon have emerged as cable competitors by offering licensed content from very expensive server farms. When the cost of video programming services is dominated by content costs, lower distribution costs don’t matter a great deal. BitTorrent’s advocates were actually promoting piracy.

The issue in the BitTorrent case that has lasting importance is the lack of disclosure, remedied by the FCC’s current and proposed regulations. Complaints have also been made about the throttling of wireless services that were sold as “unlimited”, but these are also transparency complaints.

In reality, no ISP wants to take the heat of being charged by advocacy groups with blocking or throttling; that sort of thing is bad for business and bad for share prices. Even though many Americans don’t have great choices for wired broadband, many more do, so market forces make a difference. The ability of consumer protests to affect share prices should not be underestimated.

Regulating Interconnection

The FCC doesn’t have regulations on the freely bargained interconnection agreements that make the Internet an internet. Regardless of the regulations on blocking and throttling in today’s Open Internet regulations, ISPs and the larger networks owned by the Big Five are free to negotiate their own terms for interconnection and traffic exchange (with the exception of the murky notion of “paid prioritization”). Van Schewick doesn’t want the FCC to get rid of this power even though it has never used it:

Finally, the FCC would lose the authority to oversee the points where ISPs like AT&T and Verizon connect to the Internet at large, which ISPs have used to slow down and extract fees from online services in the past.

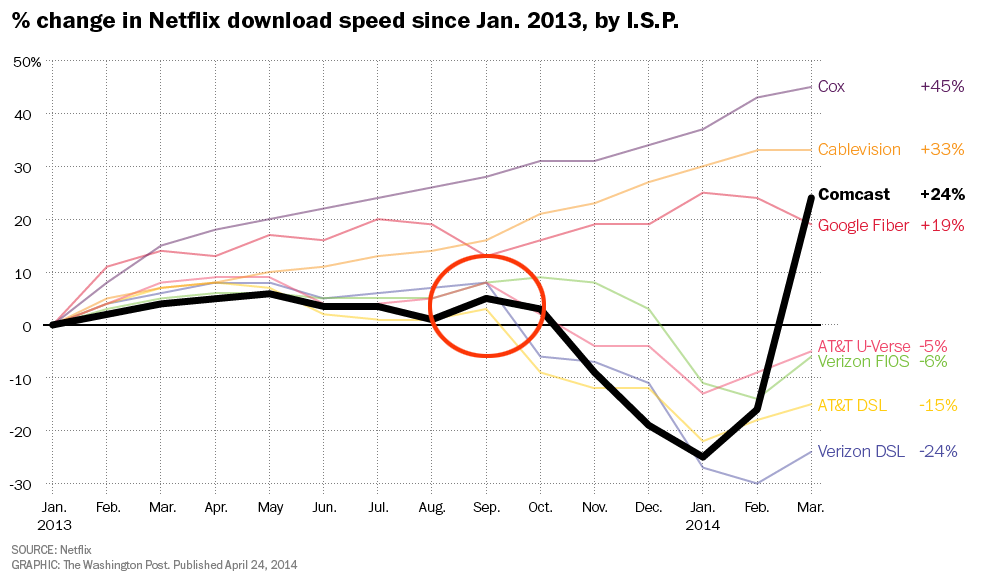

The assertion’s factual foundation is flimsy and unsourced, but it probably refers to the Netflix paid peering disputes of 2014. Netflix claimed Verizon, Comcast, and AT&T refused to peer with it – interconnect without fee. The company said that traffic from its new, private, Content Delivery Network was degraded because it had to pay transit networks such as Cogent to carry it for them:

Comcast has leveraged congestion at interconnection points to shift OVDs, including Netflix, onto paid routes into its network or direct paid interconnection agreements with Comcast.

Netflix also had the option of “paid peering” agreements with the ISPs, but it didn’t want to pay them either. The complaint was timed to coincide with the proposed merger of Comcast and Time Warner Cable, helping to scuttle the deal. It also weighed heavily on the FCC during the period when the 2015 Open Internet Order was drafted.

Netflix Created the Crisis

This peering crisis was phony. Streaming video analyst Dan Rayburn explains:

While Netflix was able to convince smaller regional ISPs to voluntarily offer settlement free peering, most large ISPs maintain national/international infrastructures, which require peering policies and consistent business practices to ensure fair and equal treatment of traffic. For the providers Netflix did not qualify for peering, Netflix moved their traffic onto very specific Internet paths that were not capable of handling their massive load and caused the congestion that impacted customers (as highlighted in Figure 2 below). In other words, if Netflix receives free peering, ISP customers receive good performance and high rankings and blogging praise from Netflix. But if Netflix does not receive free peering, ISP customers do not receive good performance and get low rankings and shame from Netflix.

When Netflix relented and signed paid peering agreements with major ISPs, it shifted its traffic to uncongested peering centers and its performance dramatically improved.

Cox, Cablevision, and Google Fiber gave Netflix free interconnection all along, but Comcast, AT&T, and Verizon required modest payments.

The credulous media not only accepted the Netflix version of the story, they touted it as proof that the FCC needed to regulate peering. The 2015 Open Internet Order asserted FCC control over peering but refrained from issuing rules:

203. At this time, we believe that a case-by-case approach is appropriate regarding Internet traffic exchange arrangements between broadband Internet access service providers and edge providers or intermediaries—an area that historically has functioned without significant Commission oversight.

This followed years of denials by FCC officials that it would ever regulate peering (see comments).

The Myth of Inherent Neutrality

Van Schewick’s chief argument for net neutrality is the claim that the Internet was meant by its designers to be neutral, so any deviation from neutrality changes it in an impermissible way:

Since its inception, Internet access in the U.S. has been guided by one basic principle: ISPs that provide the on-ramps to the Internet should not control what happens on the Internet.

Originally, this principle was built into the architecture of the Internet. In the mid-1990s, however, technology emerged that allowed ISPs to interfere with the applications, content, and services on their networks.

This is a peculiar claim because there weren’t any ISPs at all for the first two decades of the Internet’s existence. ARPANET was a single, unified system in which there was one and only one networking provider, BBN. After ARPANET began running TCP/IP, ARPANET was replaced by the NSFNET backbone, still not really an ISP. Most of the early users were on college campuses where they had computer terminals on their desks or in computer rooms. There was no reason for the designers of the Internet to give any thought to ISPs because they didn’t exist.

Private Backbones

The mid-’90s saw the birth of private backbones as the NSFNET was decommissioned. The architecture of the Internet had been settled since the late ’70s, before ISPs. So this is revisionist history and the Internet is not inherently neutral. Neutrality is an operational choice, not a design choice.

The Internet was different from other networks in that its endpoints – its host devices – were computers rather than telephones or teletype terminals. Because computers can run software, some of the Internet’s control system is deployed on the host devices that sit at the network edge. These computers take part in the Internet’s control, but they do this in cooperation with the Internet’s internals.

It’s not either/or, it’s both/and.

Conclusion

It’s peculiar to me that someone who has spent as much time on Internet policy as Professor van Schewick has should have internalized so many myths and so few facts. I can only surmise that she has depended on oral histories and personal biographies for her theory of the Internet instead of historical facts.

I wish she would spend some time unravelling the nature of network architecture, the development of the Internet out of ARPANET and CYCLADES, and the evolution of Internet law from Computer II to the MFJ to the ’98 Universal Service Report and on to the Internet Policy Statement.

Her reading of merger conditions and spectrum auctions is also too cherry-picked to help. Out of all the spectrum auctions the US has run, only one block in one auction was encumbered by neutrality conditions, but she claims that was the norm.

At some point, every advocate needs to learn something about the system lest they argue for non-productive outcomes. That’s where van Schewick is now.