The 700 MHz Device Subsidy Plan

The FCC is considering new rules for mobile devices that operate in the 700 MHz band per a Notice of Proposed Rulemaking (NPRM) titled “Promoting Interoperability in the 700 MHz Commercial Spectrum.” It’s unusual for the agency to impose rules on devices built by such firms as Apple, Samsung, Nokia and others, so there’s some sharp disagreement about whether it has the authority to do such a thing. We won’t know the answer for some time, but for the moment it’s practical to examine the proposed rules on the assumption that the FCC can find the authority.

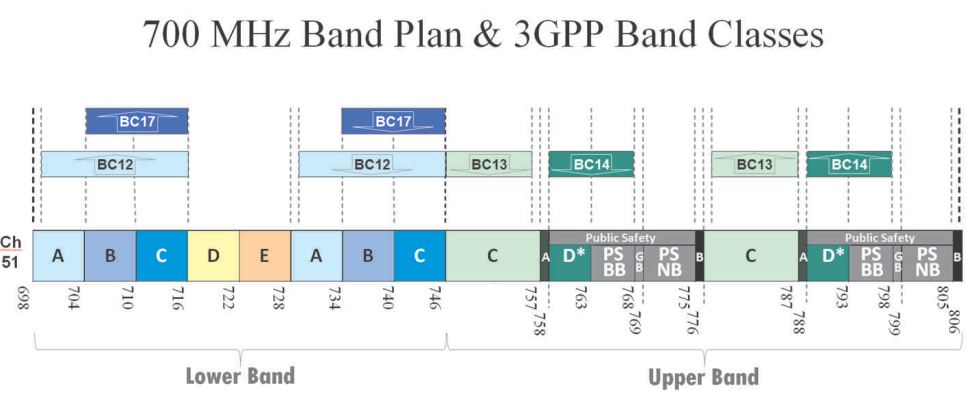

The background is somewhat complex. The FCC’s last big spectrum auction took place in 2008, when the “digital dividend” freed up airwaves that had formerly been used by television. Digital TV channels can be placed closer together than analog channels were, so a more efficient packing scheme made this spectrum available for sale. The spectrum was arranged in five blocks, called A-E, in two ranges, low and high. Most of the blocks consisted of pairs, separated so that one half of the pair could handle transmission while the other half handled reception, but the E block was unpaired. The D block was not successfully auctioned: the FCC wanted to sell it as a single nationwide license, and the reserve price wasn’t met. It has since been given to public safety.

Here’s a handy map that shows how the pairing works.

Note that the A block consists of 6 MHz next to Channel 51 at 698 – 704 MHz and another 6 MHz at 728 – 734 MHz. The E block is a single slice without a pair. And there is C block spectrum in both the lower band and the upper band, with the upper band slices nearly twice as wide as the lower band slices.
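To make the layout concrete, here is a minimal Python sketch of the blocks discussed above. The A block edges and its position right next to Channel 51 come from the text. The edges used for the other blocks (lower B and C, E, and upper C) are my reading of the FCC’s 700 MHz band plan and should be checked against it; the `Block` class and its method names are illustrative only. The sketch computes each block’s total bandwidth, the spacing between the two halves of a pair, and how far the block’s lowest edge sits above Channel 51.

```python
from __future__ import annotations

from dataclasses import dataclass

# UHF TV channel 51 occupies 692-698 MHz.
CHANNEL_51 = (692.0, 698.0)


@dataclass(frozen=True)
class Block:
    name: str
    first: tuple[float, float]                 # lower-frequency half: (start, stop) in MHz
    second: tuple[float, float] | None = None  # paired half, or None if the block is unpaired

    def bandwidth_mhz(self) -> float:
        """Total spectrum in the block, counting both halves of a pair."""
        halves = [self.first] if self.second is None else [self.first, self.second]
        return sum(stop - start for start, stop in halves)

    def duplex_spacing_mhz(self) -> float | None:
        """Offset between the starts of the two halves; None for unpaired blocks."""
        return None if self.second is None else self.second[0] - self.first[0]

    def gap_above_channel_51_mhz(self) -> float:
        """Spectrum separating the block's lowest edge from Channel 51's top edge."""
        return self.first[0] - CHANNEL_51[1]


# Assumed band edges (see lead-in); only the A block edges come from the article.
BLOCKS = {
    b.name: b
    for b in [
        Block("Lower A", (698, 704), (728, 734)),
        Block("Lower B", (704, 710), (734, 740)),
        Block("Lower C", (710, 716), (740, 746)),
        Block("Lower E", (722, 728)),
        Block("Upper C", (746, 757), (776, 787)),
    ]
}

for b in BLOCKS.values():
    print(f"{b.name}: {b.bandwidth_mhz()} MHz total, duplex spacing {b.duplex_spacing_mhz()}, "
          f"{b.gap_above_channel_51_mhz()} MHz above Channel 51")
```

Run as-is, it reports no separation at all between Channel 51 and the A block, which is the crux of the dispute, and 22 MHz of upper C spectrum against 12 MHz for each lower paired block.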

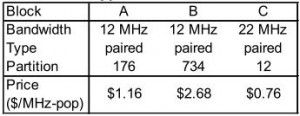

The A-C blocks sold for wildly different prices because the A and C blocks had significant restrictions: the A block was directly adjacent to active TV transmitters on Channel 51 in most urban markets, and the FCC imposed artificial net neutrality restrictions on the C block in accord with the fashion of the time. The average prices per MHz of spectrum per person covered (“per megahertz pop,” or MHz-pop, in the lingo) were:

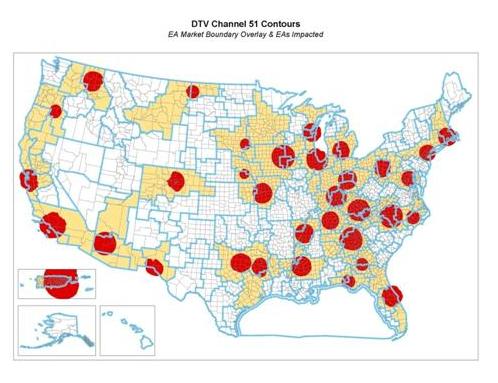
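The MHz-pop unit normalizes an auction price by both the amount of spectrum and the number of people the license covers, so that licenses of different sizes and markets can be compared. A minimal sketch, with a hypothetical function name and made-up license figures rather than actual auction data:

```python
def price_per_mhz_pop(price_usd: float, bandwidth_mhz: float, population: int) -> float:
    """Auction price divided by (MHz of spectrum x people covered by the license)."""
    return price_usd / (bandwidth_mhz * population)


# Hypothetical license: a paired 6 + 6 MHz block covering 2 million people, sold for $18 million.
print(price_per_mhz_pop(18_000_000, 12, 2_000_000))  # 0.75
```

A block with a handicap such as an adjacent high power TV transmitter shows up as a lower dollars-per-MHz-pop figure even when the absolute price of a license looks large.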

The biggest winner of B block spectrum was AT&T, the biggest winner of C block spectrum was Verizon, and the A block was mainly won by regional networks such as MetroPCS, US Cellular, and Cellular South. AT&T paid a significant premium to be free of the net neutrality rules and of the interference caused by the high power TV transmitters on Channel 51 in urban markets, and the regional carriers who could live with Channel 51 got a discount. Verizon did best of all by accepting the net neutrality rules. So the expected flexibility of each block played a big role in determining its auction price.

Here’s a map of the Channel 51 transmission contour.

Spectrum is harmonized around the world according to “Band Classes” devised by the 3GPP, the standards body that defines such things as LTE, the new 4G standard that’s hitting the U.S. market now in a big way and starting to appear in the rest of the world in a much smaller way. There are three band classes of interest for 700 MHz, identified in the first diagram as BC 12, BC 17, and BC 13. Note that Band Class 17 is a subset of Band Class 12 that excludes the discount A Block, and BC 13 is distinct from, and does not overlap, classes 12 and 17.

At this stage, AT&T plans to resell devices conforming to Band Class 17 and Verizon to resell devices conforming to Band Class 13 (in the upper C Block). These devices will be able to use only their native, licensed networks, which means they won’t be capable of roaming onto other 700 MHz networks (except insofar as they also support other frequencies). Hence the notion of “interoperability”: 700 MHz devices will not roam or “interoperate” with any band classes and networks other than the ones they’re built for.
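The band class relationships described above come down to simple set membership. The sketch below models each band class as the set of 700 MHz blocks it covers, as the text describes them (BC 12: lower A, B, and C; BC 17: lower B and C; BC 13: upper C), and checks which of a network’s blocks a device can use. The block labels and the `usable_blocks` function are illustrative, and the model ignores any other frequency bands a device may also support.

```python
# Blocks covered by each 3GPP band class, as described in the article.
BAND_CLASSES: dict[str, frozenset[str]] = {
    "BC 12": frozenset({"Lower A", "Lower B", "Lower C"}),
    "BC 17": frozenset({"Lower B", "Lower C"}),
    "BC 13": frozenset({"Upper C"}),
}


def usable_blocks(band_class: str, network_blocks: set[str]) -> set[str]:
    """Blocks of a given network that a device built to `band_class` can use."""
    return set(network_blocks) & BAND_CLASSES[band_class]


# BC 17 is a strict subset of BC 12, and BC 13 shares nothing with either.
print(BAND_CLASSES["BC 17"] < BAND_CLASSES["BC 12"])              # True
print(BAND_CLASSES["BC 13"].isdisjoint(BAND_CLASSES["BC 12"]))    # True

# A hypothetical regional network licensed only in the lower A block:
print(usable_blocks("BC 17", {"Lower A"}))  # set()
print(usable_blocks("BC 12", {"Lower A"}))  # {'Lower A'}
```

A BC 17 phone finds nothing it can use on an A-Block-only network, while a BC 12 phone can use that network and the B and C blocks alike, which is the whole substance of the interoperability request.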

This irritates the small carriers who bought A Block spectrum at a discount, because they would like to use the same devices that AT&T and Verizon resell rather than more specialized devices tuned to their A Block frequencies and also capable of roaming onto the B and C blocks. Cellular South (now known as “C Spire”) is the only regional network to offer the iPhone to its customers, so the entire group of A Block winners is somewhat disadvantaged in terms of the very best devices, but there are a few Android devices adapted to their networks. MetroPCS offers LTE today with such devices. Chips are available to support Band Class 12, so there is no insurmountable technical hurdle to building Band Class 12 devices. Making them work well is a different matter, however.

Leaving aside the question of the propriety of the FCC essentially requiring AT&T and Verizon to subsidize handsets for the A Block carriers, and focusing on the technical details, raises some interesting issues.

We learned from the LightSquared issue that it’s never good to be dependent on a low power signal when you have a neighbor who uses a high powered one. Even though the signals are distinguishable from each other in terms of their patterns of digital bits, the radio energy of a high power transmitter can overwhelm receivers designed for low power signals. Part of the problem is out-of-band emission, energy that the powerful transmitter spills into neighboring frequencies. The other part is created inside the receiver itself, whose nonlinear components generate spurious signals when a strong neighbor overloads them. Both effects become significant when the power difference between the lower and higher powered transmissions is great.

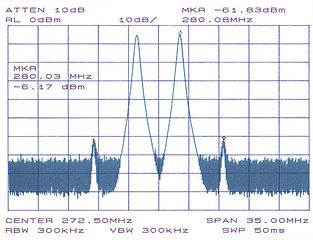

When multiple transmissions interact, receivers can experience “intermodulation” (IM) distortion, defined by Wikipedia as:

…the amplitude modulation of signals containing two or more different frequencies in a system with nonlinearities. The intermodulation between each frequency component will form additional signals at frequencies that are not just at harmonic frequencies (integer multiples) of either, but also at the sum and difference frequencies of the original frequencies and at multiples of those sum and difference frequencies.

If that’s not clear, here’s a diagram:

The diagram shows IM distortion as the two smaller spikes to the left and right of the two big spikes that represent the signals. The IM spikes in this example are significantly stronger than the background signals represented by the more solid lines.
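Where the IM spikes land follows directly from the definition quoted above: a nonlinearity driven by tones at f1 and f2 produces components at m·f1 + n·f2 for integers m and n, and the order of a product is |m| + |n|. The minimal sketch below enumerates the mixed products up to third order for two illustrative tones in arbitrary units; the function name `im_products` is hypothetical. It shows why third-order products are the troublesome ones: 2f1 − f2 and 2f2 − f1 land right beside the original signals, where a filter that passes the signals cannot remove them, while the second-order products fall far away.

```python
from itertools import product


def im_products(f1: float, f2: float, max_order: int = 3) -> dict[int, list[float]]:
    """Positive mixing products m*f1 + n*f2, grouped by order |m| + |n|.

    Only mixed terms (m and n both nonzero) are kept; pure harmonics of a
    single tone (2*f1, 3*f2, ...) are left out.
    """
    found: dict[int, set[float]] = {}
    for m, n in product(range(-max_order, max_order + 1), repeat=2):
        order = abs(m) + abs(n)
        if m == 0 or n == 0 or order > max_order:
            continue
        freq = m * f1 + n * f2
        if freq > 0:
            found.setdefault(order, set()).add(freq)
    return {order: sorted(freqs) for order, freqs in sorted(found.items())}


# Two illustrative tones in arbitrary units, 1 unit apart.
print(im_products(100.0, 101.0))
# {2: [1.0, 201.0], 3: [99.0, 102.0, 301.0, 302.0]}
```

The products at 99 and 102 are the two small spikes flanking the signals in a diagram like the one described above.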

There are a few ways to work around IM distortion. The easiest is to raise the power of the desired signal, which is accomplished in cellular systems by siting towers in a ring around the interfering TV transmitter and by increasing the battery draw in mobile devices. There are limits to this approach because towers are expensive and cellular systems are very low power compared to those TV transmitters on Channel 51.
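Why proximity matters can be sketched with the free-space (Friis) path loss formula, FSPL(dB) = 20·log10(d_km) + 20·log10(f_MHz) + 32.44, comparing the power a handset receives from a cell site with the power it receives from a TV transmitter. Everything here is an assumption for illustration: the transmitter powers and distances are hypothetical, free space is an optimistic idealization of real propagation, both signals are treated as being at 700 MHz, and the function names are made up.

```python
import math


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB, with distance in km and frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


def desired_to_undesired_db(cell_erp_dbm: float, cell_km: float,
                            tv_erp_dbm: float, tv_km: float,
                            freq_mhz: float = 700.0) -> float:
    """Received cell (desired) power minus received TV (undesired) power, in dB."""
    desired = cell_erp_dbm - fspl_db(cell_km, freq_mhz)
    undesired = tv_erp_dbm - fspl_db(tv_km, freq_mhz)
    return desired - undesired


# Hypothetical: a 1 kW (60 dBm) cell site vs. a 1 MW (90 dBm) TV transmitter 5 km away.
for cell_km in (5.0, 2.0, 0.5):
    print(cell_km, round(desired_to_undesired_db(60, cell_km, 90, tv_km=5.0), 1))
```

Because path loss grows with 20·log10 of distance, every halving of the distance to the cell site recovers about 6 dB, so closing a 30 dB power gap in this toy model takes a cell site roughly 30 times closer to the handset than the TV transmitter is. That is the logic behind ringing the TV site with towers, and why the number of towers needed is so contested.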

Engineers hired by the regional carriers seeking the device subsidy claim that three towers close to each TV tower will do the job, but AT&T’s engineers put the number closer to 12. Another way is to add filters to the devices, which raise the cost and increase the battery drain, and yet another is to use more sophisticated signal processing, which once again reduces battery life. All of these methods require extensive field testing, so there is a significant overhead in terms of the time to market for new devices.

This brings us to this question: Is it reasonable for the FCC to add expense to the smartphones that AT&T and Verizon sell, to reduce their battery life, and to delay the introduction of new devices built by Apple, the Android crowd, and the Nokia/Microsoft partnership in order to enable roaming between regional networks and national ones? “Reasonable” is in the eye of the beholder, of course.

If you’re a regional network, the proposed interoperability rule costs you nothing and impairs the users of the national networks, so you’re happy. If you’re a national network, the rule increases your costs and irritates your customers so you’re not happy. And if you’re a device manufacturer it impairs your ability to get new devices to market so you’re not happy either. The “interoperability” mandate will also stress the analog engineering skills of the device makers, and that’s an area where they don’t need any more problems. Analog engineers are in short supply, which is evident every time a smartphone shows poor antenna performance.

The cheapest and easiest way around this problem would be for the FCC to adopt the same solution they went for in the LightSquared case: They can take Channel 51 off the air. With the interference source gone, Apple can simply build all of their 700 MHz devices to function on the A, B, and upper C blocks without special testing and engineering for the A Block. If the FCC doesn’t want to do this, we’ll have to evaluate whether their reasons for keeping Channel 51 alive are more compelling than the reasons the device manufacturers have for not wanting to filter the interference it spills into the A Block.

As it stands, the A Block licensees have the power to buy as much interoperability as they want from the companies that build their smartphones. They’re going to pay higher prices for these Swiss Army knife phones than the more narrowly tailored phones used by the national carriers, but they got a deal on their spectrum.

The FCC is looking for precise estimates of the costs of an interoperability mandate, but cost estimates are only part of the story; the rationale behind the mandate matters as well. The underlying assumption seems to be that the consumer buys a smartphone and keeps it for a decade, roaming at will and changing carriers every time a great deal is available. This is clearly not the way the smartphone market works today, or you wouldn’t see people camping out at the Apple store to get the new iPhone.

I fear this is simply “Wireless Carterfone,” an attempt to relive the glory days of 1968, when the courts and the FCC required interoperability for the telephone network. While that decision led to cheaper and more plentiful fax machines, modems, and answering machines, it’s not really parallel to the situation we have in the rapidly changing world of cellular technology. We have to think about how this mandate will affect the roll-out of 5G and 6G services as well as faster and better smartphones, even if we can convince ourselves that it makes sense to have the national carriers subsidize the regionals.

Requiring devices to conform to Band Class 12 (which includes support for the A Block) instead of Band Class 17 (which excludes it) means both networks and devices would have to cope with potential interference from Channel 51 as well as from high-power transmitters in the E Block. This is the primary concern of the NPRM, as it should be, given that it could translate into more complex network configurations, including additional sites, as well as devices that are more expensive and have poorer battery performance. It is crucial that these interference concerns be addressed before decisions are made with respect to interoperability.

[…] came across an article posted on High Tech Forum.com about one way to address the interoperability problem: eliminate Channel 51. It seems that channel […]

Peter … I agree that the FCC’s recent Interop NPRM is an important first step to help resolve the conflicting technical claims over the viability of a BC 12 device ecosystem … Is the original justification for the establishment of BC 17 still sustainable? … Is the technical predicate for BC 17 migration to BC 12 already foreseeable and, if so, within what time frame? … The license holders of Lower A, B and C block spectrum hold valuable spectrum which should be put to use in the near term, if possible, to meet rapidly increasing spectrum demand … We should not lose sight of the fact that A, B and C Block licensees, large and small, benefit if a BC 12 device ecosystem develops sooner rather than later … With the availability of new auction spectrum increasingly problematic, deploying network capacity on spectrum resources which have already been licensed is both logical and for some carriers the only spectrum available to meet their current broadband needs … This isn’t a problem affecting only a group of smaller carriers … As you know, VZW holds 25 Lower A Block licenses (covering 147,921,370 pops) and 77 Lower B Block licenses (covering 46,312,686 pops) … Creating a BC 12 device ecosystem and supporting the earliest possible nationwide deployment on BC 12 is essential to help meet the FCC’s spectrum goals in the FCC’s NBP … George

The writer is totally incorrect about “decaying radio signals” causing intermodulation distortion. This glaring error makes the entire article suspect.

I second what Mr. Leikheim said. Mr. Bennett is fundamentally confused about the nature of intermodulation interference, admittedly a difficult subject. However, writing absurd statements is not the answer.

First, in almost all cases, what is involved is more properly called “receiver-generated intermodulation” because it is actually generated in the receiver. The diagram shows what is happening INSIDE THE RECEIVER, not over the air.

Intermodulation interference requires several factors to happen simultaneously:

1) a weak desired signal

2) multiple nearby and strong undesired signals

3) a specific mathematical relationship between the desired signal’s frequency and that of the undesired signals

4) a receiver that does not filter out the undesired signals

5) a nonlinearity in the receiver that creates the intermodulation when the other conditions are present. A high “third order intercept point” specification minimizes this.
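Point 5 can be made quantitative with the standard two-tone relation for the input-referred third-order product, P_IM3 = 3·P_in − 2·IIP3 (all in dBm), where IIP3 is the input third-order intercept point and P_in is the power of each of two equal interfering tones. The minimal sketch below assumes the receiver is operating well below compression and that condition 3 holds, so the product lands on the desired channel; the signal levels are hypothetical and the function names are illustrative.

```python
def im3_input_referred_dbm(interferer_dbm: float, iip3_dbm: float) -> float:
    """Input-referred third-order IM level for two equal-power interfering tones.

    Standard two-tone relation P_IM3 = 3*P_in - 2*IIP3, valid below compression.
    """
    return 3 * interferer_dbm - 2 * iip3_dbm


def signal_to_im3_db(desired_dbm: float, interferer_dbm: float, iip3_dbm: float) -> float:
    """Desired signal power minus the IM3 product, assuming the product is co-channel."""
    return desired_dbm - im3_input_referred_dbm(interferer_dbm, iip3_dbm)


# Hypothetical: a weak -85 dBm desired signal and two strong -30 dBm interferers.
for iip3 in (-10.0, 0.0):
    print(iip3, signal_to_im3_db(-85.0, -30.0, iip3))
```

Two consequences stand out. Each 1 dB rise in the interferers raises the IM product by 3 dB, so strong neighbors hurt disproportionately; and each 1 dB of extra IIP3 buys 2 dB of margin, which is why a high intercept point (at a cost in current drain) is the receiver designer’s main defense.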

Thank you for pointing out the poorly phrased sentence on radio interference. I’ve separated it into two sentences, one on decay harmonics and the other on IM. The diagram of IM and the definition of IM from Wikipedia remain intact.

Even if the error were “grave,” it’s a bit desperate to say it “makes the entire article suspect.”

As much as I really hate to be a pest, please explain the physics which support this:

“As radio waves decay with distance, they give off interference energy above the frequency of the original signal, and this can be significant when the power difference is great between the lower and higher powered transmissions.”

Let’s start with multipath. A signal appears at a receiver from two paths, one direct and the other reflected, with a time offset of a quarter wavelength. The reflected signal is a copy of the direct signal. What is the frequency distribution of the energy measured at the receiver?
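The commenter’s question can be checked numerically. The sketch below, a minimal illustration with made-up sizes (`N`, `K0`) and a made-up reflection amplitude of 0.6, adds a single tone to a copy of itself lagging by a quarter cycle and takes a naive DFT: energy appears only at the original tone’s frequency bins. Passing the same tone through a memoryless cubic nonlinearity, a stand-in for a nonlinear receiver stage, produces a new component at three times the frequency. This models a single unmodulated tone; for a modulated signal, multipath reshapes the spectrum within the signal’s own band (frequency-selective fading) but, being linear, does not create energy at new frequencies.

```python
import cmath
import math

N = 64   # samples per frame (illustrative)
K0 = 5   # tone frequency in DFT bins (illustrative)


def dft_magnitudes(x: list[float]) -> list[float]:
    """Naive O(N^2) discrete Fourier transform; returns |X[k]| for every bin k."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n)]


def occupied_bins(mags: list[float], tol: float = 1e-6) -> list[int]:
    """Bins whose magnitude exceeds a small numerical tolerance."""
    return [k for k, m in enumerate(mags) if m > tol]


# Direct path plus a weaker reflected copy lagging by a quarter cycle (90 degrees).
direct = [math.cos(2 * math.pi * K0 * t / N) for t in range(N)]
reflected = [0.6 * math.cos(2 * math.pi * K0 * t / N - math.pi / 2) for t in range(N)]
multipath = [d + r for d, r in zip(direct, reflected)]

# The same direct signal passed through a memoryless cubic nonlinearity.
distorted = [s + 0.2 * s**3 for s in direct]

print(occupied_bins(dft_magnitudes(multipath)))  # [5, 59]
print(occupied_bins(dft_magnitudes(distorted)))  # [5, 15, 49, 59]
```

Bins 59 and 49 are the negative-frequency mirrors of bins 5 and 15. Only the nonlinear case adds anything new (bin 15, from the identity cos³θ = (3cos θ + cos 3θ)/4).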

A 6 MHz guard band! Too wasteful of scarce spectrum. Other services have accommodated adjacent-channel television stations through careful siting and filtering of the TV transmitter to severely restrict OOBE. Channel 69 was adjacent to the 800 MHz land mobile band and interfered with base station receivers. A combination of filtering of the TV signal and use of remote receivers eventually reduced the problem to manageable dimensions. Interference that cannot be reduced by such methods can be addressed in the handset receiver. Receiver performance has too long been ignored in the spectrum management calculus. We can no longer afford to fashion spectrum policy around the lack of filtering and poor intermod performance of receivers. These are my views and not necessarily those of my employer.

I agree that receiver performance needs to be a part of spectrum regulation going forward, but the problem is that the phrase “receiver standards” rolls off the tongue much more easily than enforceable rules do. The lesson of the LightSquared proceeding is that protecting poorly designed, obsolete equipment can be very expensive. John Deere ignored the rules for ten years and got away scot-free while LS is in bankruptcy.

The regulations need to have some consistency, and the idea of taking Channel 51 off the air is simply that, an example of what would happen if recent precedent is applied consistently. I don’t actually want to see that, for the reasons you mention.